Stability AI, creator of Stable Diffusion and DreamStudio, recently announced that it has raised US$101 million in a funding round to support the development of open-source AI systems. The round was led by O’Shaughnessy Ventures LLC, Coatue, and Lightspeed Venture Partners. The London-based company will use the funds to accelerate the development of open AI models spanning language, image, audio, 3D, and video, for both consumer and global enterprise use cases.

Unveiled in August, Stable Diffusion is an open-source text-to-image generator similar to OpenAI’s DALL-E. Like most of its contemporaries, it promises to let billions of people produce beautiful art instantly. The model builds on the widely used latent diffusion model developed by CompVis, a research group at Ludwig Maximilian University of Munich, and RunwayML, a video-editing company. It also draws on insights from the conditional diffusion models of Katherine Crowson, lead generative AI developer at Stability AI, as well as from OpenAI’s DALL-E 2 and Google’s Imagen.

With the debut of independent research lab Midjourney’s self-titled product in July and OpenAI’s DALL-E 2 in April, AI image generators have become increasingly popular this year. In May, Google also unveiled Imagen, a text-to-image technology that is not yet accessible to the general public.

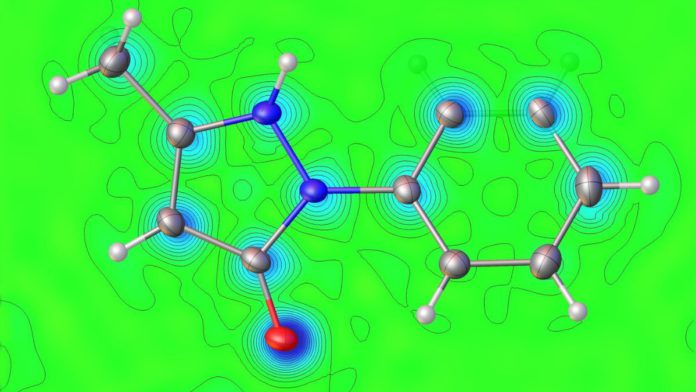

Although it is an image generator, Stable Diffusion does not use the autoregressive approach of systems like the original DALL-E. Autoregressive models generate an image piece by piece, sampling each token from a probability distribution conditioned on the tokens generated so far. Stable Diffusion instead uses a latent diffusion model (LDM). Rather than running the diffusion process directly on pixels, an LDM runs it in the latent representation space of an autoencoder, a neural network trained to compress images into compact representations and reconstruct them. This space holds the information needed to describe an image in compressed form, with similar data embedded close together. Generation starts from random noise in this space, which a neural network denoises step by step; the autoencoder’s decoder then turns the final latent representation into the full image.
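The generation process described above (start from noise in latent space, denoise step by step, then decode) can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not Stability AI’s code: the 64 x 64 x 4 latent shape matches Stable Diffusion v1 at 512 x 512, but the linear noise schedule is illustrative, the update rule is a deterministic DDIM-style step, `oracle_noise` is a hypothetical stand-in for the trained U-Net that already knows the target latent, and `toy_decoder` is a hypothetical stand-in for the real autoencoder’s decoder.

```python
import numpy as np

# Stable Diffusion v1 latent shape for 512x512 images: 512 / 8 = 64 per side, 4 channels.
LATENT_SHAPE = (64, 64, 4)
DOWNSAMPLE = 8


def make_alpha_bars(num_steps=50, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for an illustrative linear noise schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)


def ddim_sample(z, predict_noise, alpha_bars):
    """Deterministic DDIM-style reverse loop: denoise a latent from the noisiest step down."""
    for t in reversed(range(len(alpha_bars))):
        a_t = alpha_bars[t]
        a_prev = alpha_bars[t - 1] if t > 0 else 1.0
        eps = predict_noise(z, t)  # in a real model, a trained network predicts this noise
        z0_hat = (z - np.sqrt(1.0 - a_t) * eps) / np.sqrt(a_t)  # estimate of the clean latent
        z = np.sqrt(a_prev) * z0_hat + np.sqrt(1.0 - a_prev) * eps  # step to a less noisy latent
    return z


def toy_decoder(z, proj):
    """Hypothetical decoder: map 4 latent channels to RGB, then upsample each
    latent position to an 8x8 block of pixels."""
    rgb = z @ proj  # (64, 64, 4) @ (4, 3) -> (64, 64, 3)
    return np.repeat(np.repeat(rgb, DOWNSAMPLE, axis=0), DOWNSAMPLE, axis=1)


rng = np.random.default_rng(0)
alpha_bars = make_alpha_bars()
target_latent = rng.standard_normal(LATENT_SHAPE)  # pretend "clean" latent for the toy


def oracle_noise(z, t):
    # Hypothetical oracle that knows target_latent; it returns exactly the noise in z.
    a_t = alpha_bars[t]
    return (z - np.sqrt(a_t) * target_latent) / np.sqrt(1.0 - a_t)


z_T = rng.standard_normal(LATENT_SHAPE)  # start from pure Gaussian noise in latent space
z_0 = ddim_sample(z_T, oracle_noise, alpha_bars)
image = toy_decoder(z_0, rng.standard_normal((4, 3)))
print(image.shape)  # (512, 512, 3)
```

Every denoising step operates on the 64 x 64 x 4 latent rather than the 512 x 512 x 3 image; only the final decode touches pixel resolution, which is the efficiency argument behind latent diffusion.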

Unlike DALL-E and Midjourney, which have measures in place to prevent the creation of graphic or pornographic images, Stable Diffusion is open source, so users can get around any restrictions built into it. That open-source status also distinguishes it from its competitors: Stability AI has made the details of its AI model, including the model’s weights, available for anybody to inspect and use.

The model was trained on Stability AI’s Ezra-1 AI ultracluster of 4,000 A100 GPUs. The company has been testing the model extensively, with more than 10,000 beta testers generating 1.7 million images every day.

The core model was trained on LAION-Aesthetics, a subset of LAION-5B (LAION stands for Large-scale Artificial Intelligence Open Network). LAION-Aesthetics was built with a new CLIP-based model that filtered LAION-5B according to how “beautiful” an image was, based on ratings from Stable Diffusion’s alpha testers. Stable Diffusion can quickly produce 512 x 512-pixel images on consumer GPUs with less than 10 GB of VRAM, which opens the tool to researchers and, eventually, the general public in a wide range of settings.
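Some rough arithmetic helps explain why a 512 x 512 model fits on a consumer GPU. The sketch below assumes Stable Diffusion v1’s 8x spatial downsampling with 4 latent channels, and uses the parameter counts published in the CompVis Stable Diffusion README (an 860M-parameter U-Net and a 123M-parameter CLIP text encoder). Storing weights in 16-bit floats is an assumption, and the total leaves out the autoencoder and the activation memory used during sampling, so real VRAM usage is higher.

```python
# Pixel space: a 512x512 RGB image.
H = W = 512
RGB_CHANNELS = 3
# Latent space in Stable Diffusion v1: 8x spatial downsampling, 4 channels.
DOWNSAMPLE = 8
LATENT_CHANNELS = 4

pixel_values = H * W * RGB_CHANNELS
latent_values = (H // DOWNSAMPLE) * (W // DOWNSAMPLE) * LATENT_CHANNELS
compression = pixel_values / latent_values  # how many fewer values the denoiser handles

# Parameter counts from the Stable Diffusion v1 README (U-Net + CLIP text encoder only).
UNET_PARAMS = 860_000_000
TEXT_ENCODER_PARAMS = 123_000_000
BYTES_PER_FP16 = 2  # assumption: weights stored in half precision
weight_gb = (UNET_PARAMS + TEXT_ENCODER_PARAMS) * BYTES_PER_FP16 / 1e9

print(pixel_values, latent_values, compression)  # 786432 16384 48.0
print(f"{weight_gb:.2f} GB")  # 1.97 GB
```

The denoising network works on 48 times fewer values than a pixel-space model at the same output size, and the main weights take up roughly 2 GB in half precision, leaving headroom on a card with under 10 GB of VRAM.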


On the downside, the dataset used to train Stable Diffusion included both private medical information and copyrighted works. Fearing copyright infringement lawsuits, Getty Images banned uploads of content created by systems like Stable Diffusion. U.S. Representative Anna G. Eshoo also recently criticized Stability AI in a letter to the National Security Advisor and the Office of Science and Technology Policy (OSTP), urging them to address the release of “unsafe AI models” that do not filter the content created on their platforms.

DreamStudio is another of Stability AI’s consumer-facing products: a new suite of generative media tools designed to give everyone the power of infinite imagination and the simplicity of visual expression, combining natural language processing with novel input controls for rapid creation. Stability AI also funds an organization called Harmonai. In late September, Harmonai unveiled Dance Diffusion, an algorithm and collection of tools that can generate music clips after learning from hundreds of hours of existing music.