On Tuesday, the Linux Foundation announced the Open Voice Network, an open-source association dedicated to advancing open standards that support the adoption of AI-enabled voice assistance systems.

The Linux Foundation aims to build sustainable ecosystems around open-source projects to speed up technological development and commercial adoption. The Open Voice Network’s role is to prioritize trust and interoperability in voice-based applications and to foster AI-enabled voice assistance systems in the digital future.

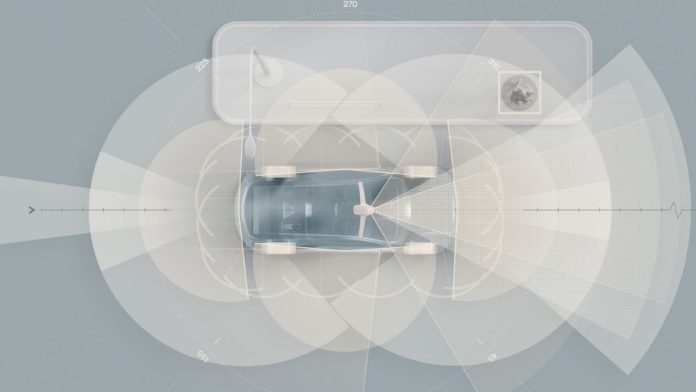

AI-enabled voice assistance systems are primarily based on automatic speech recognition, advanced dialog management, natural language processing, and machine learning. The Open Voice Network has set a few guidelines to help keep these systems secure.


“Having an open network through Open Voice Network for education and global standards is the only way to keep pace with the rate of innovation and demand for influencer marketing,” said Rolf Schumann, chief digital officer at Schwarz Gruppe, one of the founding members.

The Open Voice Network has set three main guidelines to ensure a secure voice assistance system. The first is to research and recommend global standards that support user choice, inclusivity, and trust. The second is to identify and share conversational AI practices that are specific to and aligned with individual industries, serving as a source of insight and value for voice assistance systems. The third is for enterprises to work with existing industry associations on relevant regulatory and legislative issues.