Have you seen the video of Barack Obama calling Donald Trump a ‘complete dipshit’? It wasn’t real. It was a deepfake, a video made with technology that manipulates existing footage or images by replacing a person’s face and voice with someone else’s. Recent advances in AI have led to a rapid increase in the number of deepfake apps and videos available to the public.

Deepfake technology uses deep learning, a branch of AI, to create images or videos that look real but are fake. Producing them typically involves training autoencoders or generative adversarial networks (GANs).
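For readers curious about what “adversarial” means here: a GAN pits two networks against each other. A generator produces fake images, and a discriminator scores each image with the probability that it is real. The minimal Python sketch below is not code from any app in this list. It only computes the two competing losses using the standard binary cross-entropy formulation (with the common “non-saturating” generator loss). The function names are illustrative, and a real system would compute these losses over neural-network outputs in a framework such as PyTorch or TensorFlow and use them to update the networks’ weights.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy loss for the discriminator.

    d_real: discriminator scores (probabilities in (0, 1)) on real images.
    d_fake: discriminator scores on generated (fake) images.
    The loss is low when real images score near 1 and fakes score near 0.
    """
    real_term = sum(-math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss.

    The loss is low when the discriminator scores the generator's fakes
    near 1, i.e. when it mistakes them for real images.
    """
    return sum(-math.log(p) for p in d_fake) / len(d_fake)


# A discriminator that easily spots the fakes: its loss is low,
# while the generator's loss is high.
print(discriminator_loss([0.9, 0.8], [0.1, 0.2]))
print(generator_loss([0.1, 0.2]))
```

Training alternates between lowering the discriminator’s loss and lowering the generator’s loss; the better the generator gets at fooling the discriminator, the more realistic its output tends to become.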

Although creating a realistic deepfake video on phones and ordinary computers is challenging, many deepfake apps and websites let you create hilarious images or videos that don’t violate anyone’s privacy.

Here are the 10 best deepfake apps and websites you can experiment with for creating funny videos and images:

1. Reface

Reface is one of the most popular deepfake apps on Android. It lets users superimpose their face onto photos, videos, and GIFs, and its built-in system produces highly convincing results, realistic enough that your friends and family may not realize they’re fake. You can share your creations on social media and see how people react.

It was nominated for the Google Play Users’ Choice Award in 2020. Reface also has a gender swap feature that shows how you might look as another gender.

2. ZAO

ZAO is a Chinese deepfake app that went viral for its face-swapping feature. Like Snapchat’s face swap filter, ZAO lets two people switch faces, which can produce hilarious results. You can also use ZAO to insert your face into scenes from your favorite TV shows and movies. This free app needs only one photo to let you try out thousands of trendy outfits, hairstyles, and makeup looks.

The app quickly became controversial over privacy, sparking a massive backlash online. Its privacy policy gave the creators rights to the images users uploaded, which meant that once you added your face to the app, you could no longer stop the creators from using it for whatever purpose they liked. ZAO has since responded to the concerns and revised its original privacy policy.

3. Faceswap

Faceswap is a top deepfake app for creating funny, eye-catching photos that will surprise your friends and acquaintances. Besides delivering accurate results, it offers an easy-to-use interface with numerous functions. Its most distinctive feature is the ability to match animals’ faces, so you can swap your face with a brave tiger’s or a cute kitten’s.

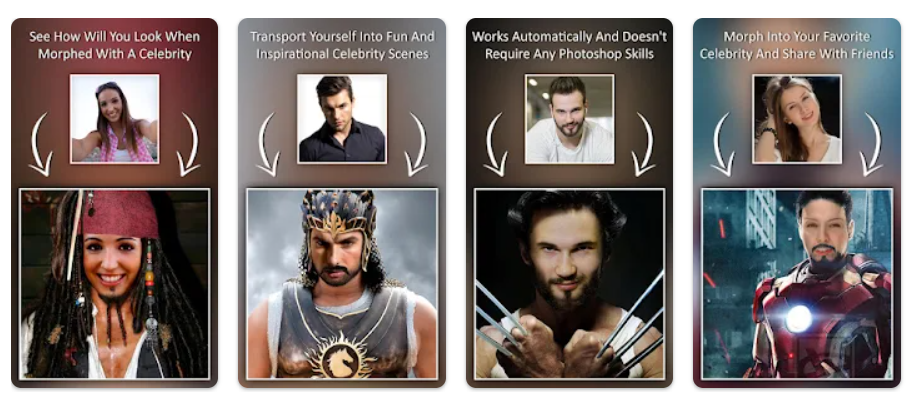

4. Celebrity Face Morph

If you’re a huge fan of Wolverine, Jack Sparrow, Superman, or any other famous figure, Celebrity Face Morph is the perfect deepfake app for you. You can choose a character from an enormous list and morph a photo of your face into theirs. The list includes sports stars, movie stars, animals, and politicians. The app uses AI neural networks and image recognition technology to morph faces instantly, with no Photoshop skills required.

5. Collage.Click Face Switch

Collage.Click Face Switch lets users swap faces and claims to be the best face swap app for smartphones. Typical face swap apps cut a circle around your facial features and paste it into another image, GIF, or video, whereas Collage.Click copies your facial features and places them naturally into the target GIF, image, or video. The app is available for free on both Android and iOS.

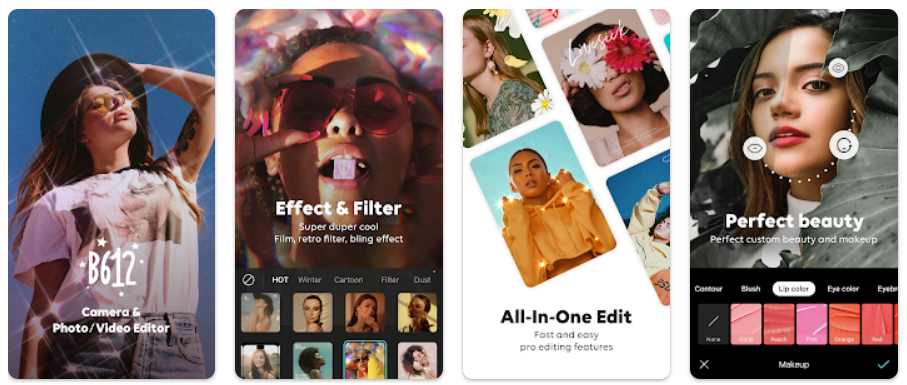

6. B612

B612 is a popular photo editor with a wide range of editing functions and filters, but it also lets users create deepfake images. Just take a picture with your camera, and the app will combine your face with a selected photo. Because it’s a photo editing app, you can then edit the result with dozens of different effects and filters.

7. Wombo

Wombo is an AI-powered lip-sync deepfake app that turns any face in a still image into a singing face. You pick a song from its list, and the face in your photo sings it. The resulting deepfake video looks animated rather than realistic. After you download and launch Wombo, add a selfie and pick a song; the app will work its magic and turn your selfie into a quirky singing video.

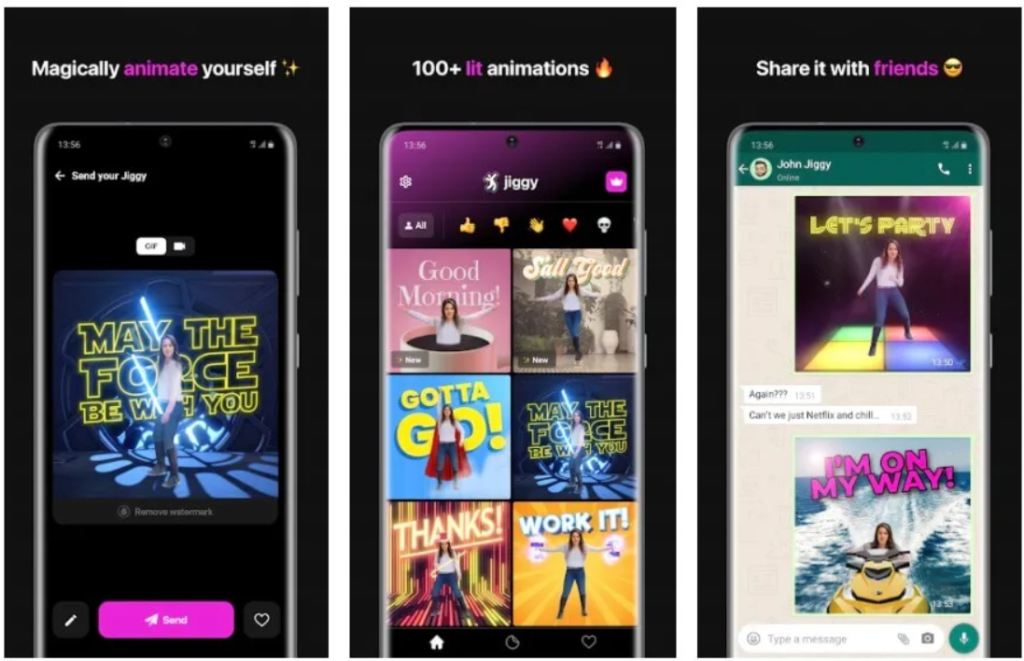

8. Jiggy

Jiggy is another deepfake maker for creating fake but fun content for social media. With Jiggy, you can animate still images with stickers, GIFs, or videos, and use the bizarre results to prank your friends or family. Besides full-body swaps, it offers over 100 GIFs and unique dances to animate your photos and share with your friends.

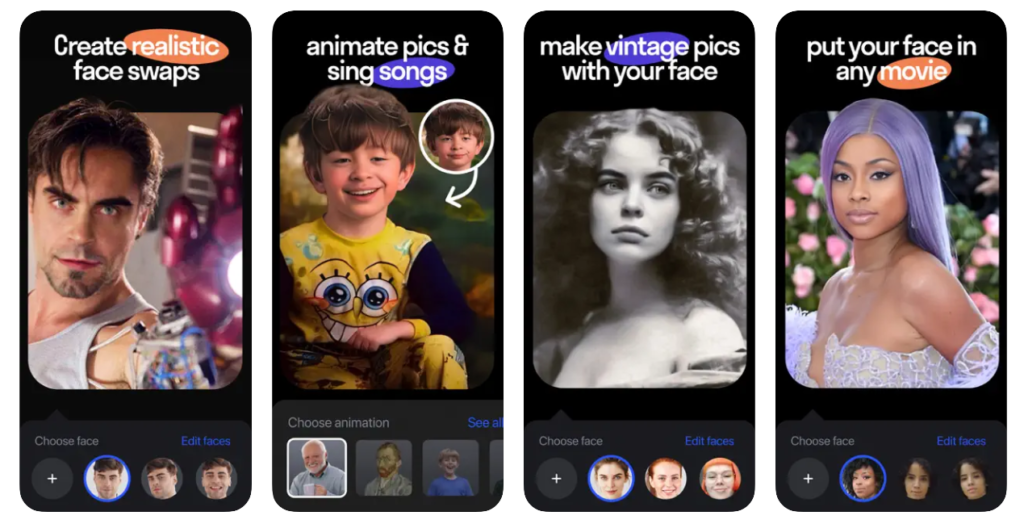

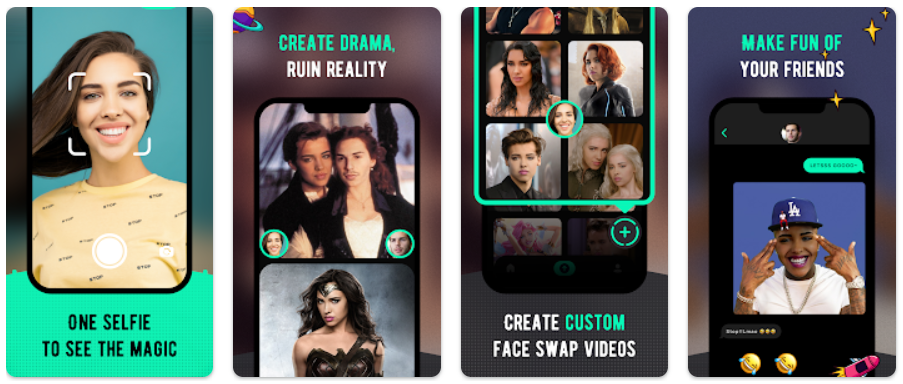

9. FaceMagic

FaceMagic is a deepfake video maker that lets you change faces in any video, image, or GIF in a few simple steps, producing funny results in a matter of seconds. Just upload a photo from your gallery, and the AI-powered app will do its magic. Besides swapping faces, you can make the photo dance, morph your face or a friend’s face into a celebrity’s, and swap genders.

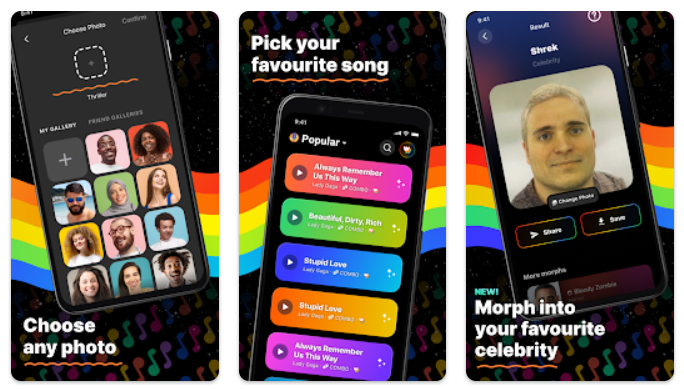

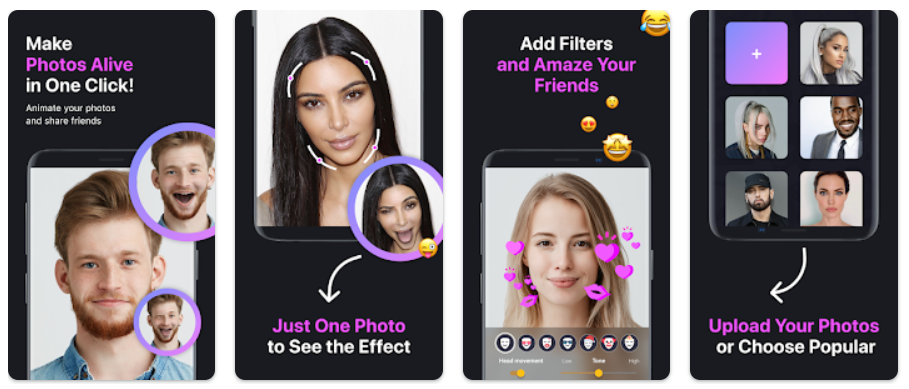

10. Anyface

Anyface is a deepfake generator that lets you surprise your friends with an unusual look. It animates your photos and brings them to life: just choose a selfie or photo, and Anyface will work its magic. Among its fun features, you can make photos talk using pre-loaded phrases and improve them with beautiful filters. Its straightforward interface and easy-to-use tools mean you don’t need any editing skills.