Paraphrasing generators are advanced AI tools that help many writers. So, how does one go about using them?

Paraphrasing tools are a lifesaver for many writers. Removing plagiarism, altering content tone, and rewriting content within seconds are all common reasons for using them. That's why their use has increased significantly in recent years.

When, by some estimates, around 80% of college students admit that plagiarizing content is part of their routine, such tools become even more important. This is why their use is now being taught at academic and professional levels.

Today, we'll look at what paraphrasing tools are and why you should use them. But, most importantly, we'll walk through how to use an AI-based paraphraser. So, let's begin:

Understanding A Paraphrase Generator: Defining Traits

A paraphrase generator is an AI-powered tool used for a range of purposes. In essence, it is software that helps with the writing process: copywriters use it to rephrase content, and students use it to rewrite essays.

These tools are designed to assist writers and make the writing process more manageable. They are also among the most popular types of AI-based writing tools, and some can even generate content ideas at scale.

But their primary use is to rewrite or revamp content when needed. To sum up, here are the common features paraphrasers provide:

- Rewriting content quickly

- Finding alternative synonyms and phrases to describe the same ideas

- Removing plagiarism by changing the content

- Offering various content tones

- Changing up to hundreds of words at a time

These are the features most paraphrasers offer. Because a paraphrasing tool runs on advanced AI algorithms, speedy rewriting is one of its chief traits.

Reasons To Use A Paraphrase Tool As An Assistant

A paraphrasing tool is software that helps people rewrite content without plagiarizing. It does this by replacing parts of the original text with synonyms, alternative phrases, or a combination of both.
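To make the idea of synonym replacement concrete, here is a minimal toy sketch in Python. It assumes a small, hand-written synonym table (`SYNONYMS`) and a hypothetical helper `naive_paraphrase`; neither comes from any real product, and modern AI paraphrasers rely on language models rather than a fixed lookup table.

```python
import re

# Hypothetical, hand-written synonym table for illustration only.
SYNONYMS = {
    "quick": "fast",
    "help": "assist",
    "rewrite": "rephrase",
    "content": "text",
}

def naive_paraphrase(text: str) -> str:
    """Swap whole words found in SYNONYMS, keeping a leading capital letter."""
    def swap(match: re.Match) -> str:
        word = match.group(0)
        replacement = SYNONYMS.get(word.lower())
        if replacement is None:
            return word  # no synonym known: keep the original word
        return replacement.capitalize() if word[0].isupper() else replacement
    # Match runs of letters so punctuation and spacing stay untouched.
    return re.sub(r"[A-Za-z]+", swap, text)

print(naive_paraphrase("Quick tools help writers rewrite content."))
# prints: Fast tools assist writers rephrase text.
```

Note that "assist writers rephrase" is ungrammatical: blind word-for-word swaps produce awkward phrasing, which is one reason proofreading the output still matters.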

The goal is for the rewritten content to convey the same meaning as the original while being different enough that it won't get flagged for plagiarism. To help you understand, here are three main reasons to use AI paraphrasing tools as an assistant:

- Paraphrasing generators are a quick and easy way to rephrase content and alter its tone

- Many paraphrasing tools also help with plagiarism detection, for example by letting you compare the paraphrase with the original text to check that it is not a near-exact copy

- Paraphrasing tools can help you quickly rewrite content without worrying about plagiarism and tone
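The "compare with the original" check can be illustrated with Python's standard-library `difflib`. This is a minimal sketch, not how any particular plagiarism checker works: real checkers compare text against large databases of published material, whereas this only measures how closely a rewrite matches your own source. The `similarity` helper and the 0.8 threshold are assumptions chosen for illustration.

```python
from difflib import SequenceMatcher

def similarity(original: str, rewritten: str) -> float:
    """Return a 0.0-1.0 ratio of how much of the word sequence the two texts share."""
    return SequenceMatcher(None, original.lower().split(), rewritten.lower().split()).ratio()

original = "Paraphrasing tools help writers rewrite content quickly."
rewritten = "AI rephrasers let authors rework their text in seconds."

score = similarity(original, rewritten)
# 0.8 is an arbitrary, assumed cut-off for "too close to the original".
verdict = "too close" if score > 0.8 else "sufficiently different"
print(f"similarity = {score:.2f} -> {verdict}")
```

Comparing word lists rather than raw characters keeps the score focused on reused wording instead of shared letters.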

In short, paraphrasing tools are great for people who want to rewrite their own blog posts or articles but don't have the time to do so. They are also useful for people who want to change the tone of their writing but don't know how, and who want an unbiased suggestion for how to do it.

Paraphrasing Text With The Help of Paraphrase Generator

Using a paraphrase generator is straightforward. But to help you use one the right way, we've put together a basic process that any writer can follow. So, let's get started:

Step One: Pick A Paraphrasing Tool

The first step is to pick a paraphrasing tool. But what should you look for when choosing one? Besides strong AI algorithms, it should have a few key features, such as:

- A generous word-count limit, preferably 1,000 words or more

- Support for multiple languages

- Quick rephrasing

- An easy-to-use interface

For this demonstration, we'll use the paraphrase generator from Editpad.org. It has all the key features we've just discussed, allowing us to rephrase our content quickly.

Step Two: Identify The Purpose

Now that you have a tool, you need to identify the purpose of rephrasing your content using a paraphraser. In most cases, academic or professional, the common goals include:

- Removing plagiarism

- Changing content tone

- Refreshing content, i.e., making it new/better

- Making content flow better

Whichever of these four is your purpose, keep it in mind from the start.

Step Three: Pick Content Tone (If Available)

The third step is to pick a content tone. Granted, not many tools offer this option, but some do. If yours does, use it; you may see options such as:

- Fluent

- Standard

- Creative

If not, the tool will apply its default tone, so there is no need to pick one. However, if the tool offers a choice, it's a good idea to try each one until you find the tone that matches your natural writing style.

Step Four: Revamp/Rewrite Content

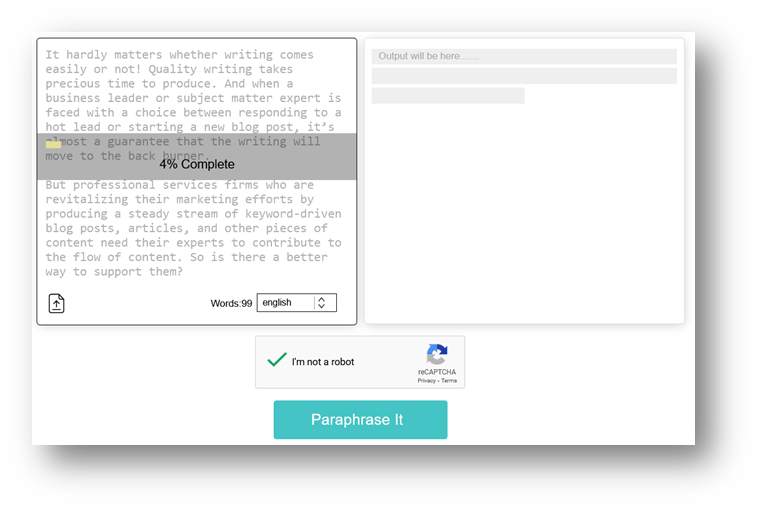

The fourth and main step in paraphrasing text with an AI paraphrasing tool is to paste or upload your content into the tool. Once you do, click the paraphrase button. Note that some tools may require a captcha check before they start rewriting.

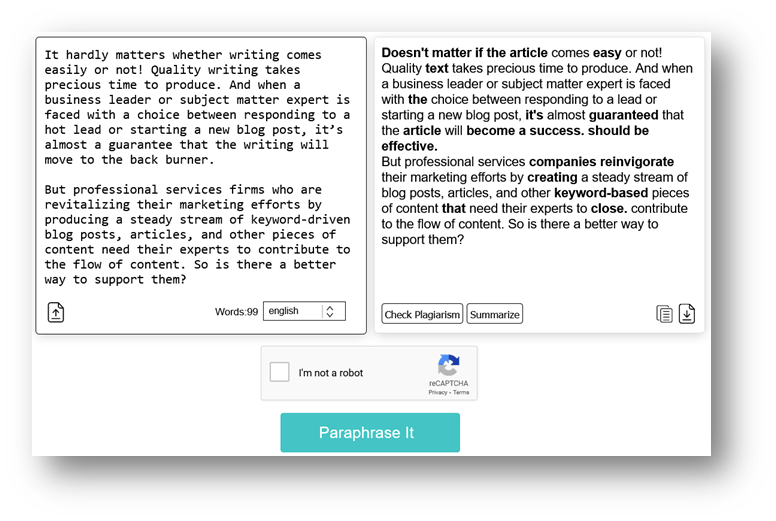

Once the tool begins rewriting, it shows a progress bar, as seen here. When it has finished, the tool displays the rewritten text.

The content marked in bold is the changed content. You can try to rewrite it once more by copying the rephrased content and pasting it inside the editor. Moreover, you can try additional options like summarizing the rewritten text or checking it for plagiarism.

Step Five: Proofread

The final step is to proofread your content. Why proofread if a paraphraser can rewrite content effectively? Because proofreading lets you:

- Match your original content tone

- Remove any unnatural-sounding phrases or words

- Catch any rare grammatical errors

- Change or remove text elements you don't need

Therefore, it’s imperative that you proofread, regardless of how well a paraphrasing tool rewrites your text.

Conclusion

This is a process any writer can follow, whatever their setting. For students and professional writers alike, it can help them paraphrase text with an AI tool quickly and effectively. So, identify your purpose and paraphrase away.