2142 is a community-driven sci-fi transmedia project created by a team of innovative minds from Belgrade (aka the city that never sleeps) in Serbia. The team of seven, Dusan Zica, Mladen Merdovic, Nenad Krstic, Rade Joksimovic, Darjan Mikicic, Vladimir Pajic, and Dragan Jovanovic, all have a background in the video game industry. The 2142 project started in May 2022 as the world’s first NFT (non-fungible token) webcomic book, narrating a super cool sci-fi adventure. To dig into this brilliant idea of an NFT comic and the fictional world the 2142 team is building, Analytics Drift spoke with the CEO and co-founder of 2142, Dusan Zica.

What is 2142?

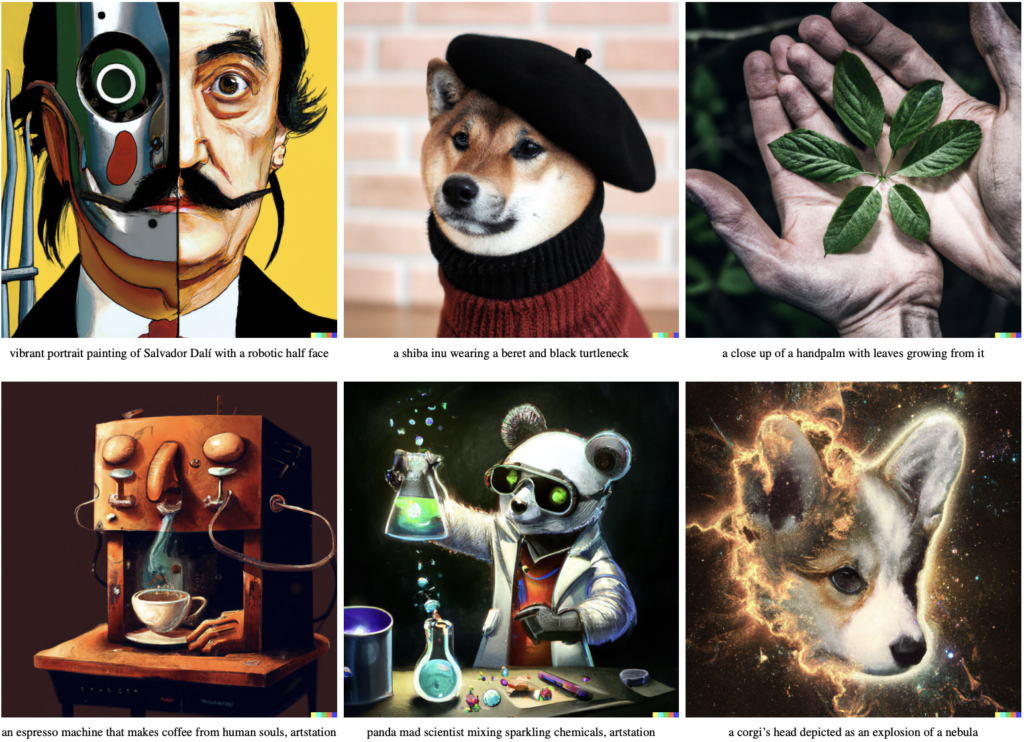

2142 is an NFT project and comic book that might change the world’s perspective on NFTs. According to a report from nonfungible.com, NFTs attracted a vast following, with marketplace trading reaching as high as $17.6 billion in 2021. They are bought and sold through crypto platforms such as Gemini, Binance, MetaMask, OpenSea, and Coinbase. NFTs are unique cryptographic tokens that exist on a blockchain, cannot be replicated, and can represent real-world items like artwork and real estate. Tokenizing assets as NFTs makes buying, selling, and trading more efficient and reduces the probability of fraud, all while looking super cool.

For a while, collectors swamped the NFT marketplace with funky monkey pictures. While the monkeys might sway other people, Dusan has a different opinion: “I hate today’s NFTs. I hate stupid monkeys with golden cards in their mouth because I truly believe it’s just a matter of hype. It doesn’t have any aesthetical, philosophical, or artistic value, and they don’t make sense to me.” Aiming to give NFTs value and meaning, the team started building an NFT comic book named “2142” and created its own NFT marketplace. The comic’s story arc revolves around the idea that technology and spirituality can go together without manipulating each other. Additionally, the 2142 community has around 1,500 people who talk about combining science fiction with mythology and cosmic myths based on real astronomical theories.

The comic opens in the aftermath of the mining of the last Bitcoin in 2140. By the year 2142 AD, planet Earth has turned into a polluted dystopian world. The world is left high and dry, controlled by corporations and brand sects in both the real world and the Metaverse, when a long-forgotten secret emerges from legend to give hope.

Here is the synopsis of the comic:

As the world lies in ruins, Metaverse is the opium of the masses and the last Bitcoin has been mined to shake the foundations of a known world, three troubled humans and one strained AI get trapped into hideous astral conflict at the end of the great cosmic cycle.

Follow them on an epic journey throughout their spiritual awakening in the physical world, virtual reality, and astral planes. While, at the same time, the ancient deities start to awaken, alien demons ravage the Earth, and AI fights AI for the decentralized liberation of all conscious entities.

Another myth becomes a reality as the mysterious Satoshi’s wallet activates for the first time to purge the planet from mega-corps and Metaverse brand sects.

Deep beneath the mortal coil of our suffering planet, the chant is murmured: “Mother Earth, it is time…”

The world of 2142 is built by borrowing existing things and adding fictional elements. Dusan added, “We decided to use Satoshi Nakamoto, the mysterious maker of bitcoin, as one of our main characters who is going to be practically like an artificial intelligence god in our comic book, tabletop, and RPG.” Dusan also confirmed that 2142 is planning a video game, which is in the pre-production phase and will soon be announced on the website.

How is 2142 an investment?

Apart from the great fiction story one gets to read, buyers of the comic book get NFTs too. There are 21 NFTs in a bundle priced at $30, which is not much for NFTs and comic books. Additionally, collecting the comic book will give you doubles, meaning duplicate pictures, panels, pages, and covers that can be sold. As 2142 has its own marketplace, trading comic books and NFTs is easier, which helps buyers recoup their investments. “We are not targeting those NFT guys. We’re targeting comic book fans, and we are targeting science fiction fans, and we are targeting video game players and gamers,” Dusan said about their target audience.

The NFT market has crashed this year. The hype around NFTs has faded over the last couple of months, and barely any NFTs are being sold. In this phase, 2142 has built a AAA high-quality teaser trailer and is preparing to release an animatic trailer. Dusan said, “It’s the worst and the best time to do the NFTs. It’s the worst time if you want to sell them because people are not buying the NFTs right now. It’s not even close to what it was one year ago. And the best time to do NFTs because now, if you make a good NFT project, a long-term project, you can become a leader in a year or two or five.”