Microsoft’s research project Alexandria can parse documents using unsupervised machine learning. It can scale to over a billion records and create templates from both structured and unstructured data.

The project was launched in 2014 at Microsoft’s Cambridge research division, with the aim of automatically constructing an entire knowledge base from a set of documents. Alexandria’s technology powers the recently announced Microsoft Viva Topics, which automatically organizes large amounts of enterprise data. Alexandria identifies topics and metadata, and uses AI to parse the content of documents in datasets.

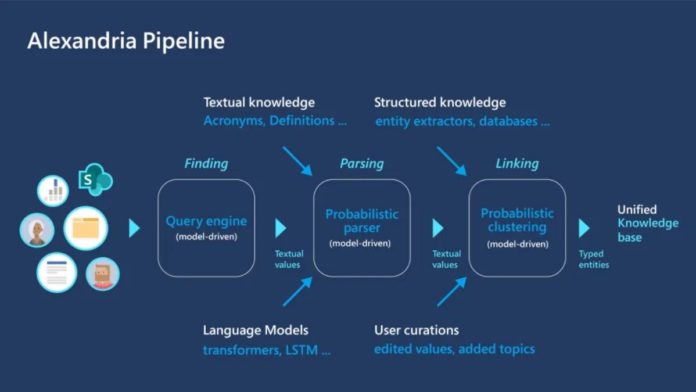

Alexandria first runs a query engine on the required document’s keyphrases or title. The query engine searches millions of records, emails, and ad contexts related to the document’s title and extracts snippets from them. All the collected snippets are then parsed by a probabilistic parser, which identifies the parts of each snippet that correspond to specific property values. The model identifies a set of patterns, or templates, such as “project {name} will be released on {date}”, then matches each template against the text to determine which parts of the text correspond to which property values, ready for linking.
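The template-matching step can be illustrated with a small sketch. This is not Alexandria’s implementation: the real system learns its templates without supervision and matches them probabilistically, whereas this example assumes a hand-written template and a plain regular expression. The function names `template_to_regex` and `extract` and the sample snippet are hypothetical.

```python
import re


def template_to_regex(template: str) -> re.Pattern:
    """Compile a template like 'project {name} will be released on {date}'
    into a regex with one named group per {placeholder}."""
    # Splitting on a capturing group alternates literal text (even indexes)
    # and placeholder names (odd indexes).
    parts = re.split(r"\{(\w+)\}", template)
    pattern = ""
    for i, part in enumerate(parts):
        if i % 2 == 0:
            pattern += re.escape(part)        # literal text, matched as-is
        else:
            pattern += f"(?P<{part}>.+?)"     # placeholder, captured lazily
    # Allow optional sentence-ending punctuation, then require end of snippet.
    return re.compile(pattern + r"[.!?]?\s*$", re.IGNORECASE)


def extract(template: str, snippet: str):
    """Return {property: value} if the snippet fits the template, else None."""
    match = template_to_regex(template).search(snippet)
    return match.groupdict() if match else None


# Hypothetical snippet, made up for illustration.
TEMPLATE = "project {name} will be released on {date}"
print(extract(TEMPLATE, "Project Foo will be released on 1 March."))
```

A deterministic regex either matches or it does not; a probabilistic parser, by contrast, can weigh several competing templates and report how confident it is in each extracted value.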


In linking, all duplicate or overlapping entities are identified and merged using a clustering process, again without supervision. According to Alexandria’s lead John Winn, Alexandria merges hundreds or thousands of items to create each entry, along with a detailed description of the extracted entity.
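As a rough illustration of linking, the sketch below groups extracted records that refer to the same entity and pools their property values. It assumes a deliberately naive rule, treating two records as the same entity when their names are equal after normalization; the article does not describe the clustering method Alexandria actually uses. The names `normalize` and `link_entities` and the sample records are hypothetical.

```python
import re
from collections import defaultdict


def normalize(name: str) -> str:
    """Lowercase a name and collapse punctuation and whitespace."""
    return re.sub(r"[^a-z0-9]+", " ", name.lower()).strip()


def link_entities(records):
    """Merge records with the same normalized name into one entry,
    collecting every distinct value seen for each property."""
    clusters = defaultdict(lambda: defaultdict(set))
    for record in records:
        key = normalize(record["name"])
        for prop, value in record.items():
            clusters[key][prop].add(value)
    # Convert the sets to sorted lists so the result is stable and printable.
    return {
        key: {prop: sorted(values) for prop, values in props.items()}
        for key, props in clusters.items()
    }


# Hypothetical records, as they might come out of the parsing step.
records = [
    {"name": "Project Foo", "date": "1 March"},
    {"name": "project foo.", "owner": "Team A"},
    {"name": "Project Bar", "date": "2 April"},
]
linked = link_entities(records)
print(linked)
```

Exact matching on a normalized name misses aliases and misspellings; a production system would need a similarity-based or probabilistic clustering step in its place.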

Alexandria can also help with human errors, such as incorrect entries or misplaced information. During the linking process, the model can also analyze knowledge from sources other than the one where an entity was originally mined. Irrespective of the source, all the knowledge is linked together into a single unified knowledge base. During this cross-checking process, human entry errors can be caught and corrected.
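One simple way to picture this cross-checking is a majority vote over the values that different sources report for the same property. The sketch below assumes exactly that and nothing more: the article does not say how Alexandria weighs conflicting sources, and the function name `resolve_property` and the sample values are hypothetical.

```python
from collections import Counter


def resolve_property(values):
    """Pick the most frequently reported value and list the values that
    disagree with it as candidates for review."""
    if not values:
        raise ValueError("need at least one reported value")
    counts = Counter(values)
    top_value, _ = counts.most_common(1)[0]
    outliers = [value for value in counts if value != top_value]
    return top_value, outliers


# Hypothetical release dates for one entity, gathered from three sources.
reported = ["1 March", "1 March", "1 May"]
value, suspects = resolve_property(reported)
print(value, suspects)
```

A plain vote treats every source as equally reliable, so it only flags likely errors; deciding which value is actually correct may still need source-level trust scores or human review.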

In the coming years, the Alexandria team will focus on creating a schema tailored to the needs of each organization, one that would allow employees to find all events of a specific type along with the place and date of their occurrence. The team will also work towards a knowledge base that understands what a user is trying to achieve and automatically provides relevant information to help them achieve it.