A microresonator-based frequency comb, or microcomb, is a photonic device that generates many evenly spaced optical frequencies in a tiny cavity known as a microresonator. Because these “colors” are uniformly spaced, microcombs can be used to measure and generate frequencies with extreme precision. Microcombs have a wide range of applications, since most measurements can be linked to frequency. A single photonic chip can replace tens of lasers, thereby decreasing power consumption in optical communication channels. Microcombs are also used to calibrate spectrographs at astronomical observatories and might help discover exoplanets.
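Why uniform spacing makes a comb useful as a ruler can be seen from the standard frequency-comb relation. The symbols here are the conventional ones from comb metrology, not quantities defined in this article:

$$
f_n = f_0 + n\,f_{\mathrm{rep}}, \qquad n \in \mathbb{Z}
$$

Here $f_{\mathrm{rep}}$ is the line spacing (for a microcomb, set by the microresonator's free spectral range) and $f_0$ is an offset frequency. Once these two numbers are known, every line $f_n$ is known, so an unknown optical frequency can be located by identifying the nearest comb line and measuring the beat note between the two.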

However, quantum microcomb architectures built on probabilistic quantum states are not scalable without quantum memory. Xu Yi is an assistant professor of electrical and computer engineering at the University of Virginia School of Engineering and Applied Science. In recent research, he and his group demonstrated a deterministic, two-mode-squeezed quantum frequency comb in a silica microresonator on a silicon chip.

Nature Communications published Xu Yi’s paper, “A squeezed quantum microcomb on a chip.” The paper’s co-first authors are Mandana Jahanbozorgi, a Ph.D. student in electrical and computer engineering, and Zijiao Yang, a Ph.D. student in physics. Hansuek Lee, assistant professor at the Korea Advanced Institute of Science and Technology, and Olivier Pfister, professor of quantum optics and quantum information at UVA, also contributed to the research.


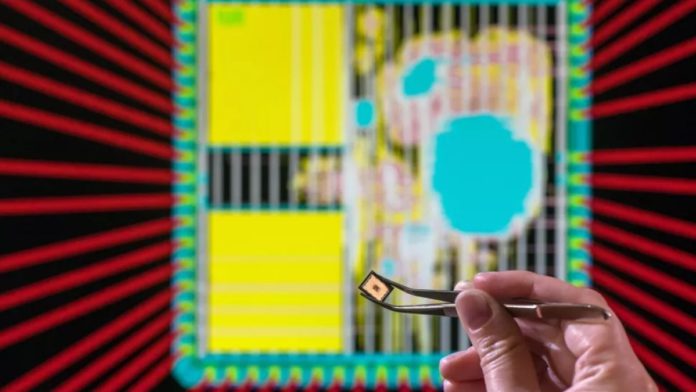

Xu Yi’s research group has created a scalable quantum computing platform on a photonic chip the size of a penny. The photonics-based squeezed quantum microcomb can drastically reduce the number of devices needed to achieve a quantum speedup.

“The future of the field is integrated quantum optics,” Pfister said. “Only by transferring quantum optics experiments from protected optics labs to field-compatible photonic chips will bona fide quantum technology be able to see the light of day. We are extremely fortunate to have been able to attract to UVA a world expert in quantum photonics such as Xu Yi, and I’m very excited by the perspectives these new results open to us.”

Yi’s photonics-based squeezed quantum microcomb is attractive because each light wave in the comb can potentially serve as a quantum unit. He carried the multiplexing concepts of fiber-optic communications into the quantum realm. Yi’s research group created a ring-shaped, millimeter-sized quantum source that efficiently converts photons at a single wavelength into photons at multiple wavelengths. His team verified the generation of 40 qumodes (the fundamental information-carrying units of continuous-variable, or CV, quantum computers) in the form of 20 two-mode squeezed comb pairs from a single microresonator on a chip, demonstrating that optical multiplexing of quantum modes can work in integrated photonic platforms. The number of measured qumodes was limited to 40 by the spectral span of the local oscillators. “We estimate that when we optimize the system, we can generate thousands of qumodes from a single device,” Yi said.
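Why 20 pairs correspond to 40 qumodes can be illustrated with simple frequency bookkeeping. In a Kerr-nonlinear microresonator such as one made of silica, four-wave mixing converts two pump photons into a signal photon and an idler photon on resonator modes placed symmetrically about the pump, and each signal/idler pair forms one two-mode squeezed pair. The sketch below is a toy illustration, not the authors' analysis: the pump frequency and the 100 GHz mode spacing are hypothetical values, and resonator dispersion is ignored.

```python
# Toy sketch: how N two-mode squeezed pairs map onto 2N qumodes.
# ASSUMPTIONS (hypothetical, not from the paper): the pump frequency and
# mode spacing below are illustrative; dispersion is ignored, so the
# resonator modes sit exactly at pump +/- n * spacing.

PUMP_THZ = 193.4   # hypothetical CW pump frequency (~1550 nm)
FSR_THZ = 0.1      # hypothetical 100 GHz mode spacing
N_PAIRS = 20       # number of two-mode squeezed pairs reported in the article


def qumode_pairs(pump: float, fsr: float, n_pairs: int) -> list[tuple[float, float]]:
    """Return (signal, idler) frequencies placed symmetrically about the pump."""
    return [(pump + n * fsr, pump - n * fsr) for n in range(1, n_pairs + 1)]


pairs = qumode_pairs(PUMP_THZ, FSR_THZ, N_PAIRS)
# Every frequency in every pair is one qumode.
qumodes = [f for pair in pairs for f in pair]

# Energy conservation in four-wave mixing: two pump photons in,
# one signal and one idler photon out, so f_signal + f_idler = 2 * f_pump.
for signal, idler in pairs:
    assert abs((signal + idler) - 2 * PUMP_THZ) < 1e-9

print(len(pairs), "pairs ->", len(qumodes), "qumodes")  # prints: 20 pairs -> 40 qumodes
for n, (s, i) in enumerate(pairs[:3], start=1):
    print(f"n={n}: {s:.1f} THz <-> {i:.1f} THz")
```

Extending the local oscillators' span would simply raise the number of pairs that can be measured, which is the sense in which a single device could, in principle, host many more qumodes.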

The figure above depicts the experimental setup of Yi’s team. They used a continuous-wave (CW) laser to drive both the squeezed microcomb and the local oscillators. All the experimental data are publicly available.

Yi’s photonics-based system offers two benefits that open a path toward quantum computing under real-world conditions. First, unlike quantum computing platforms that require cryogenic temperatures to cool superconducting electronic circuits, photonic integrated chips can run at room temperature, because optical photons are essentially unaffected by room-temperature thermal noise. Second, Hansuek Lee fabricated the microresonator on a silicon chip, which implies that the quantum source can be mass-produced.
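A back-of-envelope comparison shows why temperature matters so differently for optical and superconducting platforms: a mode's thermal noise is set by the ratio of photon energy hν to thermal energy k_BT. The sketch assumes illustrative numbers that do not come from the article: 1550 nm telecom light, a 5 GHz microwave mode typical of superconducting qubits, and a 20 mK cryostat.

```python
# Back-of-envelope sketch: thermal photon occupation of a single mode.
# Assumed, illustrative values (not from the article): 1550 nm light,
# a 5 GHz microwave mode, room temperature 300 K, cryostat at 20 mK.
import math

H = 6.62607015e-34   # Planck constant, J*s (exact SI value)
K_B = 1.380649e-23   # Boltzmann constant, J/K (exact SI value)
C = 299_792_458.0    # speed of light in vacuum, m/s


def energy_ratio(freq_hz: float, temp_k: float) -> float:
    """Photon energy over thermal energy, h*nu / (k_B*T)."""
    return H * freq_hz / (K_B * temp_k)


def mean_thermal_photons(freq_hz: float, temp_k: float) -> float:
    """Bose-Einstein mean photon number of one mode in thermal equilibrium."""
    return 1.0 / math.expm1(energy_ratio(freq_hz, temp_k))


optical = C / 1550e-9   # ~193 THz telecom-band light (assumed)
microwave = 5e9         # typical superconducting-qubit frequency (assumed)

cases = [
    ("optical, 300 K", optical, 300.0),
    ("microwave, 300 K", microwave, 300.0),
    ("microwave, 20 mK", microwave, 0.02),
]
for label, freq, temp in cases:
    print(f"{label:>17}: h*nu/kT = {energy_ratio(freq, temp):9.3g}, "
          f"mean thermal photons = {mean_thermal_photons(freq, temp):9.3g}")
```

At room temperature the optical mode holds essentially no thermal photons, while the microwave mode holds on the order of a thousand; only near millikelvin temperatures does the microwave mode become comparably quiet, which is why superconducting circuits need cryogenic cooling.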