Online cryptocurrency trading platform Coinbase has announced that it has invested $150 million in Indian startups operating in the Web3 and crypto industries, and that it plans to hire more than 1,000 new employees in India this year.

The move is part of the company’s plan to expand its operations in India, which has the largest number of crypto owners. To date, Coinbase has invested in two Indian crypto unicorns, CoinSwitch Kuber and CoinDCX.

Coinbase’s Indian tech hub opened last year and already employs more than 300 people across the country. Adding 1,000 more employees could help Coinbase considerably accelerate the growth of the Indian crypto market.

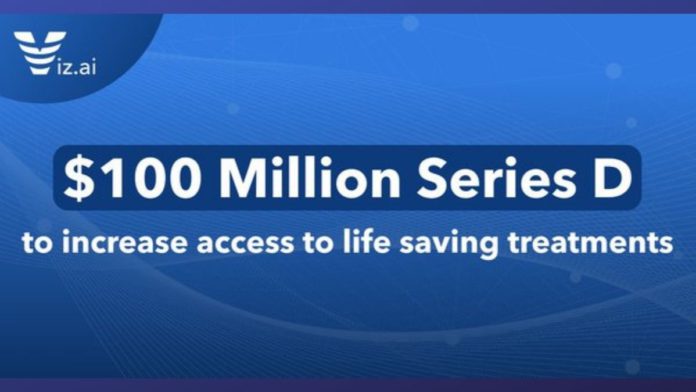


“India has built a robust identity and digital payments infrastructure and implemented it at rapid scale and speed. Combined with India’s world-class software talent, we believe that crypto and web3 technology can help accelerate India’s economic and financial inclusion goals,” Coinbase said in a blog post.

Last week, Coinbase Ventures announced a partnership with Builders Tribe to organize a pitch event for Indian Web3 startups on April 8.

The announcement comes as the Indian government has yet to decide the fate of cryptocurrencies and blockchain technology in the country.

According to recent reports, the Indian government is drafting a list of frequently asked questions (FAQs) to clarify its position on income tax and goods and services tax (GST) for virtual assets.

“FAQ on taxation of cryptocurrency and virtual digital assets is in works. Although FAQs are for information purposes and do not have legal sanctity, the law ministry’s opinion is being sought to ensure that there is no loophole,” a government official told PTI.

Adding to investors’ concerns, several digital wallets that supported crypto trading, such as MobiKwik, have suspended support for crypto transactions. Coinbase, which had launched UPI support on its crypto exchange, also took the feature down just three days after it went live.
