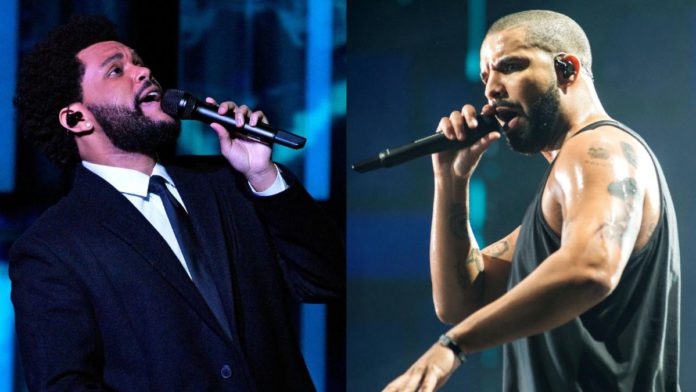

Universal Music Group (UMG) has removed from streaming sites a viral song whose AI-generated vocals falsely purported to be by Drake and the Weeknd. The record company denounced the song, Heart on My Sleeve, as “infringing content created with generative AI”.

The song was first posted on TikTok by a user with the handle Ghostwriter977, then uploaded to streaming sites under the artist name Ghostwriter. By the time it was taken down yesterday afternoon, it had 600,000 Spotify streams, 15 million TikTok views, and 275,000 YouTube views.

In a statement to Billboard magazine, UMG said the viral postings show why platforms have a fundamental moral and legal obligation to stop people from abusing their services and to protect artists from harm.

UMG declined to say whether it had formally requested takedowns from social media platforms and streaming providers. UMG also urged streaming platforms to stop AI companies from accessing the label’s songs.

The Financial Times quoted UMG as saying it had learned that some services had been trained on copyrighted music “without obtaining the required consents”. The label warned the platforms that it would not hesitate to take steps to protect the rights of its artists.