At the SIGGRAPH conference, NVIDIA introduced technology for building high-fidelity, real-time 3D models for autonomous systems and climate research.

At SIGGRAPH 2024, NVIDIA introduced fVDB, a deep-learning framework for building highly detailed, large-scale, AI-ready virtual representations of the real world. Built on OpenVDB, an industry-standard library for simulating and rendering complex volumetric data, fVDB marks a significant step forward in 3D generative modeling.

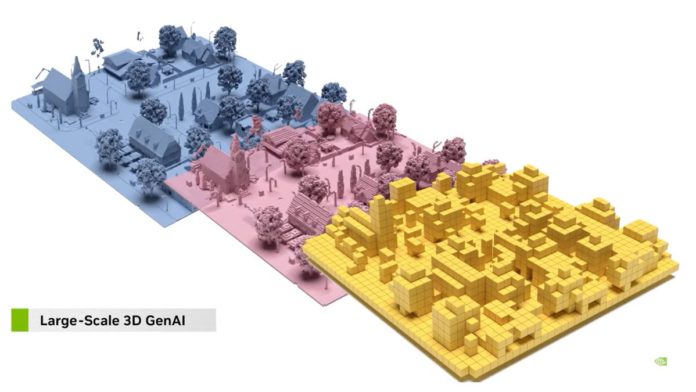

This opens new doors for industries that rely on accurate digital models to train generative physical AI for spatial intelligence. fVDB converts raw environmental data, captured with LiDAR or reconstructed with neural radiance fields (NeRFs), into large-scale virtual replicas that can be rendered in real time.

With applications spanning autonomous vehicles, urban infrastructure optimization, and disaster management, fVDB has a crucial role to play in robotics and advanced scientific research.

fVDB was developed by NVIDIA’s research team. The framework is already being used to power high-precision models of complex real-world environments for NVIDIA Research, DRIVE, and Omniverse projects.

fVDB supports high-performance deep learning applications by integrating NVIDIA-accelerated AI operators, including convolution, pooling, and meshing, into NanoVDB, a GPU-optimized data structure for 3D simulations. This enables the development of neural networks tailored to spatial intelligence tasks, such as large-scale point cloud reconstruction and 3D generative modeling.
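To make the idea of operators on sparse volumetric data more concrete, here is a minimal sketch in plain Python. It is not fVDB's or NanoVDB's API: the function names `voxelize` and `max_pool` are hypothetical, and a dictionary keyed by voxel coordinates stands in for the GPU-optimized tree structure. It only illustrates how a point cloud can be stored as occupied voxels and then coarsened by a pooling step.

```python
from collections import defaultdict


def voxelize(points, voxel_size):
    """Bin 3D points into a sparse grid: {(i, j, k): point count}.

    Only occupied voxels are stored, which is the core idea behind
    sparse volumetric formats. (Illustrative stand-in, not NanoVDB.)
    """
    grid = defaultdict(int)
    for x, y, z in points:
        key = (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))
        grid[key] += 1  # per-voxel feature: number of points inside
    return dict(grid)


def max_pool(grid, stride=2):
    """Sparse max pooling: merge each stride^3 block of voxels into one
    coarse voxel that keeps the largest feature value in the block."""
    pooled = {}
    for (i, j, k), value in grid.items():
        key = (i // stride, j // stride, k // stride)
        pooled[key] = max(value, pooled.get(key, value))
    return pooled


# Hypothetical point cloud: a small cluster near the origin plus one far point.
points = [
    (0.1, 0.2, 0.3),
    (0.4, 0.1, 0.2),
    (0.6, 0.2, 0.1),
    (1.6, 0.2, 0.1),
    (9.7, 9.9, 9.8),
]

grid = voxelize(points, voxel_size=0.5)
coarse = max_pool(grid, stride=2)

print(len(grid), "occupied voxels before pooling")
print(len(coarse), "occupied voxels after pooling")
```

Because empty space is never stored, memory use grows with the number of occupied voxels rather than with the volume of the bounding box, which is why sparse structures suit large outdoor scenes.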

Key features of fVDB include:

- Larger Scale: It handles environments at four times the spatial scale of previous frameworks.

- Faster Performance: fVDB runs 3.5 times faster than previous frameworks.

- Interoperability: The framework seamlessly handles massive real-world datasets, converting VDB files into full-sized 3D environments.

- Enhanced Functionality: With ten times more operators than previous frameworks, fVDB simplifies processes that once required multiple deep-learning libraries.

NVIDIA is committed to making fVDB accessible to a wide range of users. The framework will soon be available as NVIDIA NIM inference microservices, enabling seamless integration into OpenUSD workflows and the NVIDIA Omniverse platform.

The upcoming microservices include:

- fVDB Mesh Generation NIM: Generates digital 3D environments of the real world.

- fVDB NeRF-XL NIM: Creates large-scale NeRFs within the OpenUSD framework using Omniverse Cloud APIs.

- fVDB Physics Super-Res NIM: Performs super-resolution to create high-resolution physics simulations using OpenUSD.

These microservices will be crucial for generating AI-compatible OpenUSD geometry within the NVIDIA Omniverse platform, which is designed for industrial digitalization and generative physical AI applications.

NVIDIA’s commitment to advancing OpenVDB is evident in its continued work on this open-source library. In 2020, the company introduced NanoVDB, which added GPU support to OpenVDB, boosting performance and simplifying development. This paved the way for real-time simulation and rendering.

In 2022, NVIDIA launched NeuralVDB, which builds on NanoVDB and uses machine learning to compress the memory footprint of VDB volumes by up to 100 times. This allows developers, creators, and other users to work comfortably with extremely large datasets.
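As a rough illustration of what a reduction of up to 100 times means in practice, the short calculation below applies the best-case ratio to a hypothetical 50 GiB VDB volume. The volume size and the helper name `compressed_size_bytes` are assumptions chosen for the example, and actual savings depend on the data.

```python
GIB = 1024 ** 3  # bytes in one gibibyte


def compressed_size_bytes(original_bytes, ratio):
    """Size after compression, given a compression ratio such as 100."""
    return original_bytes / ratio


original = 50 * GIB  # hypothetical VDB volume, chosen for illustration
best_case = compressed_size_bytes(original, ratio=100)

print(f"{original / GIB:.1f} GiB -> {best_case / GIB:.1f} GiB at a 100x ratio")
```

At that ratio, a volume too large for a single GPU's memory could shrink to a size that fits easily.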

NVIDIA is offering an early access program for the fVDB PyTorch extension. fVDB will also be integrated into the OpenVDB GitHub repository, making the technology easy to obtain.

To better understand fVDB and its potential impact, watch the SIGGRAPH fireside chats with NVIDIA founder and CEO Jensen Huang. These videos provide further insight into how accelerated computing and generative AI drive innovation and create new opportunities across industries.