Facebook AI has open-sourced two new datasets to help developers build more sophisticated conversational AI systems. The datasets are aimed at making such systems accessible to non-English-speaking communities as well. Conversational AI systems today rely on deep neural models trained on massive amounts of data to understand complicated requests in English, whereas the training data available in less widely spoken languages is very limited. Facebook AI claims that its method can overcome this limitation, using 10 times less training data to develop conversational AI that can perform complex tasks.

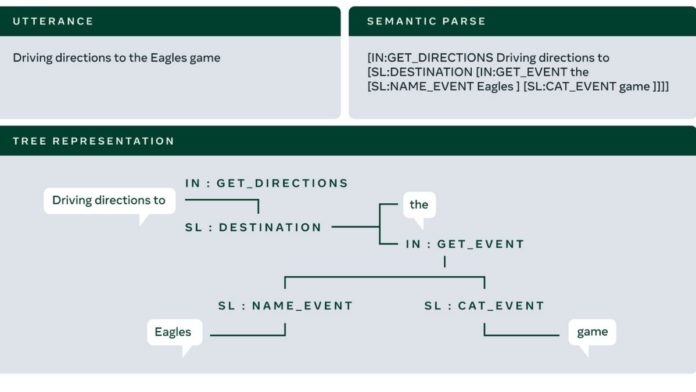

Classic NLU models take a straightforward approach to parsing a request. For example, when the model is given the command “Will it rain in Bangalore?”, it first matches the command to the primary intent “GET_WEATHER” from a set of predefined intent labels and then identifies the slots required for that intent; here, it tags Bangalore as the LOCATION slot. More complex commands require a much more sophisticated approach, and models need large amounts of domain-specific labeled training examples for every task.
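As a rough illustration of the flat intent-and-slot output described above, the sketch below hand-codes a toy parser in Python. Only the `GET_WEATHER` intent and the `LOCATION` slot come from the example; the keyword rule, the location list, and the names `ParsedCommand`, `KNOWN_LOCATIONS`, and `parse_command` are hypothetical stand-ins for what a trained model would learn from data.

```python
from dataclasses import dataclass, field

# Hypothetical gazetteer; a real NLU model learns slot boundaries from data.
KNOWN_LOCATIONS = {"bangalore", "mumbai", "delhi"}
# Hypothetical trigger words for the weather intent.
WEATHER_WORDS = {"rain", "weather", "sunny", "forecast"}


@dataclass
class ParsedCommand:
    intent: str
    slots: dict = field(default_factory=dict)


def parse_command(utterance: str) -> ParsedCommand:
    """Toy rule-based stand-in for an intent classifier plus slot tagger."""
    tokens = [tok.strip("?.,!") for tok in utterance.split()]

    # Step 1: match the utterance to one of the predefined intent labels.
    if any(tok.lower() in WEATHER_WORDS for tok in tokens):
        intent = "GET_WEATHER"
    else:
        intent = "UNSUPPORTED"

    # Step 2: fill the slots that the chosen intent requires.
    slots = {}
    if intent == "GET_WEATHER":
        for tok in tokens:
            if tok.lower() in KNOWN_LOCATIONS:
                slots["LOCATION"] = tok

    return ParsedCommand(intent, slots)


result = parse_command("Will it rain in Bangalore?")
print(result.intent, result.slots)
```

A flat structure like this holds one intent and its slots, which is why more complex, compositional commands call for a richer representation and more labeled data.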

“We enhance NLU models to support a more diverse set of domains without having to rely on manually annotated training data,” Facebook AI said in its blog. It added that the method can generate task-oriented semantic parsers for new domains with 25 training samples per intent or slot label. The approach starts from pretrained transformer models, such as BART. Out of the box, these models do not fine-tune well on only a few training samples, so they are further trained with meta-learning to improve generalization, which makes it easier to fine-tune them on target domains with little training data.
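To give a sense of how small a budget of 25 samples per label is, the sketch below draws such a subset from a larger labeled pool. It covers only the data-selection step, not meta-learning or fine-tuning, and it groups by intent only. The `(utterance, intent)` layout, the `CREATE_ALARM` label, the synthetic utterances, and the function name `sample_few_shot` are assumptions made for illustration, not the format of any released dataset.

```python
import random
from collections import defaultdict

SAMPLES_PER_LABEL = 25  # figure quoted in the article


def sample_few_shot(examples, per_label=SAMPLES_PER_LABEL, seed=0):
    """Keep at most `per_label` examples for each intent label.

    `examples` is a list of (utterance, intent) pairs (assumed layout).
    """
    by_label = defaultdict(list)
    for utterance, intent in examples:
        by_label[intent].append((utterance, intent))

    rng = random.Random(seed)  # fixed seed so the subset is reproducible
    subset = []
    for intent in sorted(by_label):
        group = by_label[intent]
        # Labels with fewer than `per_label` examples are kept in full.
        subset.extend(rng.sample(group, min(per_label, len(group))))
    return subset


# Synthetic placeholder data: 100 utterances for one intent, 10 for another.
full = [(f"weather query {i}", "GET_WEATHER") for i in range(100)]
full += [(f"alarm request {i}", "CREATE_ALARM") for i in range(10)]

few_shot = sample_few_shot(full)
print(len(few_shot))
```

Sampling per label rather than uniformly over the pool keeps rare intents represented, which matters when the whole training set for a new domain is this small.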

To improve model accuracy in low-resource settings, Facebook uses a technique called low-rank adaptive label smoothing (LORAS), which exploits the latent structure in the label space of the NLU task. To approach the performance of high-resource training, the meta-learning and LORAS techniques are applied to TOPv2, a new multi-domain NLU dataset with 8 domains and over 180,000 annotated samples. Scaling NLU models to new languages is tricky and time-consuming, as it typically involves building large annotated datasets.

To simplify this process, Facebook used multilingual NLU models that transfer what they learn from high-resource languages to languages with less data, using pretrained multilingual Transformer models, such as XLM-R, mBART, CRISS, and MARGE, as the building blocks of the NLU model.

In Facebook’s experiments, a single NLU model shared across multiple languages showed a significant performance improvement over per-language models, enabling faster scale-up to new languages. Using machine translation, Facebook also explored translate-align data augmentation and proposed a distant supervision technique to build models that generalize well without using any training data in the target language.

According to the blog, these zero-shot models achieve, on average, an error rate that approaches that of the best in-language NLU models. To support training models in languages other than English, Facebook released the MTOP dataset, a multilingual task-oriented parsing dataset with 100,000 utterances across 6 languages, 11 domains, and 117 intent types.

The new datasets pave the way for researchers and developers worldwide to build conversational AI systems that aren’t restricted by language. Facebook’s AI Chief Scientist, Yann LeCun, noted that the future of AI research is in creating more intelligent generalist models that can acquire new skills across different tasks, domains, and languages without massive amounts of labeled data. This is especially significant in conversational AI, where the primary job of the systems is to understand all types of users and serve all kinds of needs.

Facebook also said in the blog that it has a long-term commitment to building such systems, as the world is too varied and diverse to be understood by machines that were trained only on manually curated and labeled examples. Technical innovations, such as new model architectures, are only one part of ensuring that AI systems are fair. Facebook AI has therefore committed to developing AI responsibly, which also requires developing new ways to measure fairness, new technical toolkits, and ongoing, open dialogue with outside experts, policymakers, and others.