In collaboration with 33 academic labs, Deepmind has collectively gathered data from 22 diverse robot types to establish the Open X-Embodiment dataset and the RT-X model. This marks a significant advancement in the era of robotics, aligning with the goal of training robots for a wide range of tasks.

Deepmind’s recent initiative has resulted in the creation of an enormous dataset known as the Open X-Embodiment dataset. This dataset comprises data gathered from distinct robot types, and these robots have successfully executed 500 different skills and completed over 150,000 different tasks across more than a million episodes.

In this work, the team mentioned when a single model is trained using data from different robot types, and it performs much better across a variety of robots compared to models trained separately for each robot type.

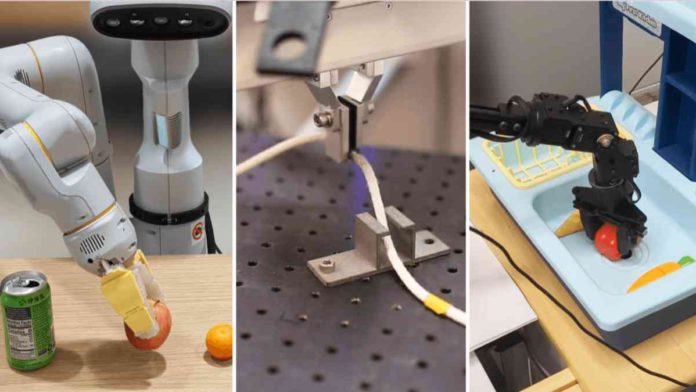

The image below has some examples from the Open X-Embodiment dataset showcasing over 500 skills and 150,000 tasks performed by robots.

Read More: DeepMind Announces SynthID Tool to Watermark AI-generated Images

RT-X model, a general-purpose robotics model, is built on two of Deepmind’s transformer models. The first one, RT-1-X, is trained on RT-1, a multi-task model designed to tokenize robot inputs and translate them into actions, including motor commands, camera images, or task instructions. This approach enhances real-time control capabilities, making it suitable for real-world robotic control on a large scale.

The other, RT-2-X, is trained using RT-2, a vision-language-action (VLA) model that leverages data from both web sources and robotics. This model excels in translating acquired knowledge into generalized instructions that can be further applied for controlling robotics in various scenarios.

The team has open-sourced this dataset and the trained models, allowing other researchers to further develop and expand upon this work.