Researchers at Stevens Institute of Technology, in collaboration with Princeton University and the University of Chicago, have taught an AI system to model first impressions and use facial images to accurately predict how people will be perceived. Their work, published in PNAS, introduces a neural-network-based model that can predict, with surprising precision, the arbitrary judgments people make about individual photos of faces.

When two people meet, they make quick judgments about everything from the other person’s age to their IQ or trustworthiness, based purely on how they look. First impressions can be tremendously powerful in shaping our relationships and influencing everything from hiring to criminal sentencing, even though they are frequently wrong. There is also ample psychological research showing that such judgments often bias our decision-making and thought processes.

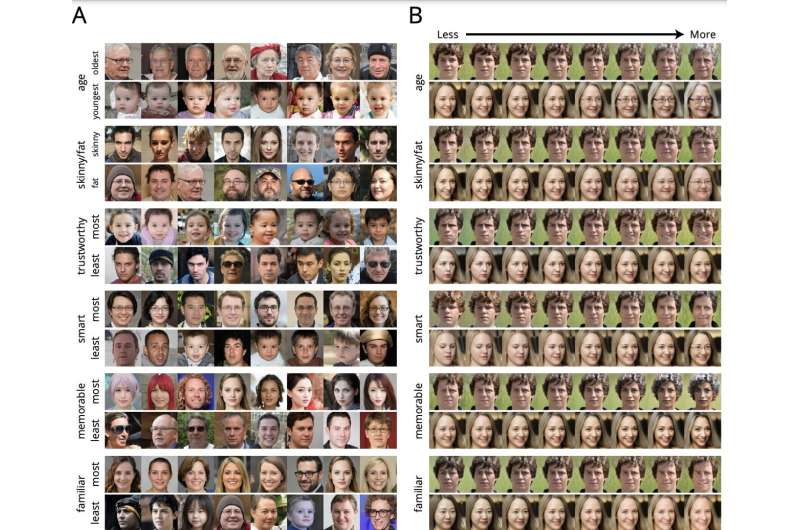

Thousands of people were asked to rate more than 1,000 computer-generated photographs of faces on qualities such as how intelligent, electable, religious, trustworthy, or outgoing the subject appeared to be. The ratings were then used to train a neural network to make similar snap judgments about people based solely on images of their faces. Jordan W. Suchow, a cognitive scientist and AI expert at the Stevens School of Business, led the team, which also included Joshua Peterson and Thomas Griffiths of Princeton and Stefan Uddenberg and Alex Todorov of Chicago Booth.

In recent years, computer scientists have built a variety of complex machine learning models that can analyze and categorize vast quantities of data, accurately anticipate certain events, and generate images, audio recordings, and text. However, in reviewing past research on judgments people make from faces, Peterson and his colleagues observed that relatively few studies had used state-of-the-art machine learning to investigate this area. According to Suchow, the team combined such machine learning with human assessments of faces to investigate the biased first impressions people form of one another.

Using just a photo of your face, Suchow says, the algorithm can predict what people’s first impressions of you will be and which preconceptions they will project onto you when they see you.

Many of the algorithm’s observations match common intuitions or cultural assumptions: for example, people who smile are perceived as more trustworthy, and people who wear glasses are perceived as more intelligent. In other cases, however, it is difficult to explain why the algorithm attributes a particular trait to a person.

Suchow notes that the algorithm does not provide targeted feedback or explain why a given image elicits a specific judgment. However, it can help us understand how we are perceived. “We could rank a series of photos according to which one makes you look most trustworthy, for instance, allowing you to make choices about how you present yourself,” says Suchow.

The algorithm was created to help psychology researchers generate face images for experiments on perception and social cognition, but it may also have real-world applications. The team pointed out that people generally curate their public personas carefully, for example by posting only photos they believe make them look bright, confident, or attractive, and it is easy to see how the algorithm could help with that. They noted that there is already a social norm of presenting oneself in a favorable light, which helps this use of the technology sidestep some of the ethical issues surrounding it.

On the malicious side, the algorithm could be used to manipulate images so that their subjects appear a certain way, such as making a political candidate look more trustworthy or making an opponent look unintelligent or suspicious. AI techniques are already being used to create “deepfake” videos depicting events that never occurred, and the team fears their new algorithm could subtly alter real images to influence viewers’ perceptions of the people in them.


To help ensure the neural-network-based algorithm is not misused, the research team has obtained a patent to protect its technology and is currently forming a startup to license the algorithm for pre-approved ethical purposes.

The current algorithm predicts the average response to a particular face across a large group of viewers; in the future, the team intends to build an algorithm that can anticipate how a single individual will react to another person’s face. This could provide significantly more insight into how snap judgments shape our social interactions, and could potentially help people recognize their first impressions and consider alternatives to them when making crucial decisions.