Researchers at Edge Impulse, a platform for building ML models for the edge, have created a machine learning solution that enables real-time object detection on devices with limited processing power and storage. The solution, called Faster Objects, More Objects (FOMO), is a novel deep learning architecture with the potential to open up new computer vision applications.

While cloud-based deep learning solutions have gained tremendous acceptance, they aren't suitable for every situation, because many applications need inference on the device itself. For example, internet connectivity is not guaranteed in some situations, such as drone-based rescue operations. Moreover, for applications that require real-time machine learning inference, the delay introduced by the round trip to the cloud can be risky. Edge devices promise to resolve these latency issues. However, many traditional deep learning models are too large to run on resource-constrained edge devices.

TinyML is an approach for optimizing machine learning models for resource-constrained embedded devices. These devices are typically battery-powered and have minimal computing power and memory. Because traditional machine learning models will not run on them, TinyML transforms and optimizes models so they can run on microcontrollers, the small, low-power processors embedded in electronic devices.
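To make the idea of "transforming and optimizing" a model concrete, here is a minimal sketch of post-training int8 quantization, one widely used TinyML optimization. It is an illustration under our own assumptions, not a description of Edge Impulse's toolchain, and the function names `quantize_int8` and `dequantize` are hypothetical. Storing each weight as one signed byte instead of a 32-bit float cuts weight storage by 4x at the cost of a small rounding error.

```python
import numpy as np

def quantize_int8(weights: np.ndarray):
    """Map float32 weights to int8 using one per-tensor scale and zero point (hypothetical helper)."""
    # Make the range include 0.0 so that zero stays exactly representable.
    w_min = min(float(weights.min()), 0.0)
    w_max = max(float(weights.max()), 0.0)
    scale = (w_max - w_min) / 255.0 or 1.0  # fall back to 1.0 for an all-zero tensor
    zero_point = int(round(-128 - w_min / scale))  # the int8 value that represents 0.0
    q = np.clip(np.round(weights / scale) + zero_point, -128, 127).astype(np.int8)
    return q, scale, zero_point

def dequantize(q: np.ndarray, scale: float, zero_point: int) -> np.ndarray:
    """Recover approximate float weights from their int8 codes."""
    return (q.astype(np.float32) - zero_point) * scale

rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.1, size=(64, 64)).astype(np.float32)
q, scale, zp = quantize_int8(w)
print(w.nbytes, q.nbytes)  # 16384 4096
print(np.abs(w - dequantize(q, scale, zp)).max())  # small: on the order of half a quantization step
```

Production toolchains usually also quantize activations and calibrate their ranges on sample data; this sketch handles a single weight tensor with a single scale.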

The ability to run a deep learning model on such a small device contributes significantly to the democratization of artificial intelligence. The rise of the Internet of Things (IoT) has driven the creation of edge computing devices that bring computational capacity closer to endpoints. TinyML algorithms can run on small, low-power devices at the end of an IoT network even when there is no connectivity. This lets devices process data in real time, right where it is produced, and identify and respond to problems without depending on network latency or bandwidth. Processing data at its source also helps protect it from attackers who target centralized data stores.

Looking ahead, making TinyML more accessible to developers will be crucial for turning otherwise wasted data into meaningful insights and for developing innovative applications across a variety of sectors.

The memory and processing requirements of most object-detection deep learning models exceed the capabilities of tiny microcontroller CPUs. FOMO, by contrast, needs only a few hundred kilobytes of memory, making it well suited to TinyML.

FOMO is based on the premise that not all object-detection applications need the high-precision output that state-of-the-art deep learning models can deliver. By identifying the most appropriate tradeoff among accuracy, performance, and memory, users can scale deep learning models down to very small sizes while keeping them effective.

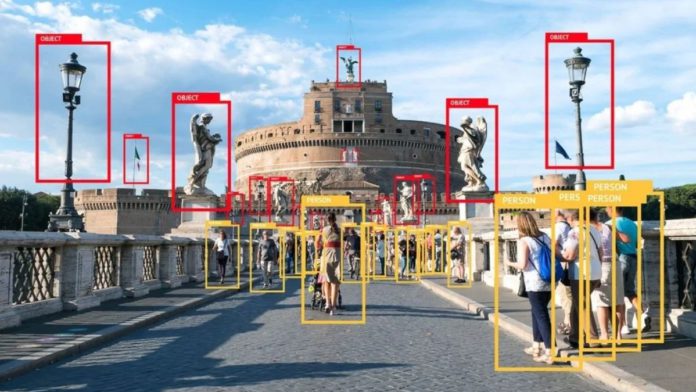

This is a remarkable milestone because, unlike image classification, which predicts whether a certain type of object is present in an image, object detection requires the model to recognize multiple objects as well as the bounding box of each one. As a result, object detection models are far more complex than image classification networks and require considerable processing power.

FOMO predicts each object's center rather than its bounding box. This is because many object detection applications care only about where objects are located in the frame, not about their sizes. Detecting centroids is substantially more computationally efficient than predicting bounding boxes and requires less data.
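A minimal sketch of the difference, assuming boxes are stored as pixel corner coordinates: a centroid needs two numbers per object instead of four, and the model no longer has to estimate each object's width and height. The `Box` type, the `box_to_centroid` helper, and the labels are hypothetical.

```python
from typing import NamedTuple

class Box(NamedTuple):
    """A hypothetical bounding-box annotation in pixel coordinates."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    label: str

def box_to_centroid(box: Box) -> tuple[float, float, str]:
    """Collapse a four-coordinate bounding box into a single (x, y) center point."""
    cx = (box.x_min + box.x_max) / 2
    cy = (box.y_min + box.y_max) / 2
    return cx, cy, box.label

boxes = [Box(10, 20, 30, 60, "bolt"), Box(50, 50, 70, 66, "nut")]
centroids = [box_to_centroid(b) for b in boxes]
print(centroids)  # [(20.0, 40.0, 'bolt'), (60.0, 58.0, 'nut')]
```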

FOMO also introduces structural changes compared to conventional deep learning architectures. The team explains that single-shot object detectors are composed of a collection of convolutional layers that extract features and several fully connected layers that predict the bounding boxes. The convolutional layers in a neural network extract visual features in a hierarchical order. The first layer identifies basic features in various orientations, such as lines and edges. Each convolutional layer is often paired with a pooling layer, which downsamples the layer's output while keeping the most important features in each region.
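The convolution-then-pooling step can be sketched in NumPy as follows. This is an illustration, not FOMO code: a hand-picked Prewitt vertical-edge kernel stands in for the filters a network would learn, a ReLU follows the convolution, and 2x2 max pooling keeps the strongest response in each window.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide the kernel over the image (no padding, stride 1) and sum elementwise products.

    As in most deep learning frameworks, the kernel is not flipped
    (strictly speaking, this is cross-correlation).
    """
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=np.float32)
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool_2x2(x: np.ndarray) -> np.ndarray:
    """Keep the maximum of each non-overlapping 2x2 window, halving both dimensions."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2  # drop an odd trailing row/column
    return x[:h, :w].reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

# A 6x6 image: dark left half, bright right half, so it contains one vertical edge.
image = np.zeros((6, 6), dtype=np.float32)
image[:, 3:] = 1.0
vertical_edge = np.array([[-1, 0, 1]] * 3, dtype=np.float32)  # Prewitt vertical-edge filter

features = np.maximum(conv2d_valid(image, vertical_edge), 0)  # ReLU activation
pooled = max_pool_2x2(features)
print(features[0])                   # [0. 3. 3. 0.]: strong response only around the edge
print(features.shape, pooled.shape)  # (4, 4) (2, 2)
```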

The output of the pooling layer is then passed to the next convolutional layer, which extracts higher-level features such as corners, arcs, and circles. As more convolutional and pooling layers are stacked, each feature map covers a larger region of the image and can capture complex patterns such as faces and whole objects. Finally, the fully connected layers flatten the last convolutional layer's output and predict the object class and bounding box.
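For contrast with FOMO, here is a simplified sketch of the classic "flatten, then fully connected" head described above, predicting a single object's class and box. The feature-map size, class count, and random weights are arbitrary assumptions, and real single-shot detectors differ in how their prediction heads are built. Note how the dense weight matrix grows with the size of the flattened feature map.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical final feature map: a 6x6 spatial grid with 32 channels.
feature_map = rng.standard_normal((6, 6, 32)).astype(np.float32)
num_classes = 3

flat = feature_map.reshape(-1)  # flatten: 6 * 6 * 32 = 1152 values
# One dense layer producing class scores plus 4 box coordinates (x_min, y_min, x_max, y_max).
W = (rng.standard_normal((flat.size, num_classes + 4)) * 0.01).astype(np.float32)
b = np.zeros(num_classes + 4, dtype=np.float32)
out = flat @ W + b

class_logits, box = out[:num_classes], out[num_classes:]
probs = np.exp(class_logits - class_logits.max())  # numerically stable softmax
probs /= probs.sum()
print(flat.size, W.size)  # 1152 8064
```

Even this toy head needs 8,064 weights to describe one object, and the count grows with both the feature-map size and the number of boxes predicted.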


In FOMO, the fully connected layers and the last few convolutional layers are dropped. This reduces the network's output to a smaller version of the original image, with each output value representing a small patch of the input. The network is then trained with a custom loss function so that each output unit predicts the class probabilities for its corresponding patch of the input image. The result is essentially a heatmap for each object class.
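The sketch below shows one way to build the per-cell training target and loss described here. The specifics are assumptions: a 96x96 input, one output cell per 8x8-pixel patch (so a 12x12 grid), channel 0 reserved for "background", and plain softmax cross-entropy with an optional extra weight on object cells. The article does not specify Edge Impulse's actual loss function, and `make_target` and `per_cell_cross_entropy` are hypothetical names.

```python
import numpy as np

INPUT_SIZE = 96                 # assumed input resolution in pixels
CELL_SIZE = 8                   # assumed: each output cell covers an 8x8-pixel patch
GRID = INPUT_SIZE // CELL_SIZE  # 12x12 output grid

def make_target(centroids, num_classes):
    """One-hot target of shape (GRID, GRID, num_classes + 1); channel 0 means background."""
    target = np.zeros((GRID, GRID, num_classes + 1), dtype=np.float32)
    target[..., 0] = 1.0  # every cell starts as background
    for cx, cy, class_id in centroids:  # class_id in 1..num_classes
        row, col = int(cy) // CELL_SIZE, int(cx) // CELL_SIZE
        target[row, col, :] = 0.0  # two objects in one cell collapse into a single label
        target[row, col, class_id] = 1.0
    return target

def per_cell_cross_entropy(logits, target, object_weight=1.0):
    """Softmax cross-entropy computed independently for every cell, then averaged.

    object_weight > 1 up-weights the few object cells relative to the many
    background cells (an assumption, a common choice for sparse targets).
    """
    z = logits - logits.max(axis=-1, keepdims=True)
    log_probs = z - np.log(np.exp(z).sum(axis=-1, keepdims=True))
    cell_loss = -(target * log_probs).sum(axis=-1)  # shape (GRID, GRID)
    weights = np.where(target[..., 0] == 1.0, 1.0, object_weight)
    return float((weights * cell_loss).mean())

# Two objects, given as (x, y, class_id) pixel centroids.
target = make_target([(20.0, 40.0, 1), (60.0, 58.0, 2)], num_classes=2)
logits = np.random.default_rng(0).standard_normal(target.shape).astype(np.float32)
print(target.shape, int(target[..., 1:].sum()))  # (12, 12, 3) 2
print(per_cell_cross_entropy(logits, target, object_weight=10.0))
```

Because each object occupies a single cell while most cells are background, up-weighting object cells (here with `object_weight=10.0`) is one way to keep the model from predicting "background" everywhere.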

This approach differs from pruning, a popular optimization technique that compresses neural networks by removing parameters that contribute little to the model's output.
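For comparison, here is a minimal sketch of magnitude-based pruning, one common form of the technique. It keeps the network's layers and tensor shapes but zeroes the smallest weights, whereas FOMO removes whole layers. `magnitude_prune` is a hypothetical helper.

```python
import numpy as np

def magnitude_prune(weights: np.ndarray, sparsity: float) -> np.ndarray:
    """Zero out (roughly) the smallest-magnitude fraction `sparsity` of the weights."""
    k = int(sparsity * weights.size)
    if k == 0:
        return weights.copy()
    # The k-th smallest absolute value becomes the cutoff; ties at the cutoff are also pruned.
    threshold = np.partition(np.abs(weights).ravel(), k - 1)[k - 1]
    return np.where(np.abs(weights) > threshold, weights, 0.0).astype(weights.dtype)

w = np.array([[0.9, -0.05, 0.3], [-0.6, 0.01, -0.2]], dtype=np.float32)
pruned = magnitude_prune(w, sparsity=0.5)
print(pruned)  # keeps 0.9, 0.3 and -0.6; zeroes -0.05, 0.01 and -0.2 (same 2x3 shape)
```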

FOMO’s architecture offers a number of major advantages. For starters, FOMO works with existing architectures like MobileNetV2, a popular deep learning model for image classification on edge devices.

FOMO also reduces the memory and compute needs of object detection by dramatically shrinking the neural network. According to Edge Impulse, it is 30 times faster than MobileNet SSD and can run on devices with less than 200 kilobytes of RAM.

FOMO also has limitations. For instance, it works best when objects are of similar size, which may not be the case if there is one very large object in the foreground and many small objects in the background. Additionally, if objects are too close together or overlap, they will occupy the same grid cell, lowering the detector's accuracy. To some extent, you can work around this limitation by decreasing FOMO's cell size or increasing the image resolution. The Edge Impulse team says FOMO is very effective when the camera is in a fixed position, such as when scanning products on a conveyor belt or counting cars in a parking lot.
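The grid-cell limitation is easier to see once the heatmap is turned into a list of objects. The sketch below assumes a simple post-processing step (threshold each cell, then merge touching cells into one object), which is our own illustration rather than Edge Impulse's published decoder; `heatmap_to_objects`, the 0.5 threshold, and the 8-pixel cell size are hypothetical.

```python
from collections import deque

import numpy as np

def heatmap_to_objects(heatmap, threshold=0.5, cell_size=8):
    """Group above-threshold cells into 4-connected blobs and return one center per blob.

    heatmap: (rows, cols) probabilities for a single class.
    Returns a list of (x, y) centers in input-image pixels.
    """
    mask = heatmap >= threshold
    seen = np.zeros_like(mask, dtype=bool)
    rows, cols = mask.shape
    objects = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r, c] or seen[r, c]:
                continue
            # Breadth-first search collects every active cell touching this one.
            queue, cells = deque([(r, c)]), []
            seen[r, c] = True
            while queue:
                y, x = queue.popleft()
                cells.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            ys, xs = zip(*cells)
            # Convert the blob's mean cell index to the pixel center of that position.
            objects.append(((np.mean(xs) + 0.5) * cell_size, (np.mean(ys) + 0.5) * cell_size))
    return objects

heatmap = np.zeros((12, 12))
heatmap[2, 2] = 0.9                  # an isolated object
heatmap[8, 9] = 0.8                  # another isolated object
heatmap[5, 5] = heatmap[5, 6] = 0.9  # two objects in adjacent cells merge into one blob
print(len(heatmap_to_objects(heatmap)))  # 3, although four objects were present
```

Two objects in neighboring cells are reported as one. Halving the cell size or doubling the input resolution could leave a gap of background cells between them, which is why those adjustments help.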

The Edge Impulse team hopes to build on this work, for example by shrinking the model to under 100 kilobytes and improving its transfer learning capabilities.