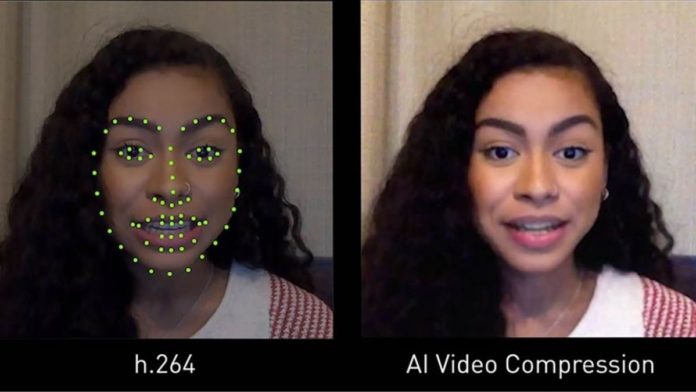

Nvidia announced Vid2Vid Cameo, an artificial intelligence model that uses generative adversarial networks (GANs) to generate realistic talking heads for video conferencing from a single 2D image. The model takes the first frame of the video as a 2D photo, extracts key points from it, and then uses an unsupervised learning method to obtain 3D key points.

Vid2Vid Cameo was first demonstrated in October 2020. It was designed for video conferencing and requires only a single picture of a person and a video stream that dictates how that person is animated. The model automatically identifies 20 key points that encode the structure of the face. The GAN on the receiver's end uses these key points to generate a video that animates the person in the 2D picture as a 3D talking head.
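
The split between a one-time picture and per-frame key points can be sketched as a simple message protocol. This is a minimal illustration under stated assumptions: `extract_keypoints` is a hypothetical stand-in for the learned key point detector, `generate` is a placeholder for the real GAN generator, and the message types and their names are invented for the example, not taken from any Nvidia SDK.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Optional, Tuple, Union

# Hypothetical message protocol for a key-point-based call (not an Nvidia API).
Keypoint = Tuple[float, float, float]  # (x, y, z)
NUM_KEYPOINTS = 20  # the article says the model identifies 20 key points


@dataclass
class ReferenceMessage:
    image: bytes  # the single 2D picture, sent once at the start


@dataclass
class KeypointMessage:
    keypoints: List[Keypoint]  # motion for one frame of the driving video


Message = Union[ReferenceMessage, KeypointMessage]


def extract_keypoints(frame_index: int) -> List[Keypoint]:
    """Hypothetical stand-in for the learned key point detector."""
    return [(float(frame_index), float(k), 0.0) for k in range(NUM_KEYPOINTS)]


def sender(reference_image: bytes, num_frames: int) -> Iterator[Message]:
    """Send the picture once, then only key points for every frame."""
    yield ReferenceMessage(reference_image)
    for i in range(num_frames):
        yield KeypointMessage(extract_keypoints(i))


@dataclass
class Receiver:
    reference: Optional[bytes] = None
    frames: List[str] = field(default_factory=list)

    def handle(self, msg: Message) -> None:
        if isinstance(msg, ReferenceMessage):
            self.reference = msg.image  # cache the picture for later frames
        elif isinstance(msg, KeypointMessage):
            if self.reference is None:
                raise RuntimeError("key points arrived before the reference image")
            self.frames.append(self.generate(self.reference, msg.keypoints))

    def generate(self, reference: bytes, keypoints: List[Keypoint]) -> str:
        # Placeholder for the GAN generator, which would synthesize a frame
        # from the cached picture and this frame's key points.
        return f"frame from {len(reference)}-byte picture + {len(keypoints)} key points"


receiver = Receiver()
for message in sender(b"photo", num_frames=3):
    receiver.handle(message)
print(receiver.frames[0])  # frame from 5-byte picture + 20 key points
print(len(receiver.frames))  # 3
```

The design point is that the expensive payload (the picture) crosses the network once, while every subsequent frame carries only a short list of coordinates.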

A GAN is a two-part model: a generator that creates samples and a discriminator that tries to distinguish real-world samples from generated ones. Training the two against each other has produced impressive feats of media synthesis. According to Nvidia, high-performance GANs can create realistic portraits of people and objects that don't exist.
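
The adversarial setup can be made concrete with the standard GAN losses. The sketch below is the textbook binary cross-entropy formulation with the common non-saturating generator loss; it is not Vid2Vid Cameo's actual training objective, which the article does not describe, and it takes the discriminator's output probabilities as plain numbers instead of running real networks.

```python
import math
from typing import Sequence

EPS = 1e-12  # keeps log() away from zero


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Binary cross-entropy: reward D for scoring real samples near 1 and fakes near 0."""
    real_term = sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating generator loss: reward G when D scores its fakes near 1."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)


# Inputs are discriminator outputs (probabilities that a sample is real).
# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
print(round(discriminator_loss([0.5], [0.5]), 4))  # 1.3863
print(round(generator_loss([0.5]), 4))  # 0.6931
```

In training, the two losses are minimized in alternation: the discriminator's update makes fakes easier to spot, and the generator's update makes them harder to spot.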

Vid2Vid Cameo generates the 3D talking head using one-tenth of the bandwidth normally required for video conferencing. Rather than streaming every pixel of each frame, the model analyzes the facial key points of each person on a call and then algorithmically reanimates the face in the video on the receiver's end.
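
To see why sending key points is cheap, here is rough arithmetic for the per-frame payload. It is a hypothetical estimate, assuming 20 key points sent as uncompressed float32 (x, y, z) triples at 30 fps and ignoring any other data the real codec may transmit; the raw-frame figure is for an uncompressed 1280×720 RGB frame.

```python
# Hypothetical payload estimate for a key-point-based video call.
# Assumptions (not from Nvidia): 20 key points, each an uncompressed
# float32 (x, y, z) triple, sent at 30 frames per second.
BYTES_PER_FLOAT32 = 4


def keypoint_bytes_per_frame(num_keypoints: int = 20, dims: int = 3) -> int:
    """Bytes needed to send one frame's key points without compression."""
    return num_keypoints * dims * BYTES_PER_FLOAT32


def raw_frame_bytes(width: int, height: int, channels: int = 3) -> int:
    """Bytes in one uncompressed frame with 8 bits per channel."""
    return width * height * channels


def kbps(bytes_per_frame: int, fps: int = 30) -> float:
    """Convert a per-frame payload into kilobits per second."""
    return bytes_per_frame * 8 * fps / 1000


if __name__ == "__main__":
    kp = keypoint_bytes_per_frame()
    raw = raw_frame_bytes(1280, 720)
    print(f"key points: {kp} B/frame, {kbps(kp):.1f} kbps")  # 240 B/frame, 57.6 kbps
    print(f"raw 720p:   {raw} B/frame, {kbps(raw):.1f} kbps")  # 2764800 B/frame, 663552.0 kbps
```

Conventional video calls already compress their frames heavily, so the raw-pixel number is for illustration only and is not the baseline behind the one-tenth figure.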

Nvidia announced that Vid2Vid Cameo would be available in the Nvidia Video Codec SDK and the Nvidia Maxine SDK as the AI Face Codec. According to Nvidia, the model was trained on a dataset of 180,000 high-quality videos.