When humans see, they understand the scene by relating with objects. However, deep learning models struggle to understand the entangled relationships between individual objects. Without knowledge of object relationships, a robot that’s supposed to help someone in a kitchen would have difficulty following complex commands like “pick up the spatula that is to the left of the stove and place it on top of the cutting board.” MIT researchers have developed an AI model that understands object relationships to solve this problem. The model works by representing individual relationships and then combining them to describe the overall scene, enabling the model to generate more accurate actions.

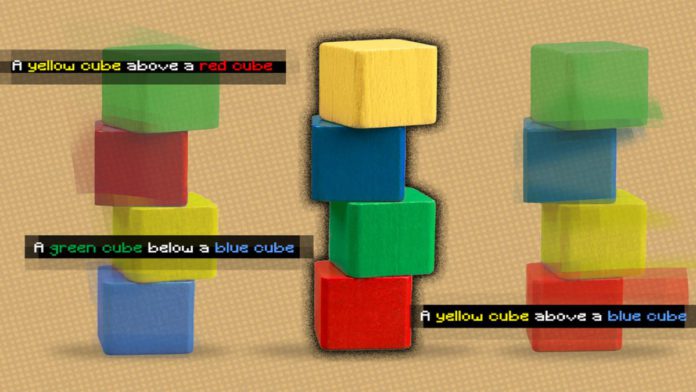

The framework generates an image of a scene based on a text description of objects and their relationships. Next, the system would break these sentences down into smaller pieces to describe each relationship. It then combines the smaller relationships through an optimization process that generates an image of the scene. In addition, breaking sentences allows the system to recombine shorter pieces in various ways, making it better to adapt to new scene descriptions.

“When I look at a table, I can’t say that there is an object at XYZ location. Our minds don’t work like that. In our minds, when we understand a scene, we really understand it based on the relationships between the objects. We think that by building a system that can understand the relationships between objects, we could use that system to more effectively manipulate and change our environments,” says Yilun Du, a Ph.D. student in the Computer Science and Artificial Intelligence Laboratory (CSAIL) and co-lead author of the paper.

Read more: OpenAI’s GPT-3 is now Open for All

Other co-lead authors on the paper were Shuang Li, a CSAIL Ph.D. student, and Nan Liu, a graduate student at the University of Illinois; Joshua B. Tenenbaum, Professor of Cognitive Science and Computation; and senior author Antonio Torralba, the Delta Electronics Professor of Electrical Engineering and Computer Science. In December, they will present the research in a paper titled Learning to Compose Visual Relations at the Conference on Neural Information Processing Systems.

The researchers used energy-based models, a machine-learning technique to represent the individual object relationships in a scene description. The system also works in reverse, finding text descriptions when given an image that matches the relationships between objects in the scene. In addition, their model can edit an image by rearranging the objects in the scene to fit a new description.

The MIT researcher’s model outperformed the baselines compared to other deep learning methods that were given text descriptions and tasked with generating images that displayed the corresponding objects and their relationships. They also asked humans to evaluate whether the generated images matched the original scene description, and 91 percent of participants concluded that the new model performed better.

This research is helpful in situations where industrial robots perform multi-step manipulation tasks, like assembling appliances or stacking items in a warehouse. Li also added that their model can learn from less data but can generalize to more complex scenes. The researchers would like to see how their model performs on complex real-world images with noisy backgrounds and objects blocking one another.