Singapore’s Ministry of Health and Ministry of Education launched the Mindline at Work tool in August 2022 for public-sector teachers as part of a mental health initiative. A chatbot component was trialed during development. However, anxious and burned-out teachers who came to the portal for help were met with comments like: “Remember, our thoughts are not always helpful. If a family member or friend was in your place, would they also see it the same way?” Screenshots of the chatbot’s unsettling interactions went viral on social media.

Wysa

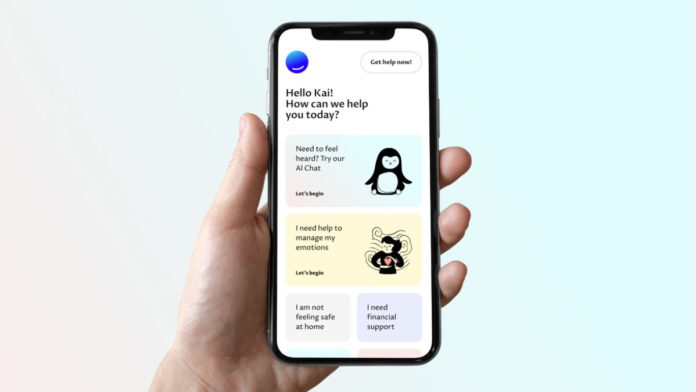

As part of the mental health initiative, the Singaporean government partnered with Wysa, a well-known name in the AI therapy app sector. Wysa is recognized for having one of the most substantial evidence bases among such apps and is clinically recommended by expert groups such as the Organisation for the Review of Care and Health Apps.


Despite that, users interviewed in an investigation by Rest of World described Mindline at Work as one-size-fits-all software that struggles to meet teachers’ specific needs. More broadly, psychology experts warn that offering digital therapy or wellness apps can backfire when the root causes of mental health issues, in this case workplace conditions, remain unaddressed.

Singapore Government’s Mental Health Initiative

Singapore’s government is the first to bring Wysa’s therapy bot into a national-level service. The original Mindline.sg initiative, launched in June 2020, aimed to help anyone in Singapore access care during the pandemic. Four months later, the Ministry of Health’s Office for Healthcare Transformation integrated Wysa’s bot into the platform as an “emotionally intelligent” listener. Later, when teacher burnout during the pandemic became a prominent news topic, an extension, Mindline at Work, was rolled out as a more tailored version for public education professionals.

Mindline at Work’s Generic Advice

Complaints emerged only a few days after the extension launched. Users were frustrated by the chatbot’s generic advice, saying it was the wrong tool to address the root causes of teachers’ stress, including uncapped working hours, demanding performance appraisal systems, and large class sizes.

“It’s pretty useless from the teacher’s point of view,” said a public school teacher in his late 20s. He said that no one he knows takes the Mindline bot seriously. “It’s a joke. It is trying to gaslight the teachers into saying, ‘Oh, this kind of workload is normal. Let’s see how you can reframe your perspective on this,’” he said.

Experts’ Take

In response to the backlash, Sarah Baldry, Wysa’s vice president of marketing, said that the app helps its users to build emotional resilience. “It is important to understand that the chatbot can’t change the behavior of others. Wysa can only help users change the way they feel about things themselves.”

Some research suggests that AI apps can help alleviate symptoms of anxiety and depression, but a significant issue is that most of them are not evidence-based. Other barriers to the uptake of AI therapy bots include concerns about data privacy and low user engagement. Reetaza Chatterjee, who works in the nonprofit sector as the founder of the mental health collective Your Head Lah, voiced the privacy concern directly: “I don’t trust that these apps wouldn’t share my confidential information and data with my employers,” she said.

Overall, privacy remains a weak point. The Mozilla Foundation, a digital rights nonprofit, found that many mental health apps fail badly at protecting it. However, Mozilla noted that Wysa was a strong exception, though the users who commented on the matter had not used the platform enough to think about it.

Conclusion

Although artificial intelligence is rapidly transforming the healthcare industry, particularly the mental health sector, the example of Mindline at Work shows that it cannot be relied upon completely, and over-reliance can backfire. AI still has a long way to go before it genuinely matches human intelligence. Until then, we can make the most of what the technology offers to improve mental health care while staying alert to its shortcomings and failures.