AI has driven remarkable progress in several fields. Data analytics, large language models, and other applications that use enormous amounts of data to detect patterns, learn rules, and then apply them have benefited greatly from existing AI algorithms. The foundational idea behind AI is to replicate the functioning of the human brain using arithmetic and digital representations. But whereas the human brain relies on spiking signals sent across neuron synapses, AI processes data by carrying out matrix multiplications. In addition, unlike the human brain, AI models require weeks of training, consume enormous amounts of power, and run on silicon-based chips, which are currently in short supply across the semiconductor industry. To address these persistent concerns, scientists have turned to neuromorphic computing.

In essence, neuromorphic computing is the concept of designing computer systems that mimic the brain’s neural structure. A neuromorphic chip such as Intel’s Loihi 2 attempts to simulate the real-time, stimulus-based learning that occurs in brains. Because existing AI models are bound by computational, literal interpretations of events, the next generation of AI must be able to respond quickly to unusual circumstances, as the human brain does. The world is unpredictable and dynamic, so AI must be able to handle peculiar situations as they arise in real time.

The emergence of neuromorphic computing has prompted major efforts to design new, nontraditional computational systems based on recurrent neural networks, which are critical to a wide range of modern technological applications such as pattern recognition and autonomous driving.

Most existing chips use the von Neumann architecture, in which memory and processing units are separate. In such systems, data is fetched from memory, moved to the processing unit, processed there, and then written back to memory. This constant back-and-forth drains both time and energy, and the resulting bottleneck becomes even more severe when processing massive datasets.

The human brain consumes less than 20 watts, yet it still outperforms supercomputers at many tasks, a testament to its energy efficiency. By building artificial neural systems out of “neurons” (the nodes that process information) and “synapses” (the connections between those nodes), neuromorphic computing aims to replicate both the function and the efficiency of the brain. These artificial counterparts of biological neural networks are called spiking neural networks (SNNs). They are arranged in layers, and each spiking neuron can fire independently and interact with the others, so artificial neurons respond to inputs by triggering a cascade of changes. Researchers can also vary the amount of current that passes between nodes to simulate the different intensities of brain impulses.
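To make the idea of a neuron that "fires independently" concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a common textbook model of a spiking unit. It is only an illustration: the article does not say which neuron model Loihi 2 or any particular SNN uses, and the function name `simulate_lif` and every parameter value (time constant, threshold, dimensionless units) are hypothetical.

```python
def simulate_lif(current, duration=100.0, dt=0.1, tau=10.0, resistance=1.0,
                 v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Return the spike times of a leaky integrate-and-fire neuron driven by
    a constant input current, integrated with the forward Euler method.

    All quantities are in hypothetical, dimensionless units.
    """
    v = v_rest
    spike_times = []
    for step in range(round(duration / dt)):
        # The membrane potential leaks toward v_rest while integrating input.
        v += (-(v - v_rest) + resistance * current) * dt / tau
        if v >= v_threshold:
            # Crossing the threshold emits a spike and resets the potential.
            spike_times.append((step + 1) * dt)
            v = v_reset
    return spike_times


if __name__ == "__main__":
    for current in (0.5, 1.5, 3.0):
        print(current, len(simulate_lif(current)))
```

With these settings, a current of 0.5 never drives the potential to threshold (0 spikes), while currents of 1.5 and 3.0 produce 9 and 24 spikes respectively: information is carried by when and how often the neuron fires rather than by a continuous value.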

However, spiking neural networks face a major limitation: they cannot freely choose the resolution of the data they store or when they access it during computation. They can be thought of as nonlinear filters that process data in real time as it passes through them. To process a sensory input stream in real time, these networks must keep a short-term memory of their most recent inputs. Without learning, the lifetime of these memory traces is fundamentally limited by the network’s size and by the longest time scales its components can handle. This makes volatile memory technologies based on fading memory traces a pressing need.
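The time-scale limit can be seen in the simplest possible memory element: a leaky integrator whose trace of a past input decays exponentially with time constant `tau`. In this toy sketch (the functions `impulse_trace` and `memory_horizon` and the 1% readability threshold are hypothetical choices, not taken from the study), how long an input remains readable grows with the slowest available time constant, roughly `tau * ln(1 / threshold)` steps.

```python
import math


def impulse_trace(tau, steps, dt=1.0):
    """Response of a leaky integrator x[t] = a * x[t-1] + u[t], with
    a = exp(-dt / tau), to a single unit impulse u[0] = 1."""
    decay = math.exp(-dt / tau)
    x = 0.0
    trace = []
    for t in range(steps):
        u = 1.0 if t == 0 else 0.0
        x = decay * x + u
        trace.append(x)
    return trace


def memory_horizon(tau, threshold=0.01, max_steps=10_000):
    """Count the time steps during which the impulse's trace stays at or
    above `threshold`. The trace decays monotonically, so this is how long
    the past input remains readable."""
    return sum(1 for x in impulse_trace(tau, max_steps) if x >= threshold)


if __name__ == "__main__":
    for tau in (5, 50):
        print(tau, memory_horizon(tau))
```

Here `memory_horizon(5)` is 24 steps and `memory_horizon(50)` is 231 steps: a tenfold longer time constant buys a roughly tenfold longer memory, which is why fixed component time scales cap how far back such a network can "remember".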

Because biological neurons also operate in a liquid environment, applying nanoscale nonlinear fluid dynamics to neuromorphic computing could bring artificial and biological computing closer together.

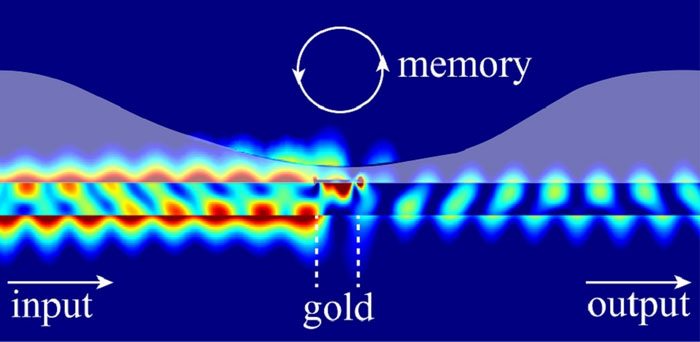

Researchers at the University of California San Diego have developed a new paradigm in which liquids, which ordinarily interact only weakly with light at the micro- and nanoscale, support a sizable nonlinear response to optical fields. According to their study, published in Advanced Photonics, a nanoscale gold patch acting as an optical heater would produce a strong light-liquid interaction by changing the thickness of a liquid layer that covers the waveguide.

The researchers explain that the liquid film serves as an optical memory. It works through a mutual interaction between the optical mode and the liquid film: light in the waveguide deforms the liquid surface, and the changed surface shape in turn alters the properties of the waveguide’s optical mode. Notably, the optical mode responds nonlinearly to changes in the liquid geometry. After an optical pulse ends, its power can be inferred from how much the liquid layer has deformed. Unlike in conventional von Neumann computing, the nonlinear response and the memory occupy the same spatial region, which opens the possibility of a compact, beyond-von-Neumann design in which memory and computation are housed in the same place.
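The memory mechanism can be caricatured with a single state variable: the film deformation `h` relaxes toward a power-dependent target while the pulse is on and decays back afterward, so its value after the pulse still encodes the pulse power. The sketch below is a hypothetical toy model, not the researchers’ multiphysics simulation: the relaxation rate, the saturating `tanh` response standing in for the nonlinearity, and all names and units are assumptions made for illustration.

```python
import math


def film_deformation(power, pulse_steps, wait_steps, rate=0.05,
                     gain=1.0, h_max=1.0):
    """Toy model of the liquid film (hypothetical). During the pulse the
    deformation h relaxes toward a saturating, nonlinear target set by the
    optical power; after the pulse it relaxes back toward zero.
    Returns h at the end of the wait."""
    target = h_max * math.tanh(gain * power / h_max)  # assumed nonlinearity
    h = 0.0
    for _ in range(pulse_steps):
        h += rate * (target - h)  # pulse on: the film deforms
    for _ in range(wait_steps):
        h -= rate * h             # pulse off: the deformation fades
    return h


def estimate_pulse_power(h, pulse_steps, wait_steps, rate=0.05,
                         gain=1.0, h_max=1.0):
    """Invert the toy model: recover the past pulse power from the
    deformation that remains after the pulse has ended."""
    charged = 1.0 - (1.0 - rate) ** pulse_steps  # fraction of target reached
    faded = (1.0 - rate) ** wait_steps           # fraction still remaining
    target = h / (charged * faded)
    return h_max * math.atanh(target / h_max) / gain


if __name__ == "__main__":
    for p in (0.2, 0.5, 1.0, 1.5):
        h = film_deformation(p, pulse_steps=40, wait_steps=20)
        print(p, round(h, 4), round(estimate_pulse_power(h, 40, 20), 6))
```

In this caricature the same variable `h` is both the memory (it outlives the pulse) and the site of the nonlinearity (doubling the power less than doubles `h`), which mirrors, very loosely, the co-location of memory and computation described above.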

The researchers show how memory and nonlinearity can be combined to perform “reservoir computing,” which can carry out digital and analog tasks such as handwritten image recognition and nonlinear logic gates.
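Reservoir computing itself is easy to sketch in software. A fixed, randomly connected nonlinear system (the reservoir) is driven by the input stream, and only a linear readout of its state is trained. The sketch below uses a conventional echo-state-style reservoir of `tanh` nodes as a stand-in for the liquid film; that is a swapped-in technique, not the researchers’ physical model. The task (XOR of the current and previous input bit, a simple temporal nonlinear logic gate), the network size, and all function names and parameters are hypothetical. XOR is a useful test because no linear readout of the two raw bits can compute it, so any success has to come from the reservoir’s nonlinearity and memory.

```python
import math
import random


def run_reservoir(inputs, n_nodes=40, radius=0.9, seed=0):
    """Drive a fixed random tanh reservoir with a scalar input stream and
    return its state (a list of n_nodes floats) at every time step."""
    rng = random.Random(seed)
    w_in = [rng.uniform(-1.0, 1.0) for _ in range(n_nodes)]
    bias = [rng.uniform(-1.0, 1.0) for _ in range(n_nodes)]
    # Uniform entries in [-s, s] have variance s**2 / 3, so by the circular law
    # the spectral radius is roughly s * sqrt(n_nodes / 3); this s puts it near
    # `radius` (a heuristic, not a guarantee of the echo state property).
    s = radius * math.sqrt(3.0 / n_nodes)
    w = [[rng.uniform(-s, s) for _ in range(n_nodes)] for _ in range(n_nodes)]
    x = [0.0] * n_nodes
    states = []
    for u in inputs:
        # Each node mixes the new input with the previous state through tanh,
        # giving the reservoir both nonlinearity and memory of past inputs.
        x = [math.tanh(sum(w[i][j] * x[j] for j in range(n_nodes))
                       + w_in[i] * u + bias[i])
             for i in range(n_nodes)]
        states.append(x)
    return states


def train_readout(states, targets, ridge=1e-4):
    """Fit linear readout weights (the last one is a bias) by ridge
    regression, solving the normal equations with Gaussian elimination."""
    feats = [s + [1.0] for s in states]
    d = len(feats[0])
    a = [[sum(f[i] * f[j] for f in feats) + (ridge if i == j else 0.0)
          for j in range(d)] for i in range(d)]
    b = [sum(f[i] * y for f, y in zip(feats, targets)) for i in range(d)]
    for col in range(d):
        # Partial pivoting: swap in the row with the largest entry in `col`.
        pivot = max(range(col, d), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, d):
            factor = a[r][col] / a[col][col]
            for c in range(col, d):
                a[r][c] -= factor * a[col][c]
            b[r] -= factor * b[col]
    weights = [0.0] * d
    for r in range(d - 1, -1, -1):  # back substitution
        weights[r] = (b[r] - sum(a[r][c] * weights[c] for c in range(r + 1, d))) / a[r][r]
    return weights


def predict(weights, states):
    """Apply the trained linear readout to a sequence of reservoir states."""
    return [sum(wi * xi for wi, xi in zip(weights, s + [1.0])) for s in states]


def xor_demo(n_steps=600, washout=50, split=400, seed=42):
    """Train the readout to output XOR of the current and previous input bit.
    Returns (train_mse, baseline_mse, test_accuracy)."""
    rng = random.Random(seed)
    bits = [rng.randint(0, 1) for _ in range(n_steps)]
    states = run_reservoir([2 * b - 1 for b in bits])  # feed bits as -1/+1
    train_idx = range(max(1, washout), split)  # discard the initial transient
    test_idx = range(split, n_steps)
    y_train = [bits[t] ^ bits[t - 1] for t in train_idx]
    y_test = [bits[t] ^ bits[t - 1] for t in test_idx]
    weights = train_readout([states[t] for t in train_idx], y_train)
    fit = predict(weights, [states[t] for t in train_idx])
    out = predict(weights, [states[t] for t in test_idx])
    train_mse = sum((p - y) ** 2 for p, y in zip(fit, y_train)) / len(y_train)
    mean = sum(y_train) / len(y_train)
    baseline = sum((y - mean) ** 2 for y in y_train) / len(y_train)
    accuracy = sum((p > 0.5) == (y == 1) for p, y in zip(out, y_test)) / len(y_test)
    return train_mse, baseline, accuracy


if __name__ == "__main__":
    print(xor_demo())
```

`xor_demo()` returns the training error, the error of always predicting the mean, and the accuracy on held-out steps; the exact numbers depend on the random seeds. Only the readout weights are trained, which is what makes reservoir computing attractive for physical substrates such as a liquid film: the substrate itself never has to be adjusted.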


Their model also exploits the nonlocal response of liquids, which allows the researchers to predict computational enhancements that are not achievable on solid-state platforms, whose nonlocal spatial scale is finite. Even with this advantage, the model falls short of contemporary solid-state, optics-based reservoir computing systems. Nonetheless, the research provides a clear roadmap for future experiments in neuromorphic computing that seek to test the predicted effects and to investigate complex coupling mechanisms between diverse physical processes in a liquid environment for computation.
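Nonlocality can be stated compactly. In a standard phenomenological description of a nonlocal nonlinear medium (a generic textbook form, not the equations used in the study), the nonlinear change in refractive index at position `x` depends on the optical intensity `I` in a neighborhood around it through a normalized response kernel `R`. The coefficient `n_2` and the kernel `R` are symbols introduced here for illustration.

```latex
\Delta n(x) = n_2 \int_{-\infty}^{\infty} R(x - x')\, I(x')\, \mathrm{d}x',
\qquad
\int_{-\infty}^{\infty} R(\xi)\, \mathrm{d}\xi = 1,
\qquad
R(\xi) \to \delta(\xi) \;\Rightarrow\; \Delta n(x) = n_2\, I(x).
```

When the kernel shrinks to a delta function, the response becomes local (the ordinary Kerr effect); the wider the kernel, the farther a bright spot can influence the medium away from where the light actually is.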

Using multiphysics simulations of the interplay between light, fluid dynamics, heat transfer, and surface tension, the researchers predicted several unique nonlinear and nonlocal optical phenomena. They go a step further by showing how these effects could be used to build adaptable, unconventional computational systems. By using a mature silicon photonics platform, the researchers project improvements over state-of-the-art liquid-assisted computation systems of about five orders of magnitude in spatial footprint and at least two orders of magnitude in speed.

A YouTube presentation of this research is also available.