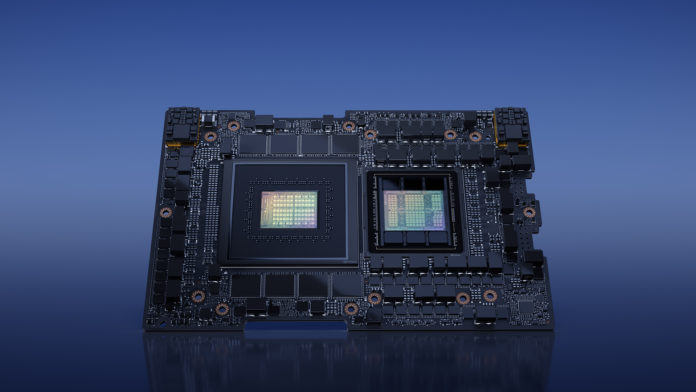

On Tuesday, Nvidia unveiled the GH200 Grace Hopper superchip, which is designed to run AI models, as it tries to fend off competition from AMD, Google, and Amazon in the budding AI market.

The new GH200 Grace Hopper superchip pairs a 72-core Grace CPU with 141 GB of HBM3e memory, which is organized into six 24 GB stacks behind a 6,144-bit memory interface. Although Nvidia physically installs 144 GB of memory, only 141 GB is usable, a trade-off that improves manufacturing yields.
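The capacity and interface figures in the paragraph above follow from simple arithmetic. The short sketch below reproduces them, assuming the standard 1,024-bit interface per HBM stack (a per-stack width the article itself does not state); the constant names are illustrative only.

```python
# Back-of-the-envelope check of the GH200 HBM3e figures quoted in the article.
# Assumption: each HBM3e stack exposes a 1,024-bit interface (not stated in the article).

STACKS = 6             # number of HBM3e stacks
GB_PER_STACK = 24      # capacity of each stack, in GB
BITS_PER_STACK = 1024  # assumed interface width per stack
USABLE_GB = 141        # capacity Nvidia exposes to software

installed_gb = STACKS * GB_PER_STACK      # physical capacity on the package
bus_width_bits = STACKS * BITS_PER_STACK  # combined memory interface width
disabled_gb = installed_gb - USABLE_GB    # capacity left unused to improve yields

print(f"installed: {installed_gb} GB")
print(f"interface: {bus_width_bits}-bit")
print(f"disabled: {disabled_gb} GB ({disabled_gb / installed_gb:.1%})")
```

Running it prints 144 GB installed, a 6,144-bit interface, and 3 GB (about 2.1%) of capacity disabled.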

The California-based company said that HBM3e memory, which is 50% faster than current HBM3 technology, will power its next-generation GH200 Grace Hopper platform.

The platform's dual configuration, which provides up to 3.5x more memory capacity and 3x more bandwidth than Nvidia's current-generation offering, will let developers run larger Large Language Models (LLMs).

CEO Jensen Huang said at a presentation on Tuesday that the new technology would help with the “scale-out of the world’s data centers.” He also predicted that “the inference cost of large language models will drop significantly,” referring to inference, the generative phase of AI computing that follows LLM training.


Nvidia's latest product launch comes after the hype surrounding AI technology propelled the company's market value above $1 trillion in May. Nvidia has become one of the market's brightest stars in 2023, thanks to soaring demand for its GPUs and an expected shift in data center infrastructure.

By some estimates, Nvidia currently holds more than 80% of the market for AI chips. Graphics processing units, or GPUs, are the company's area of expertise, and they have become the processors of choice for the large AI models behind generative AI applications such as Google's Bard and OpenAI's ChatGPT.

Even so, Nvidia's chips are hard to come by, as tech giants, cloud service providers, and startups compete for GPU capacity to build their own AI models.