A multidisciplinary team of MIT researchers has built a new analog processor that mimics synapses. The team pushed the device's speed limits so that its artificial synapses operate about 1 million times faster than synapses in the human brain, while also improving energy efficiency. They used a functional inorganic material in the fabrication process, which lets the devices run faster than the team's previous version.

The newly developed inorganic material is compatible with standard fabrication techniques, so the devices, which are programmable resistors, can be integrated into commercial computing hardware for deep-learning applications. These programmable resistors could greatly speed up neural-network training, and the material allows the devices to be fabricated at the nanometer scale.

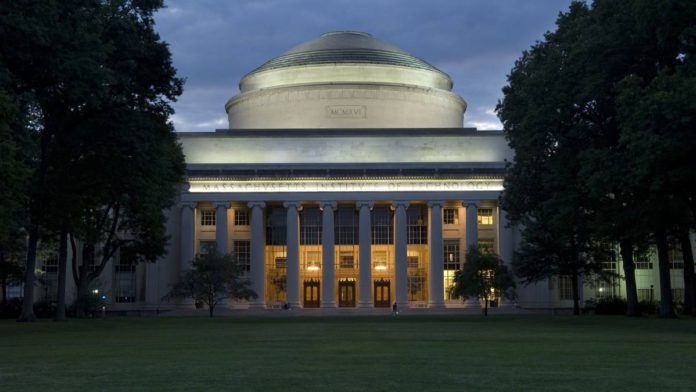

Senior author Jesus A. del Alamo, the Donner Professor in MIT’s Department of Electrical Engineering and Computer Science (EECS), explained that the device works through the “electrochemical insertion of the proton into an insulating oxide to modulate its electronic conductivity.”
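To make "programmable resistor" concrete, here is a toy model, not the MIT team's device physics. It assumes, purely for illustration, that each programming pulse inserts or removes a fixed amount of protons and shifts the conductance by a fixed step, clamped between a minimum and maximum. The class name `ProtonicResistor` and every numeric parameter are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProtonicResistor:
    """Toy programmable resistor (hypothetical model and parameters).

    Assumption: conductance changes linearly with each programming pulse,
    standing in for protons inserted into or removed from the oxide, and is
    clamped to the range [g_min, g_max].
    """
    g_min: float = 1e-6  # siemens, hypothetical lower bound
    g_max: float = 1e-5  # siemens, hypothetical upper bound
    step: float = 1e-7   # siemens per pulse, hypothetical
    g: float = 1e-6      # present conductance, starts at g_min

    def pulse(self, direction: int) -> float:
        """Apply one pulse: +1 'inserts protons' (raises G), -1 removes them."""
        if direction not in (1, -1):
            raise ValueError("direction must be +1 or -1")
        self.g = min(self.g_max, max(self.g_min, self.g + direction * self.step))
        return self.g

    def current(self, voltage: float) -> float:
        """Read the stored state non-destructively via Ohm's law, I = G * V."""
        return self.g * voltage


r = ProtonicResistor()
for _ in range(5):
    r.pulse(+1)  # five "insert" pulses raise the conductance by five steps
print(r.g, r.current(0.2))
```

The point of the sketch is that the stored value (the conductance) is changed by programming pulses and read back as a current, which is what lets such a resistor act as an adjustable synaptic weight.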


Programmable resistors like these are the key building blocks of analog deep learning: by repeating arrays of them, scientists can build a network of analog artificial “neurons” and “synapses.”
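A common way to see why arrays of resistors can compute is the idealized crossbar: each weight is stored as a conductance, inputs are applied as row voltages, Ohm's law gives each device's current, and Kirchhoff's current law sums those currents along each column, so the column currents form a matrix-vector product. The sketch below assumes ideal devices and wires (no wire resistance, noise, or nonlinearity); the function name `crossbar_mvm` is hypothetical and this is not a description of the MIT chip's layout.

```python
from typing import List


def crossbar_mvm(G: List[List[float]], V: List[float]) -> List[float]:
    """Ideal crossbar read-out (hypothetical helper).

    G[i][j] is the conductance (siemens) linking input row i to output column j;
    V[i] is the voltage (volts) applied to row i. Returns column currents (amperes).
    """
    if not G or len(V) != len(G):
        raise ValueError("need one voltage per non-empty row of G")
    cols = len(G[0])
    currents = [0.0] * cols
    for i, row in enumerate(G):
        if len(row) != cols:
            raise ValueError("all rows of G must have the same length")
        for j, g in enumerate(row):
            # Ohm's law per device; Kirchhoff's current law sums along column j.
            currents[j] += g * V[i]
    return currents


weights = [[1e-6, 2e-6],
           [3e-6, 4e-6]]
print(crossbar_mvm(weights, [0.1, 0.2]))
```

In a physical array every column sums its currents simultaneously, which is why analog hardware can perform the multiply-accumulate operations at the heart of neural networks in a single step rather than one multiplication at a time.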

Senior author Ju Li, the Battelle Energy Alliance Professor of Nuclear Science and Engineering, noted that action potentials in biological cells rise and fall on a timescale of milliseconds because the voltage difference involved, about 0.1 volts, is constrained by the stability of water. He added, “Here, we apply up to 10 volts across a special solid glass film of nanoscale thickness that conducts protons, without permanently damaging it. And the stronger the field, the faster the ionic devices.”
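Li's point rests on the average electric field across a film, E = V / d. The article gives the voltages (about 0.1 V versus up to 10 V) but not the film thickness, so the 10 nm value below is a hypothetical stand-in. Whatever thickness is assumed, applying 10 V instead of 0.1 V across the same film gives a 100-fold stronger field; the sketch does not model how device speed scales with that field, which the article does not quantify.

```python
def field_strength(voltage_v: float, thickness_m: float) -> float:
    """Average electric field E = V / d, in volts per metre."""
    if thickness_m <= 0:
        raise ValueError("thickness must be positive")
    return voltage_v / thickness_m


THICKNESS_M = 10e-9  # hypothetical 10 nm film; the article only says "nanoscale"

low = field_strength(0.1, THICKNESS_M)    # voltage scale cited for biological cells
high = field_strength(10.0, THICKNESS_M)  # upper voltage cited for the glass film
print(f"{low:.1e} V/m vs {high:.1e} V/m, ratio {high / low:.0f}")
```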

Because analog deep learning is faster and more energy-efficient, more developments like this are likely to follow.