AI translation has focused primarily on written languages. However, around half of the world’s 7,000+ living languages are primarily oral, i.e., they lack a standard or widely used writing system. As a result, machine translation tools for these languages cannot be built with standard techniques, which require large amounts of written text to train AI models.

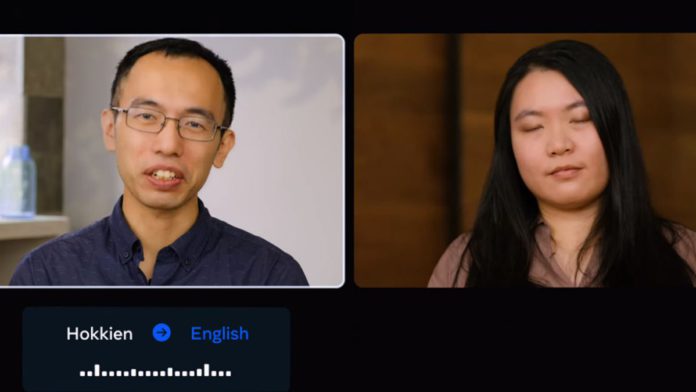

To address this challenge, Meta has built the first-ever AI-powered translation system for a primarily oral language, Hokkien, which is widely spoken within the Chinese diaspora. The system allows Hokkien speakers to converse with English speakers.

The open-sourced AI translation system is part of Meta’s Universal Speech Translator (UST) project, which is developing new AI methods intended to eventually enable real-time speech-to-speech translation for all extant languages. Meta believes that spoken communication can help break down barriers and bring people closer together wherever they are. Mark Zuckerberg recently announced that the company plans to build a universal language translator for the metaverse.


To develop the new system, Meta’s AI researchers had to overcome many challenges that go beyond those of traditional machine translation, including data gathering, model design, and evaluation. Meta is open-sourcing not only its Hokkien translation models but also the evaluation datasets, so that others can reproduce and build on its work.

The techniques can also be extended to other written and unwritten languages. In addition, Meta is releasing SpeechMatrix, a large corpus of speech-to-speech translations mined with LASER, Meta’s data mining technique. With it, researchers will be able to create their own speech-to-speech translation (S2ST) systems and build on Meta’s work.