Researchers from École Normale Supérieure (ENS) in Paris, Ludwig-Maximilians-Universität München, and the University of California, Riverside developed a large language model that can respond to philosophical questions in a style very similar to that of a particular philosopher.

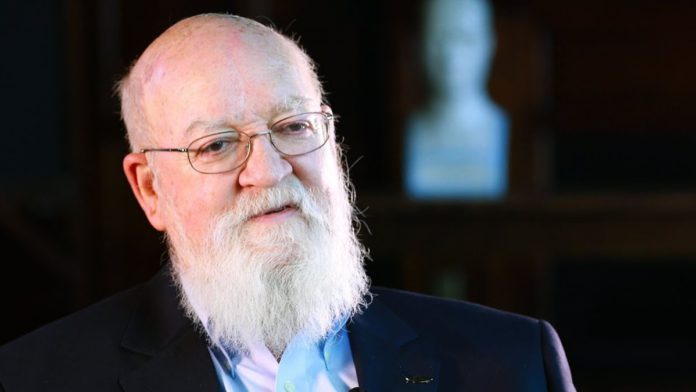

The team fine-tuned OpenAI’s GPT-3 language model on the work of philosopher Daniel C. Dennett and found that the model could generate answers closely resembling those of the real philosopher. The fine-tuning used Dennett’s earlier writings, so that when predicting the next word in a phrase the model gives the philosopher’s typical word-usage patterns more weight than other word patterns.
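The idea of giving one author's word patterns extra weight in next-word prediction can be illustrated with a toy sketch. This is not how GPT-3 is fine-tuned (that adjusts the weights of a neural network); it is a simple bigram counter, and the sample texts, function names, and the `author_weight` parameter are all made up for illustration.

```python
from collections import Counter, defaultdict

def bigram_counts(text):
    """Count how often each word follows each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probs(base, author, prev, author_weight):
    """Mix general and author counts, scaling the author's counts by author_weight."""
    mixed = Counter()
    for word, n in base[prev].items():
        mixed[word] += n
    for word, n in author[prev].items():
        mixed[word] += author_weight * n
    total = sum(mixed.values())
    return {w: c / total for w, c in mixed.items()} if total else {}

# Hypothetical stand-ins for a general corpus and an author's writings.
general_text = "the mind is a mystery and the mind is a machine and the mind is a mystery"
author_text = "the mind is a process"

base = bigram_counts(general_text)
author = bigram_counts(author_text)

# Probability of each word following "a", without and with the author's extra weight.
before = next_word_probs(base, author, "a", author_weight=0.0)
after = next_word_probs(base, author, "a", author_weight=5.0)
print(before)
print(after)
```

With the extra weight applied, the word the author uses after "a" goes from never being predicted to being the most likely continuation, which is the effect the fine-tuning is described as having on the real model's output.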

To assess the model’s performance, the researchers posed questions to it and compared its responses with those the actual philosopher gave. They asked Dennett ten philosophical questions and then posed the same questions to their language model, collecting four responses for each question without cherry-picking, that is, without selecting only the best answers.

They then tested how well 425 human participants could distinguish Dennett’s answers from the computer-generated ones. Strikingly, philosophy experts and blog readers correctly identified Dennett’s answers only around half the time.

By contrast, only 20% of participants with little to no philosophical training did so. These results suggest that a fine-tuned GPT-3 model can come very close to speaking in a particular philosopher’s voice.
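The 20% figure is easier to read against a chance baseline. The short calculation below assumes that each question showed Dennett’s answer alongside the four model-generated answers; the article does not describe the quiz layout, so that setup is an assumption.

```python
from fractions import Fraction

model_answers_per_question = 4    # stated in the article
dennett_answers_per_question = 1  # assumption: one real answer shown per question

options = model_answers_per_question + dennett_answers_per_question
chance_rate = Fraction(dennett_answers_per_question, options)

# Rate expected from a participant who picks one of the options at random.
print(f"Chance of picking Dennett's answer by guessing: {float(chance_rate):.0%}")
```

Under that assumption, a score near 20% is what random guessing would produce, while a score around half is well above chance but still far from reliable identification.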