A new study proposes a deep neural network-based forward design approach that enables an efficient search for superior materials lying far beyond the domain of the initial training set. The approach, funded by the National Research Foundation of Korea and the KAIST Global Singularity Research Project, compensates for the weak predictive power of neural networks in unseen domains by gradually updating the networks through active transfer learning and data augmentation.

Professor Seunghwa Ryu of the Department of Mechanical Engineering believes the approach can address a variety of optimization problems with enormous numbers of possible design configurations. Applied to a grid composite optimization problem, the framework proved efficient, producing designs close to the global optimum. The study was reported in npj Computational Materials last month.

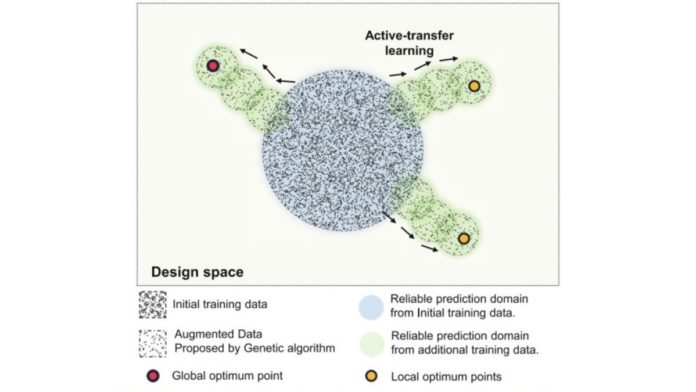

“As neural networks have weak predictive power, the primary intent was to mitigate underlying limitations for the training set of material or structure design,” said Professor Ryu. To explore vast design spaces, researchers have investigated neural network-based generative models as an inverse design method. However, conventional generative models have limited application because they cannot access data outside the range of their training sets. Even the advanced generative models devised to overcome this limitation still suffer from weak predictive power in unseen domains.

To overcome these issues, Professor Ryu’s team, in collaboration with researchers from Professor Grace Gu’s group at UC Berkeley, devised a method that simultaneously expands the domain in which a deep neural network predicts reliably and identifies the optimal design by iterating three key steps:

- A genetic algorithm finds a few candidates close to the training set by recombining the superior designs in the training set.

- Verify whether the candidates actually have improved properties, and expand the training set by duplicating the verified designs through data augmentation.

- Expand the reliable prediction domain by updating the neural network with the new superior designs via transfer learning.

Because the expansion proceeds along a relatively narrow but correct path toward the optimal design, the framework searches the design space efficiently.
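The three-step loop can be illustrated with a toy Python sketch. Everything in it is an assumption made for illustration, not the study's implementation: a 4×4 binary grid (1 = stiff cell, 0 = soft cell), a hypothetical `oracle` function standing in for the expensive evaluation that verifies candidates, a linear surrogate trained by stochastic gradient descent in place of the deep neural network, warm-starting from the previous weights as a simple stand-in for transfer learning, and a left-right mirror flip as the data-augmentation method (valid only because the toy oracle is mirror-symmetric by construction). "Improved" is taken here to mean better than the best design already in the training set.

```python
import random

N = 4              # hypothetical grid size: N x N cells
CELLS = N * N      # each cell is 1 (stiff material) or 0 (soft material)


def oracle(design):
    """Hypothetical stand-in for the expensive ground-truth evaluation
    (e.g. a simulation). Left-right mirror symmetric by construction."""
    score = 0.0
    for idx, cell in enumerate(design):
        row, col = divmod(idx, N)
        score += (1.0 + row) * cell              # stiff cells in lower rows count more
        if col + 1 < N and cell and design[idx + 1]:
            score -= 0.5                         # penalize horizontally adjacent stiff cells
    return score


def predict(w, design):
    """Linear surrogate (stand-in for the deep neural network); w[-1] is the bias."""
    return w[-1] + sum(wi * x for wi, x in zip(w, design))


def fit(w, data, epochs, lr=0.01):
    """SGD on squared error, starting from weights w. Reusing the previous
    weights is a simple warm start standing in for transfer learning."""
    w = list(w)
    for _ in range(epochs):
        for design, y in data:
            err = predict(w, design) - y
            for i, x in enumerate(design):
                w[i] -= lr * err * x
            w[-1] -= lr * err
    return w


def mirror(design):
    """Left-right flip of the grid (assumes the property is invariant to it)."""
    return [design[r * N + (N - 1 - c)] for r in range(N) for c in range(N)]


def crossover_and_mutate(a, b, rng, p_mut=0.05):
    """One-point crossover of two parent designs, then random bit flips."""
    cut = rng.randrange(1, CELLS)
    child = a[:cut] + b[cut:]
    return [1 - x if rng.random() < p_mut else x for x in child]


def active_transfer_loop(train, rounds=5, n_children=200, n_pick=3, seed=0):
    rng = random.Random(seed)
    w = fit([0.0] * (CELLS + 1), train, epochs=200)
    for _ in range(rounds):
        # Step 1: breed offspring of the current top designs and let the
        # surrogate rank them; offspring stay close to the training set.
        parents = sorted(train, key=lambda item: item[1], reverse=True)[:10]
        seen = {tuple(d) for d, _ in train}
        children = []
        for _ in range(n_children):
            (a, _), (b, _) = rng.sample(parents, 2)
            child = crossover_and_mutate(a, b, rng)
            if tuple(child) not in seen:
                seen.add(tuple(child))
                children.append(child)
        children.sort(key=lambda d: predict(w, d), reverse=True)

        # Step 2: verify only the top few with the oracle; keep genuine
        # improvements and augment each one with its mirror image.
        best = max(y for _, y in train)
        new_data = []
        for cand in children[:n_pick]:
            y = oracle(cand)
            if y > best:
                new_data.append((cand, y))
                if mirror(cand) != cand:
                    new_data.append((mirror(cand), y))

        # Step 3: fine-tune the surrogate on the expanded training set.
        if new_data:
            train = train + new_data
            w = fit(w, train, epochs=50)
    return train, w


rng = random.Random(42)
initial = []
for _ in range(20):
    d = [1 if rng.random() < 0.3 else 0 for _ in range(CELLS)]
    initial.append((d, oracle(d)))

final, weights = active_transfer_loop(initial)
print("best before:", max(y for _, y in initial), "best after:", max(y for _, y in final))
```

Because only a handful of candidates per round reach the oracle, the number of expensive evaluations stays small, and the surrogate is only trusted to rank offspring that sit a crossover and a few bit flips away from designs it has already seen.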

Neural network-based techniques are data-hungry. They become inefficient and suffer from weak predictive power when the optimal configuration of materials and structures lies far from the initial training set. However, deep neural network models tend to predict reliably for data points that lie within or near the domain of the training set. Because this method offers an efficient way to gradually expand that reliable prediction domain, it should be of greatest benefit for design problems involving huge datasets that are time-consuming and expensive to generate.
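The notion of a reliable prediction domain can be made concrete in a small sketch. The article does not say how the study measures closeness to the training set; the Hamming-distance criterion and the hypothetical helper `within_reliable_domain` below are illustrative assumptions for binary grid designs (1 = stiff cell, 0 = soft cell).

```python
def hamming(a, b):
    """Number of grid cells in which two binary designs differ."""
    return sum(x != y for x, y in zip(a, b))


def within_reliable_domain(candidate, training_designs, radius=2):
    """Hypothetical trust check: True if the candidate lies within `radius`
    cell flips of at least one training design."""
    return min(hamming(candidate, d) for d in training_designs) <= radius


train = [[0, 0, 0, 0], [1, 1, 0, 0]]
print(within_reliable_domain([1, 1, 1, 1], train, radius=1))  # False: nearest design is 2 flips away

# Adding a verified design nearby moves the trusted region outward.
train.append([1, 1, 1, 0])
print(within_reliable_domain([1, 1, 1, 1], train, radius=1))  # True: now only 1 flip away
```

Each verified design added to the training set enlarges the region this check trusts, a miniature version of the gradual expansion toward distant optimal designs.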

Researchers expect this framework to be applicable to a wide range of optimization problems with vast design spaces in other science and engineering disciplines. The method avoids the risk of getting stuck in local minima while progressively expanding the reliable prediction domain toward the target design. The research teams are currently applying the framework to design tasks involving metamaterial structures, segmented thermoelectric generators, and optimal sensor distributions. “From these sets of continual studies, we expect to recognize the potential factors of the suggested algorithm. Ultimately, we want to formulate efficient machine learning-based design approaches,” explained Professor Ryu.