OpenAI’s Contrastive Language–Image Pre-training (CLIP) learns image representations from natural language supervision. The idea is to learn to recognize a wide variety of visual concepts in images and associate them with their names. As a result, CLIP can be benchmarked against current state-of-the-art models on many downstream tasks without any task-specific fine-tuning.

The OpenAI researchers did not use curated, labeled training data for CLIP. Instead, they collected 400 million image–text pairs (images and their captions) from the internet, data that is highly varied and highly noisy. This is a complete departure from the prevalent practice of training computer vision models on standard labeled datasets, with each model specializing in a single task.

CLIP is a simplified version of the ConVIRT architecture. For training efficiency, the researchers adopted a contrastive objective that connects text with images: given an image, the model must predict which of 32,768 randomly sampled captions was actually paired with it in the dataset. After pre-training, natural language is used to reference the learned visual concepts, which enables zero-shot transfer to downstream tasks.
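The contrastive objective can be sketched as a symmetric cross-entropy over a batch of N image–caption pairs: each image must pick out its own caption among the N captions in the batch, and each caption must pick out its own image. The sketch below is a minimal, pure-Python illustration, not OpenAI’s implementation. It assumes the image and text embeddings have already been produced by the two encoders (not shown), and it uses a fixed temperature of 0.07, whereas CLIP learns the temperature during training. All function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    # Scale a (non-zero) vector to unit length so dot products become cosine similarities.
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def log_softmax(row: List[float]) -> List[float]:
    # Numerically stable log-softmax: subtract the max before exponentiating.
    m = max(row)
    log_sum = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_sum for x in row]


def clip_contrastive_loss(
    image_emb: List[Vector], text_emb: List[Vector], temperature: float = 0.07
) -> float:
    """Symmetric cross-entropy over an N x N image-text similarity matrix.

    Pair i (image i, caption i) is the correct match, so the targets lie on
    the diagonal. The temperature is fixed here (an assumption); CLIP learns it.
    """
    imgs = [l2_normalize(v) for v in image_emb]
    txts = [l2_normalize(v) for v in text_emb]
    n = len(imgs)
    # logits[i][j]: cosine similarity of image i and caption j, divided by the temperature.
    logits = [
        [sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature for j in range(n)]
        for i in range(n)
    ]
    # Image -> text: each image classifies which of the n captions is its own.
    loss_images = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # Text -> image: each caption classifies which of the n images is its own.
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_texts = -sum(log_softmax(columns[j])[j] for j in range(n)) / n
    return (loss_images + loss_texts) / 2
```

When every image embedding points at its own caption, the loss approaches zero; when captions are swapped, it grows large. Treating the batch’s other captions as negatives is what lets the objective scale to very large batches without predicting caption text word by word.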


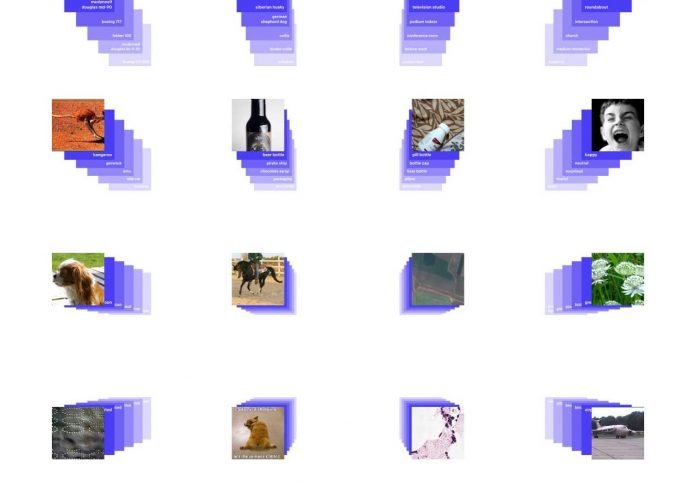

After pre-training, CLIP performs competitively on over 30 existing computer vision datasets, spanning tasks such as OCR, action recognition in videos, geo-localization, and many types of fine-grained object classification, often without any dataset-specific training. The researchers take this as evidence that zero-shot evaluation of task-agnostic models is much more representative of a model’s capability.

The most notable result is the model’s robustness to distribution shift, including naturally occurring adversarial images. Since the model is not directly optimized for any benchmark, it learns much richer representations that hold up better on such data.

Although the model is versatile, the researchers report the following limitations:

- It struggles with more abstract or systematic tasks, such as counting the number of objects in an image, and with more complex tasks, such as estimating how close the nearest object in a photo is

- Zero-shot CLIP struggles with very fine-grained classification, such as telling the difference between car models, variants of aircraft, or flower species

- CLIP generalizes poorly to images that are not covered by its pre-training dataset

- CLIP’s zero-shot classifiers can be sensitive to wording or phrasing and sometimes require trial and error “prompt engineering” to perform well.
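To see why wording matters, it helps to look at how a zero-shot classifier is built: each class name is inserted into one or more prompt templates (the CLIP paper uses templates such as “A photo of a {label}.”), the text embeddings of those prompts serve as the class weights, and an image is assigned to the class whose embedding is most similar. Averaging the embeddings of several templates (prompt ensembling) is one way to reduce sensitivity to any single phrasing. The sketch below is illustrative only: `toy_encode_text`, the template list, and the embeddings are hypothetical stand-ins for a real text encoder and real image features.

```python
import math
from typing import Callable, Dict, List, Sequence

Vector = List[float]

# Hypothetical prompt templates; "{}" is replaced by the class name.
TEMPLATES = ["a photo of a {}.", "a blurry photo of a {}.", "a drawing of a {}."]


def l2_normalize(v: Vector) -> Vector:
    # Scale a (non-zero) vector to unit length.
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def toy_encode_text(text: str) -> Vector:
    # Hypothetical stand-in for a text encoder: one dimension per keyword,
    # plus a third dimension that depends on the template wording.
    return [
        1.0 if "dog" in text else 0.0,
        1.0 if "cat" in text else 0.0,
        0.5 if "photo" in text else 0.2,
    ]


def build_class_embeddings(
    class_names: Sequence[str],
    templates: Sequence[str],
    encode_text: Callable[[str], Vector],
) -> Dict[str, Vector]:
    """Prompt ensembling: average the normalized embeddings of every filled-in template."""
    classifier: Dict[str, Vector] = {}
    for name in class_names:
        embs = [l2_normalize(encode_text(t.format(name))) for t in templates]
        mean = [sum(col) / len(embs) for col in zip(*embs)]
        classifier[name] = l2_normalize(mean)
    return classifier


def zero_shot_predict(image_emb: Vector, classifier: Dict[str, Vector]) -> str:
    # Pick the class whose text embedding has the highest cosine similarity to the image.
    img = l2_normalize(image_emb)
    scores = {name: sum(a * b for a, b in zip(img, emb)) for name, emb in classifier.items()}
    return max(scores, key=scores.get)
```

For example, `build_class_embeddings(["dog", "cat"], TEMPLATES, toy_encode_text)` yields one unit vector per class, and an image embedding closer to the “dog” direction is labeled `"dog"`. Changing the templates changes the class vectors, which is why phrasing can shift zero-shot accuracy.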

To learn more about CLIP, have a look at the paper and the released code.