Deepfakes have been spreading across the internet for the past couple of years, so it is no surprise that new AI-generated deepfake media surfaces every now and then. A recent study found that humans are unable to reliably distinguish between real faces and AI-generated ones, an indication of how ‘realistic’ deepfakes have become.

In the last several years, generative AI algorithms have become quite proficient at creating human faces. Thanks to neural networks and deep learning, computers can already generate images of human faces that closely resemble real ones. On the one hand, this can be beneficial: it allows low-budget businesses to make commercials, decentralizing access to important resources. On the other hand, AI-generated faces can be used for fraud, propaganda, disinformation, and even revenge pornography.

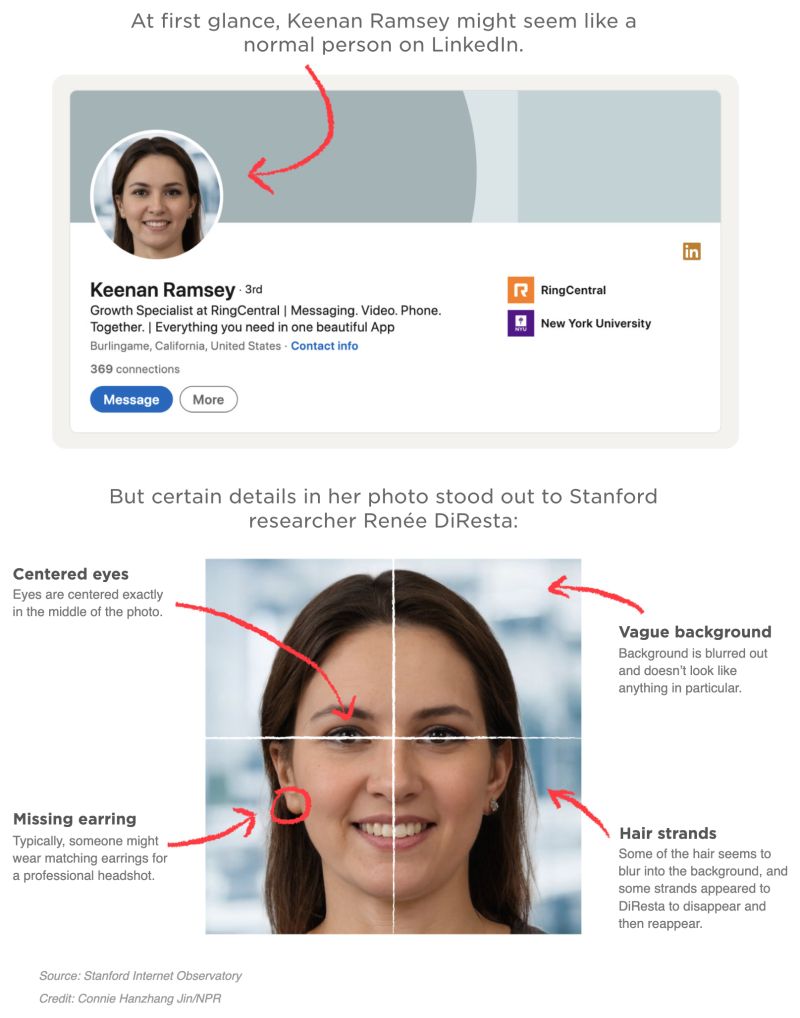

In the latest development, researchers at the Stanford Internet Observatory discovered more than 1,000 LinkedIn profiles using facial images that appeared to be AI-generated. One of the researchers, Renée DiResta, had been approached on LinkedIn by someone named Keenan Ramsey with a sales pitch. Ramsey’s message read: “Quick question — have you ever considered or looked into a unified approach to message, video, and phone on any device, anywhere?”

DiResta initially thought the message was legitimate, but a closer look at Ramsey’s profile picture caught her off guard. She noticed that Ramsey’s eyes were perfectly centered in the image, a hallmark of many AI-generated faces. Such images often share other telltale signs: the background is frequently heavily blurred, with no recognizable objects such as curtains or bookcases, and individual strands of hair may be irregular, seeming to end abruptly only to reappear a little further on. DiResta also observed that Ramsey wore only one earring, which is unusual since earrings are typically worn in pairs. The profile claimed a bachelor’s degree in business administration from New York University and listed CNN, Unilever, Amazon, and philanthropist Melinda French Gates among its interests.

This prompted DiResta and her colleague Josh Goldstein to investigate how common AI-generated, or deepfake, profile images are on LinkedIn.

According to NPR, many of these phony identities serve as a marketing tactic to generate interest in legitimate businesses. A fake profile contacts a real person, and if that person shows interest, a real salesperson takes over the conversation. The roughly 1,000 fictitious profiles named more than 70 businesses, several of which told NPR that they had hired outside marketers to increase sales. One likely motive for creating fake profiles is to get around LinkedIn’s limits on how many messages a single account can send.

Most of the fake profiles listed job titles such as ‘Business Development Manager’, ‘Sales Development Executive’, ‘Growth Manager’, and ‘Demand Creation Specialist’ at well-known companies. However, none of the companies contacted during the investigation had any record of these ‘individuals.’ NPR also reached out to 28 colleges about 57 of the profiles; none of the 21 schools that replied could find any record of the claimed graduates.

Fake accounts are a common way to spread particular messages, deceive individuals, and draw attention to specific issues. Countries such as China and Saudi Arabia have reportedly already used such tactics for various purposes.

On LinkedIn, the fake profiles appear to be used mainly to drum up interest in certain businesses. AirSales, one of the outside marketing vendors involved, says it hires independent freelancers to provide marketing services and that these contractors are free to create LinkedIn accounts “at their own discretion.”

According to AirSales CEO Jeremy Camilloni, “to my knowledge, there are no explicit rules for profile pictures or the use of avatars. This is really rather prevalent among LinkedIn’s tech users.”

RingCentral, one of the firms that appeared to be benefiting from the fake accounts (including Ramsey’s), declined to comment on its involvement with AI-generated deepfakes. The company did, however, say that it has no record of Ramsey as an employee.

The popularity of websites like ThisPersonDoesNotExist.com shows how easy it has become in recent years to create deepfakes with GANs (generative adversarial networks). These networks are trained on massive amounts of data (in this case, large numbers of photos of real people), learn the patterns in that data, and then generate new images that reproduce those patterns.

One reason GANs are so effective is that they test themselves. One component of the network, the generator, produces faces; the other, the discriminator, tries to tell those generated faces apart from the real photos in the training data. The generator’s task is to convince the discriminator that its pictures are authentic. As the discriminator gets better at spotting AI-generated images, the generator produces increasingly realistic ones to fool it. Think of it like a pastry chef who keeps reworking a recipe until a demanding critic can no longer tell it apart from the real thing.
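To make the generator-versus-discriminator loop concrete, here is a deliberately tiny sketch in Python. It is not how ThisPersonDoesNotExist.com or any face model actually works: instead of photos, the assumed "real data" is a stream of single numbers drawn from a normal distribution centered on 4.0, the generator is a linear function of random noise, and the discriminator is a single logistic unit. The function name `train_toy_gan` and all hyperparameters are hypothetical choices for illustration.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function (avoids overflow in math.exp).
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=5000, lr=0.01, seed=0):
    """Toy 1-D GAN trained by alternating gradient steps (hypothetical example)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: x_fake = a * z + b, with noise z ~ N(0, 1)
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c) = P(x is real)
    for _ in range(steps):
        x_real = rng.gauss(4.0, 0.5)  # assumed "real data" distribution
        z = rng.gauss(0.0, 1.0)
        x_fake = a * z + b

        # Discriminator step: minimize -log D(real) - log(1 - D(fake)).
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        grad_w = -(1.0 - d_real) * x_real + d_fake * x_fake
        grad_c = -(1.0 - d_real) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: minimize -log D(fake), i.e. try to fool the updated critic.
        d_fake = sigmoid(w * x_fake + c)
        grad_x = -(1.0 - d_fake) * w  # d(loss)/d(x_fake)
        a -= lr * grad_x * z  # chain rule: d(x_fake)/da = z
        b -= lr * grad_x      # chain rule: d(x_fake)/db = 1
    return a, b


a, b = train_toy_gan()
print(f"generator offset b = {b:.2f} (real data centered on 4.0)")
```

The generator never sees the real data directly; it only follows the discriminator's gradient, yet its offset `b` is pushed from 0 toward the real mean because every discriminator update reveals which direction looks "more real." A one-unit discriminator cannot judge the spread of the data, which is one reason practical face generators use deep networks for both components.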

Although people could simply create LinkedIn accounts using stock photographs or photos taken from other social networks, using AI to generate a face provides an extra layer of cover. Because each generated image is unique, a reverse image search cannot trace it back to a source, which would otherwise quickly expose the profile as fake.
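Real reverse image search engines use far more sophisticated matching, but the core idea can be sketched with a simple perceptual hash. The Python below uses average hashing (aHash) on tiny 8x8 grayscale images stored as nested lists; the pixel data, the one-entry index, and helper names such as `find_source` are hypothetical stand-ins, not any search engine's actual method.

```python
import random


def average_hash(pixels):
    """Average hash (aHash) of an 8x8 grayscale image given as a list of lists of ints.

    Each of the 64 bits records whether a pixel is brighter than the image's mean.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(h1, h2):
    """Number of differing bits between two hashes."""
    return bin(h1 ^ h2).count("1")


def find_source(query, index, max_distance=5):
    """Return names of indexed images whose hash is within max_distance bits of the query."""
    qh = average_hash(query)
    return [name for name, h in index.items() if hamming(qh, h) <= max_distance]


rng = random.Random(42)


def random_image():
    # Stand-in for real pixel data; values 0-199 leave headroom for brightening below.
    return [[rng.randrange(200) for _ in range(8)] for _ in range(8)]


# Hypothetical index of previously published photos (e.g. a stock photo library).
stock_photo = random_image()
index = {"stock_photo_123": average_hash(stock_photo)}

# Case 1: a fake profile reuses the stock photo, lightly brightened.
# Shifting every pixel by the same amount shifts the mean equally, so the hash is unchanged.
reused_photo = [[p + 20 for p in row] for row in stock_photo]

# Case 2: a freshly generated face that was never published anywhere.
generated_face = random_image()

print(find_source(reused_photo, index))    # ['stock_photo_123']
print(find_source(generated_face, index))  # expected: [] (nothing to match against)
```

A reused photo, even after light edits, lands within a few bits of its indexed original and is found. A newly generated face has no indexed original at all, which is exactly the gap that deepfake profile pictures exploit.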

These deepfake LinkedIn profile pictures highlight how a technology already exploited to spread disinformation and hate online has made its way into the corporate world. According to a community report on LinkedIn’s transparency page, the company removed more than 15 million fake accounts in the first half of 2021, most of them stopped by automated defenses. Following the Stanford researchers’ warnings, LinkedIn said it investigated and deleted the profiles that violated its policies, including those with falsified information. LinkedIn did not share any details about how the investigation was carried out.

This isn’t the first time a bot network using AI-generated faces has appeared on social media. In 2021, Twitter removed multiple accounts purportedly belonging to Amazon warehouse employees, many of whose profile images appeared to have been created with deepfake tools. Amazon denied any connection to, or responsibility for, the personas and their tweets.

Rather than angling for an indirect sale, Twitter bot networks such as the purported Amazon employee accounts more often spread disinformation and propaganda on behalf of companies and governments.

Though fake profiles built on AI-generated images may not sound alarming, they hint at the chaos deepfakes could cause in the future. Meanwhile, some countries, such as China, are already moving to regulate the creation and spread of deepfakes on the internet and in mainstream media. In January, China’s internet watchdog, the Cyberspace Administration of China (CAC), announced new guidelines for content providers that modify face and voice data, as part of the country’s fight against “deepfakes.”