Meta's Fundamental AI Research (FAIR) team has announced Habitat 3.0, the Habitat Synthetic Scenes Dataset (HSSD-200), and HomeRobot, three releases aimed at making AI-powered assistants more capable in the physical world. Meta aims to develop socially intelligent robots that adapt to human preferences and assist with everyday tasks, underscoring the role of embodied AI in shaping the future of AR and VR experiences.

The tools target challenges that AI-powered assistants commonly face, including scalability, standardized benchmarking, collaborative robotics, and safety.

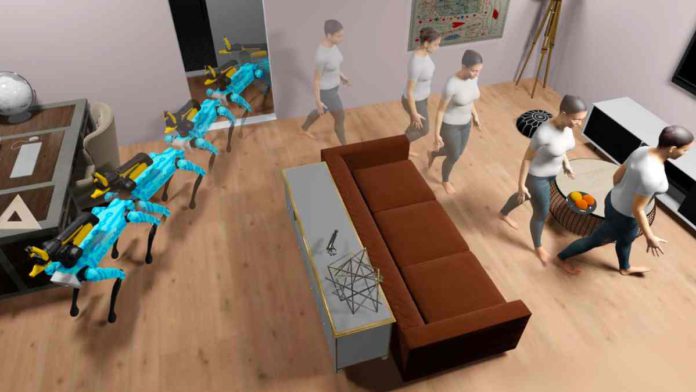

FAIR’s Habitat 3.0 enables large-scale training for human-robot interaction in realistic indoor environments. AI agents trained using these simulations can demonstrate collaborative behaviors, from navigating narrow corridors to efficiently dividing tasks.

HSSD-200 addresses critical issues in training robots in simulated environments. It offers highly detailed 3D environments, fine-grained semantic categorization, and efficient asset compression. FAIR's experiments indicate that agents trained on HSSD-200, a smaller but higher-quality dataset, can achieve comparable or superior performance in real-world scenes.

To advance collaborative robotics, FAIR built HomeRobot, a software stack for physical and simulated autonomous manipulation. It consists of benchmarks, baseline behaviors, and interfaces that focus on tasks such as delivering requested objects.

Habitat 3.0, HSSD-200, and HomeRobot represent a significant step toward socially intelligent embodied AI agents that can collaborate with and assist humans in their everyday lives. FAIR's work could help bridge the gap between simulation and the physical world, opening new frontiers in human-robot collaboration and interaction.