OpenAI has released Shap-E, a conditional generative model for 3D assets. According to the paper, conventional 3D generative models produce a single output representation, whereas Shap-E directly generates the parameters of implicit functions that can be rendered as both textured meshes and neural radiance fields (NeRFs), all from a single text prompt.

Shap-E is one of the few OpenAI projects released as open source. The model weights, inference code, and an example are available on GitHub.

According to the paper, Shap-E is trained in two phases. First, an encoder is trained to deterministically map 3D assets into the parameters of an implicit function. Second, a conditional diffusion model is trained on the outputs of that encoder.
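The two phases can be illustrated with a toy data flow. This is only a minimal sketch, not OpenAI's implementation: the sphere-based "encoder", the function names (`encode`, `implicit_sdf`, `noised_training_pair`), and the noise parameter `alpha_bar` are all assumptions made for illustration, and no neural network is actually trained here.

```python
import math
import random


def _dist(a, b):
    """Euclidean distance between two 3D points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def encode(points):
    """Phase 1 (toy stand-in): deterministically map a 3D asset, given as a
    point cloud, to the parameters of an implicit function.

    Here the parameters are [cx, cy, cz, r]: the centre and radius of a
    sphere. A real encoder would be a learned network with a far larger
    parameter vector.
    """
    n = len(points)
    centre = [sum(p[i] for p in points) / n for i in range(3)]
    radius = sum(_dist(p, centre) for p in points) / n
    return centre + [radius]


def implicit_sdf(params, query):
    """Evaluate the implicit function at a query point.

    Returns a signed distance: negative inside the shape, positive outside.
    """
    *centre, radius = params
    return _dist(query, centre) - radius


def noised_training_pair(latent, alpha_bar, rng):
    """Phase 2 (toy stand-in): turn one encoder output into a diffusion
    training example using the standard forward noising step
    x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * eps.

    Returns (x_t, eps). A denoising model would be trained to undo this
    noise, conditioned on the text prompt.
    """
    eps = [rng.gauss(0.0, 1.0) for _ in latent]
    x_t = [
        math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
        for x, e in zip(latent, eps)
    ]
    return x_t, eps


# Six points on a unit sphere stand in for a 3D asset.
asset = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
latent = encode(asset)
x_t, eps = noised_training_pair(latent, alpha_bar=0.5, rng=random.Random(0))
```

The point of the sketch is the ordering: the diffusion model never sees raw 3D assets, only the implicit-function parameters that the encoder emits, which is why the encoder has to be trained first.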

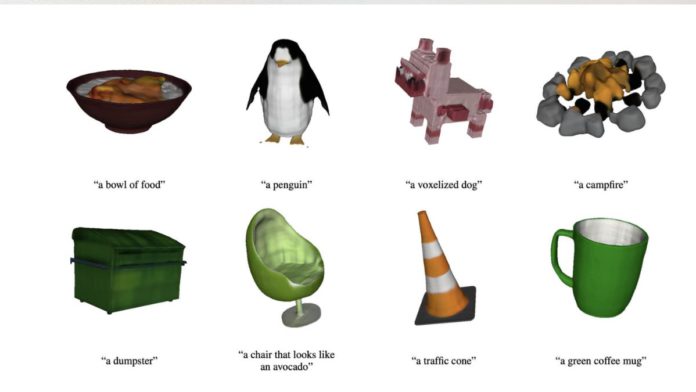

The paper states that, when trained on a large dataset of paired 3D and text data, the models can generate complex and diverse 3D assets in a matter of seconds.

Notably, Shap-E converges faster than Point-E, OpenAI's earlier point-cloud model, and reaches comparable or better sample quality, even though it models a higher-dimensional, multi-representation output space.

The generated 3D objects can look rough and low in detail, but each one is produced from a single sentence. The paper also notes a second limitation: the model currently struggles to bind multiple attributes to different objects, so it works best with single-object prompts and simple attributes.