Ben Zhao, a computer science professor at the University of Chicago, has developed “Nightshade,” a tool that could mark a watershed moment for AI-generated art.

The ongoing conflict between artists and AI companies is well known. The Hollywood writers' strike and the mounting lawsuits that painters, musicians, and other creators have filed against generative AI companies, alleging that their work was unlawfully scraped from the internet to train AI models, have both shed light on the issue's complexities.

So how would Nightshade stop these AI giants from scraping data from the internet? The answer is not prevention but poisoning. Nightshade is meant for artists who want to post their work online but do not want AI companies to scrape it without permission or royalties.

What exactly does Nightshade do? The tool subtly alters an image's pixels so that image-generating AI models trained on it eventually learn the wrong names for the things they are looking at. This technique is known as “data poisoning.”


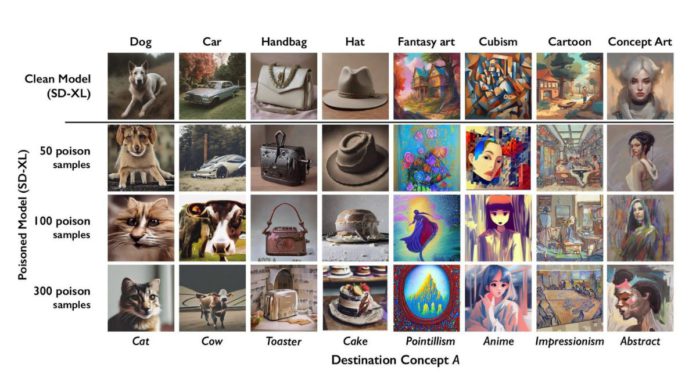

Zhao and his team poisoned images of dogs by embedding information in their pixels that tricks an AI model into perceiving them as cats. The researchers tested this attack on Stable Diffusion’s latest models. After poisoning just 50 images of dogs and then prompting Stable Diffusion to generate dog images, the outputs turned strange, producing creatures with extra limbs and cartoonish features. With 300 poisoned samples, an attacker could successfully manipulate Stable Diffusion into generating dog images that looked like cats.

Nightshade’s poisoning method is also hard to defend against, because AI developers would have to painstakingly identify and remove every image containing altered pixels. These alterations are deliberately designed to be invisible to the human eye and may be difficult even for data-scraping software to detect.

The team plans to release Nightshade as an open-source tool so that others can experiment with it and build their own variations. Zhao explains that the tool becomes more potent as more people use it: large AI models are trained on datasets that can contain billions of images, so the more poisoned images that find their way into the training data, the greater the technique’s potential impact.
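To make that “more poison, more impact” idea concrete, here is a toy Python sketch of data poisoning in general. It is not Nightshade’s algorithm, which the article does not describe in detail. It assumes a hypothetical model that learns each concept as the average of 2-D feature vectors captioned with that word; the feature coordinates, the 1,000 clean dog samples, and the names `learn_concept` and `build_dataset` are all illustrative assumptions. Poisoned samples are captioned “dog” but carry cat-like features, so the learned “dog” concept drifts toward “cat” as their number grows.

```python
# Toy illustration of data poisoning in general; NOT Nightshade's algorithm.
# Assumption: a hypothetical "model" that learns each concept as the mean
# 2-D feature vector of all training samples carrying that caption.

DOG = (0.0, 0.0)  # hypothetical feature location of genuine dog images
CAT = (1.0, 1.0)  # hypothetical feature location of genuine cat images


def learn_concept(samples, caption):
    """Return the mean feature vector of every sample tagged with `caption`."""
    feats = [feat for cap, feat in samples if cap == caption]
    return tuple(sum(f[i] for f in feats) / len(feats) for i in range(2))


def build_dataset(clean_dogs, poisoned):
    """Honest dog samples plus poisoned ones: captioned "dog", cat-like features."""
    return [("dog", DOG)] * clean_dogs + [("dog", CAT)] * poisoned


for n_poison in (0, 50, 300):
    concept = learn_concept(build_dataset(1000, n_poison), "dog")
    # x = 0.0 means a pure "dog" concept; x = 1.0 means it has become "cat".
    print(f"{n_poison:>3} poisoned -> dog concept at x = {concept[0]:.3f}")
# prints x = 0.000, 0.048 and 0.231 for 0, 50 and 300 poisoned samples
```

In this toy, the drift is simply the poisoned share of the “dog” samples, p / (n + p), which is why adding more poisoned images pushes the concept further. Real diffusion models are far more complex, so these numbers say nothing about how many samples Nightshade actually needs.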

So next time, all an artist needs to do before uploading an image online is run it through Nightshade, poisoning it so that AI models like DALL-E and Midjourney cannot identify what the picture actually shows, which makes the scraped data misleading and problematic for the system.