The University of Hong Kong has introduced Text2NeRF, a text-driven 3D scene synthesis system that combines a Neural Radiance Field (NeRF) with a pre-trained text-to-image diffusion model.

The researchers chose NeRF as the 3D representation because it models fine-grained, lifelike detail across a wide variety of scenes and can significantly reduce the artifacts produced by triangle meshes. Earlier methods such as DreamFusion guide 3D generation with semantic priors; Text2NeRF instead uses finer-grained image priors inferred from the diffusion model.

As a result, Text2NeRF can generate realistic textures and delicate geometric shapes in 3D scenes. A pre-trained text-to-image diffusion model serves as the image-level prior, which the researchers use to constrain NeRF optimization from scratch, without requiring additional 3D supervision or multiview training data.
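In general terms, constraining a NeRF with an image prior means rendering pixels from the radiance field and penalizing their difference from a target image. The sketch below illustrates that idea with the standard NeRF volume-rendering quadrature for a single ray, plus a mean-squared photometric loss against a prior image. It is a minimal illustration under our own assumptions, not Text2NeRF's implementation: the function names `render_ray` and `photometric_loss` are hypothetical, and the article does not specify the exact losses or their weighting.

```python
import math
from typing import Sequence, Tuple

Color = Tuple[float, float, float]


def render_ray(
    sigmas: Sequence[float], colors: Sequence[Color], ts: Sequence[float]
) -> Tuple[Color, float]:
    """Composite samples along one ray with the standard NeRF quadrature.

    sigmas: density at each sample; colors: RGB at each sample;
    ts: increasing sample distances along the ray.
    Returns the rendered RGB color and the expected termination depth.
    """
    n = len(ts)
    transmittance = 1.0  # fraction of light that survives to the current sample
    rgb = [0.0, 0.0, 0.0]
    depth = 0.0
    for i in range(n):
        # Interval to the next sample; the last interval is treated as very long.
        delta = ts[i + 1] - ts[i] if i + 1 < n else 1e10
        alpha = 1.0 - math.exp(-sigmas[i] * delta)  # opacity of this interval
        weight = transmittance * alpha
        for c in range(3):
            rgb[c] += weight * colors[i][c]
        depth += weight * ts[i]
        transmittance *= 1.0 - alpha
    return (rgb[0], rgb[1], rgb[2]), depth


def photometric_loss(rendered: Sequence[Color], prior: Sequence[Color]) -> float:
    """Mean squared error between rendered pixels and the prior image's pixels."""
    total = 0.0
    for r, p in zip(rendered, prior):
        total += sum((a - b) ** 2 for a, b in zip(r, p))
    return total / (3 * len(rendered))
```

For a ray whose only dense sample sits at distance 2 with color red, `render_ray([0.0, 100.0, 0.0], [(0, 0, 1), (1, 0, 0), (0, 1, 0)], [1.0, 2.0, 3.0])` returns a color of essentially `(1.0, 0.0, 0.0)` and a depth of about 2. In a full optimizer, the loss would be backpropagated into the network that predicts `sigmas` and `colors`, which this stdlib-only sketch omits.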

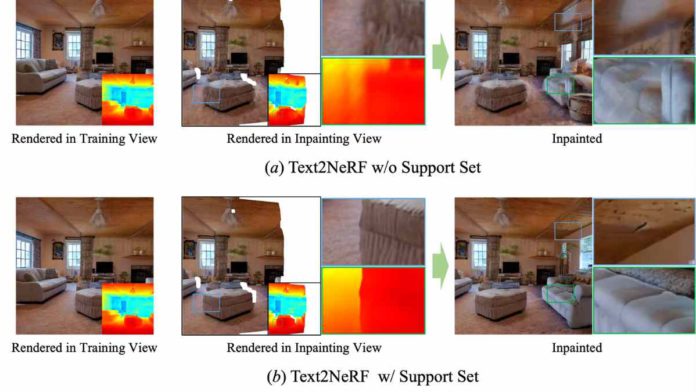

The parameters of the NeRF representation are optimized using both a content prior and a depth prior. Specifically, the researchers use the diffusion model to generate a text-related image as the content prior, and a monocular depth estimation method to provide the geometric prior of the constructed scene. To ensure consistency across multiple viewpoints, they also propose a progressive inpainting and updating (PIU) strategy for novel view synthesis of the 3D scene.
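The article does not describe PIU's internals, but one common ingredient of depth-guided view synthesis is easy to illustrate: lift a generated image into 3D using its estimated depth, reproject it into a new camera, and mark the pixels that no source pixel reaches. Those holes are the regions an inpainting model would fill before the scene is updated. The sketch below is a hypothetical simplification, not the authors' method: the function name `warp_to_novel_view`, the pinhole intrinsics `fx, fy, cx, cy`, and the translation-only camera motion are all our own assumptions.

```python
from typing import List, Optional, Tuple

Color = Tuple[float, float, float]


def warp_to_novel_view(
    image: List[List[Color]],
    depth: List[List[float]],
    fx: float, fy: float, cx: float, cy: float,
    translation: Tuple[float, float, float],
) -> Tuple[List[List[Optional[Color]]], List[List[bool]]]:
    """Forward-warp an RGB-D view into a translated pinhole camera.

    Returns the warped image (None where no source pixel lands) and a mask
    that is True at those holes, i.e. the regions an inpainter would fill.
    """
    h, w = len(image), len(image[0])
    tx, ty, tz = translation
    warped: List[List[Optional[Color]]] = [[None] * w for _ in range(h)]
    zbuf = [[float("inf")] * w for _ in range(h)]
    for v in range(h):
        for u in range(w):
            z = depth[v][u]
            if z <= 0:
                continue  # no valid depth estimate for this pixel
            # Unproject pixel (u, v) to a 3D point in the source camera frame.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            # Express the point in the novel camera frame (camera shifted by `translation`).
            xn, yn, zn = x - tx, y - ty, z - tz
            if zn <= 0:
                continue  # point lies behind the novel camera
            # Project into the novel view and round to the nearest pixel.
            un = round(fx * xn / zn + cx)
            vn = round(fy * yn / zn + cy)
            if 0 <= un < w and 0 <= vn < h and zn < zbuf[vn][un]:
                zbuf[vn][un] = zn  # keep the nearest surface (z-buffering)
                warped[vn][un] = image[v][u]
    holes = [[px is None for px in row] for row in warped]
    return warped, holes
```

With a one-row, three-pixel image at depth 1 and `fx = fy = 1`, `cx = cy = 0`, shifting the camera one unit to the right moves every pixel one column left and leaves a hole in the rightmost column. Iterating this warp, inpaint, and update cycle over successive viewpoints is one plausible reading of a "progressive" strategy; a real system would also handle camera rotation and splat points more carefully than nearest-pixel rounding.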

Because the method is general, Text2NeRF can generate a wide variety of 3D scenes, including artistic, indoor, and outdoor scenes. It can also produce 360-degree views and is not restricted to a limited view range. According to extensive experiments, Text2NeRF outperforms previous approaches both qualitatively and quantitatively.