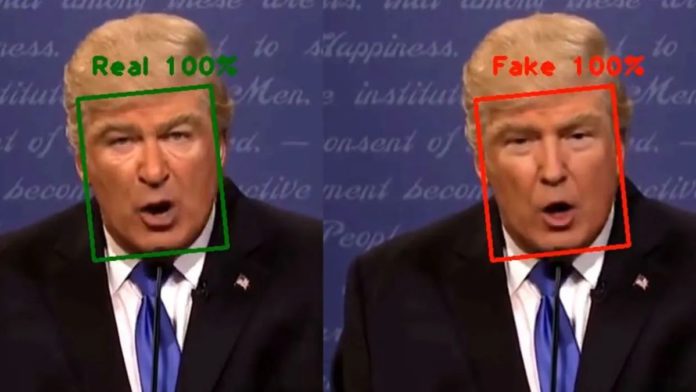

Every day brings headlines in which government bodies and privacy experts voice concerns about the misuse of deepfakes. For instance, Barack Obama never publicly called Donald Trump a “complete dipshit,” yet a YouTube video shows him doing exactly that. Actor Tom Cruise never held a solo dance-off, yet last year many TikTok users watched a deepfake version of him dancing and performing magic tricks. These are relatively benign examples of how misleading and manipulative deepfakes can be. To make matters worse, a recent study published in the Proceedings of the National Academy of Sciences suggests that people can no longer reliably tell AI-generated faces from real ones.

Dr. Sophie Nightingale of Lancaster University and Professor Hany Farid of the University of California, Berkeley, ran experiments in which participants were asked to distinguish faces generated by the state-of-the-art StyleGAN2 model from real faces, and to rate how trustworthy the faces seemed. The findings showed that synthetic faces are highly realistic and practically indistinguishable from real ones, and that people perceive them as more trustworthy than real faces.

For their experiments, the researchers recruited 315 untrained and 219 trained participants through a crowdsourcing website to test whether they could distinguish a selection of 400 synthetic faces from 400 photographs of real people. Each set contained 100 faces from each of four ethnic groups: White, Black, East Asian, and South Asian. They also recruited another 223 volunteers to rate the trustworthiness of a subset of the same faces on a scale from 1 (very untrustworthy) to 7 (very trustworthy).

In the first experiment, each of the 315 participants classified 128 faces, drawn from the pool of 800, as either real or fake. Their average accuracy was 48 percent, close to the 50 percent expected from pure guessing. In a second experiment, 219 new participants were given training on how to spot synthetic faces and received feedback on their answers. They classified 128 faces from the same pool of 800, but despite the training, their accuracy rose only to 59 percent.

The researchers next examined whether people’s perception of trustworthiness might help them detect fake images. A third group of participants was therefore asked to rate the trustworthiness of 128 faces taken from the same pool as in the first experiment. On average, synthetic faces were rated 7.7 percent more trustworthy than real faces, a statistically significant difference. Apart from a modest tendency for respondents to rate Black faces as more trustworthy than South Asian faces, ethnicity made little difference to the ratings.

The researchers were surprised by these results. “We initially thought that the synthetic faces would be less trustworthy than the real faces,” Nightingale said. Because there will always be people who want to exploit deepfake images for malicious purposes, Nightingale stresses the need for stricter ethical guidelines and a stronger legal framework.


Deepfakes, a portmanteau of the words “deep learning” and “fake,” first appeared on the Internet in late 2017. Much of this synthetic media relies on generative adversarial networks (GANs), a deep learning technique that pits two algorithms against each other. The first algorithm, known as the generator, is fed random noise and turns it into an image. This synthetic image is then added to a stream of real images, say, of celebrities, that is fed into the second algorithm, known as the discriminator, which tries to tell the real images from the synthetic ones. Whenever the discriminator can tell the difference, its verdict is used to update the generator, which then tries again; meanwhile, the discriminator is also trained to get better at spotting fakes. After many iterations, the generator starts producing utterly realistic faces of people who do not exist.
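To make this generator-versus-discriminator loop concrete, here is a deliberately tiny sketch in Python. It is not StyleGAN2 or any real deepfake system: the “images” are single numbers, the generator can only shift random noise by a learned offset `b`, and the discriminator is a one-weight logistic classifier. The function name `train_toy_gan` and all parameter values are illustrative assumptions. Each step first updates the discriminator to score real samples high and fakes low, then updates the generator so that its fakes score higher with that discriminator.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(real_mean: float = 3.0, steps: int = 2000,
                  batch: int = 64, lr: float = 0.1, seed: int = 0) -> float:
    """Hypothetical 1-D toy GAN; returns the generator's learned offset b.

    Generator:      G(z) = z + b, with z ~ N(0, 1)
    Discriminator:  D(x) = sigmoid(w * x)  (no bias term, to keep it tiny)
    Real data:      x ~ N(real_mean, 1)
    """
    rng = random.Random(seed)
    b = 0.0  # generator parameter, starts away from the real data
    w = 0.0  # discriminator parameter

    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # Discriminator step: minimise -log D(real) - log(1 - D(fake)),
        # i.e. learn to call real samples "real" and generated ones "fake".
        grad_w = sum((sigmoid(w * x) - 1.0) * x for x in real)
        grad_w += sum(sigmoid(w * x) * x for x in fake)
        w -= lr * grad_w / batch

        # Generator step: minimise -log D(G(z)) (the "non-saturating" loss),
        # i.e. move the fakes in the direction the discriminator calls "real".
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        grad_b = sum((sigmoid(w * x) - 1.0) * w for x in fake)
        b -= lr * grad_b / batch

    return b


if __name__ == "__main__":
    print(train_toy_gan())  # should end up close to real_mean (3.0)
```

With the defaults, the offset `b` should drift from 0 toward `real_mean`, so the generated samples end up where the real data sits. Because this one-weight discriminator is so weak, the toy essentially learns only the location of the data; real GANs use deep networks on both sides, so the discriminator can pick up far subtler differences and push the generator toward photorealistic output.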

Earlier neural networks were largely limited to recognizing images or objects in videos, but with the introduction of GANs, they can now generate their own content. Impressive as it was, early deepfake technology was nowhere near the quality of real video footage, and careful viewers could easily spot the fakes. However, as the technology has advanced at breakneck speed, deepfakes have become indistinguishable to the human eye from real photographs.

Deepfakes are not only a boon to purveyors of fake news; they are also a national security threat and a means of producing content without consent, such as deepfake pornography. Misinformation existed long before, but deepfakes can multiply its volume dramatically. The Obama video cited above is just the tip of the iceberg: doctored clips alone can be enough to mislead people. In only a few years, deepfakes have gone from internet sensation to a notorious source of identity and security threats. In Gabon, a Central African country, a video widely suspected to be a deepfake helped spark an attempted military coup.

Acknowledging these ramifications, several nations have started taking measures to curb deepfake-driven misinformation and to regulate how companies generate such content. The Cyberspace Administration of China recently proposed draft rules to regulate technologies that generate or manipulate text, images, audio, or video using deep learning. The US state of Texas has prohibited deepfake videos designed to sway political elections. These are only preliminary steps against artificially generated fake media, and it is high time that governing bodies also devised plans to tackle new forms of deepfake content, especially content that humans cannot distinguish from the real thing.