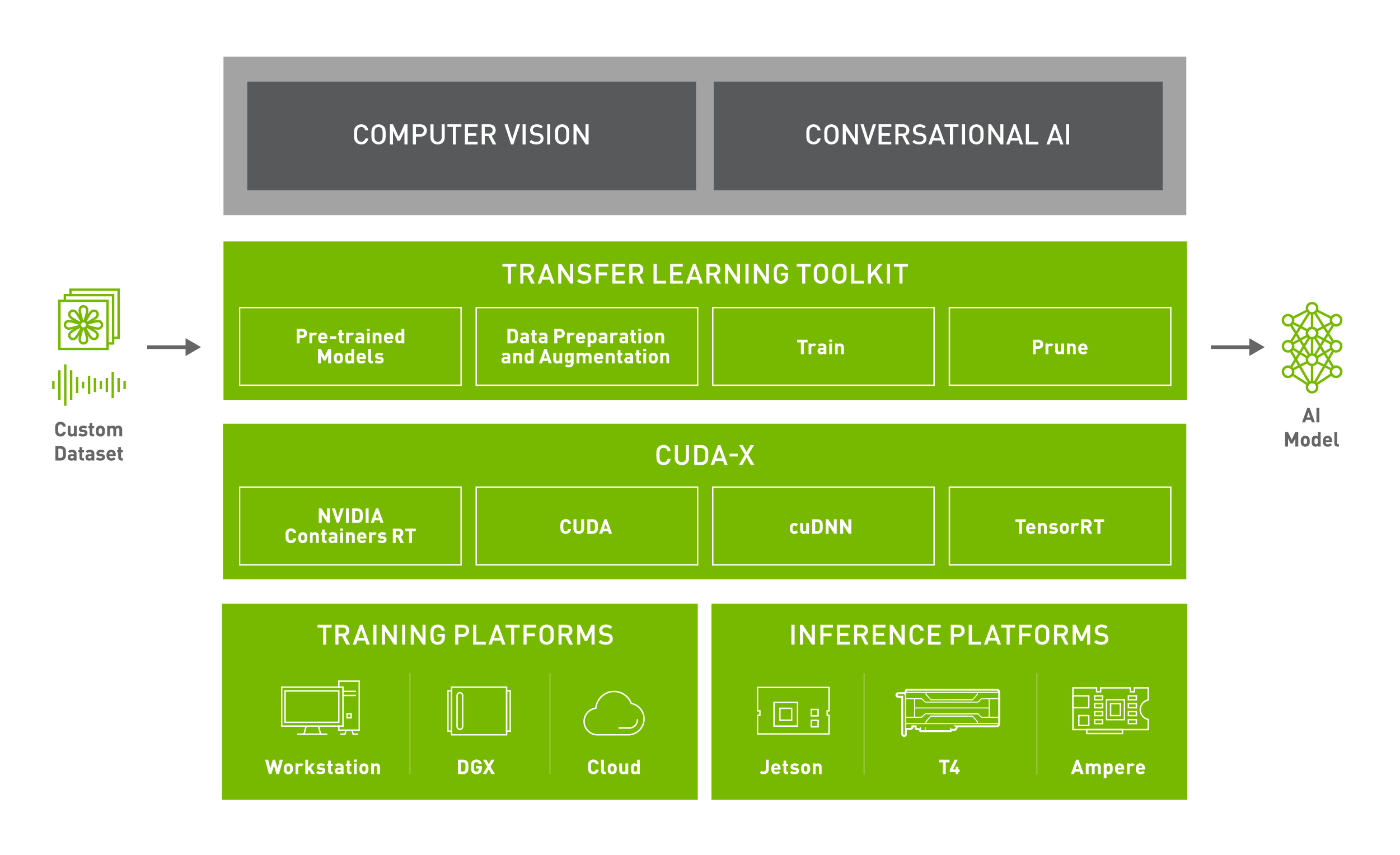

NVIDIA, the largest GPU provider, has released Transfer Learning Toolkit 3.0 to help professionals build production-quality computer vision and conversational AI models without coding. As the name suggests, the toolkit relies on transfer learning, a technique in which the knowledge a deep learning model has already learned on one task is reused as the starting point for a new model, improving the new model's performance.
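To make the idea concrete, here is a minimal sketch of transfer learning in plain Python. It is not how the toolkit is implemented, and every name in it (`frozen_features`, `fine_tune`, `base_weights`, `head`) is hypothetical. It assumes a tiny "pre-trained" layer whose weights are simply random stand-ins, keeps that layer frozen, and trains only a small new output layer on a new task.

```python
import math
import random


def frozen_features(x, base_weights):
    """Frozen 'pre-trained' layer: tanh of weighted sums. Never updated."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in base_weights]


def predict(x, base_weights, head):
    """Probability of class 1 from the new, trainable output layer."""
    feats = frozen_features(x, base_weights)
    z = sum(w * f for w, f in zip(head["w"], feats)) + head["b"]
    return 1.0 / (1.0 + math.exp(-z))


def mean_loss(data, base_weights, head):
    """Average binary cross-entropy over the dataset."""
    eps = 1e-12
    total = 0.0
    for x, y in data:
        p = predict(x, base_weights, head)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)


def fine_tune(data, base_weights, head, lr=0.1, epochs=100):
    """Update only the head with per-sample gradient steps; base stays fixed."""
    for _ in range(epochs):
        for x, y in data:
            feats = frozen_features(x, base_weights)
            err = predict(x, base_weights, head) - y
            head["w"] = [w - lr * err * f for w, f in zip(head["w"], feats)]
            head["b"] -= lr * err
    return head


random.seed(0)

# Assumption: random numbers stand in for weights learned on an earlier task.
base_weights = [[random.uniform(-1, 1) for _ in range(2)] for _ in range(4)]
base_snapshot = [row[:] for row in base_weights]

# New task with a small labeled dataset: label is 1 when x0 + x1 > 0.
data = []
for _ in range(40):
    x = [random.uniform(-1, 1), random.uniform(-1, 1)]
    data.append((x, 1 if x[0] + x[1] > 0 else 0))

head = {"w": [0.0] * 4, "b": 0.0}  # the only trainable parameters
loss_before = mean_loss(data, base_weights, head)
fine_tune(data, base_weights, head)
loss_after = mean_loss(data, base_weights, head)

print("loss before fine-tuning:", loss_before)
print("loss after fine-tuning:", loss_after)
```

Only the five numbers in `head` are trained here, which is why fine-tuning can work with far less data and compute than training every layer from scratch. Real pre-trained models follow the same pattern at a much larger scale.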

To develop a deep learning model, data scientists need substantial compute, large-scale data collection and labeling, and expertise in statistics, mathematics, model development, and more. This prevents practitioners from quickly developing machine learning models and bringing them to market. NVIDIA has therefore released the Transfer Learning Toolkit 3.0 to eliminate the need for such a wide range of expertise when building high-quality models.

Data scientists can now develop models simply by fine-tuning the pre-trained models from the NVIDIA Transfer Learning Toolkit (TLT), even without knowledge of artificial intelligence frameworks. According to the company, TLT can speed up engineering efforts by 10x. In other words, a deep learning model that would usually take 80 weeks to build from the ground up can be developed in 8 weeks with TLT.

These pre-trained models are available for free from NGC and can be used to develop common computer vision and conversational AI models for tasks such as people detection, text recognition, image classification, license plate detection and recognition, vehicle detection and classification, facial landmark estimation, heart rate estimation, and more.

To get started, pull the NVIDIA Transfer Learning Toolkit container from NGC, which comes pre-packaged with Jupyter notebooks, and use it to build state-of-the-art models. Although the toolkit is available for commercial use, you should check the specific license terms of each model.