On Thursday, Microsoft announced a new text-to-speech AI model called VALL-E that can closely simulate a person’s voice when given a three-second audio sample.

Once the model learns a specific voice, it can synthesize audio of that person saying anything while preserving the speaker’s emotional tone. Its creators claim that VALL-E could be used for high-quality text-to-speech applications and audio content creation when combined with other generative AI models like GPT-3.

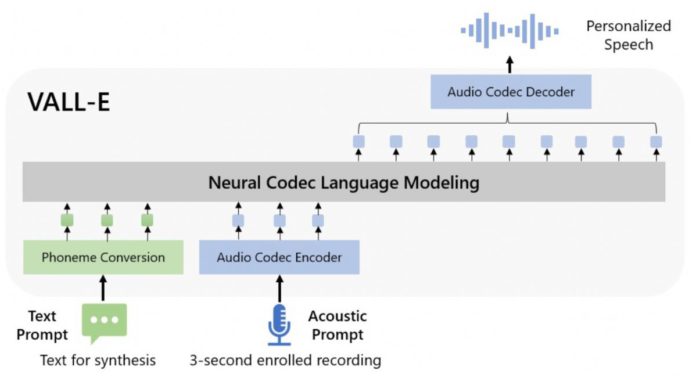

Microsoft describes VALL-E as a neural codec language model which builds on a technology called EnCodec. Unlike other text-to-speech methods that usually synthesize speech by manipulating waveforms, VALL-E creates discrete audio codec codes from text and acoustic prompts using EnCodec.

In essence, VALL-E analyzes how a person sounds, breaks that information into discrete components called tokens, and uses its training data to predict how that voice would sound when speaking phrases outside the three-second sample.
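
To make the idea of "discrete tokens" concrete, here is a minimal Python sketch of the general technique, assuming a toy nearest-neighbor vector quantizer. It is not EnCodec or VALL-E: the random codebook, the frame size, and every function name below are hypothetical choices made for illustration, whereas a real neural codec learns its codebooks from data.

```python
import math
import random


def frame_signal(samples, frame_size):
    """Split a waveform into non-overlapping frames, dropping any remainder."""
    return [samples[i:i + frame_size]
            for i in range(0, len(samples) - frame_size + 1, frame_size)]


def nearest_code(frame, codebook):
    """Return the index of the codebook entry closest to the frame."""
    return min(
        range(len(codebook)),
        key=lambda k: sum((a - b) ** 2 for a, b in zip(frame, codebook[k])),
    )


def encode(samples, codebook, frame_size):
    """Turn a waveform into a list of integer tokens, one per frame."""
    return [nearest_code(f, codebook) for f in frame_signal(samples, frame_size)]


def decode(tokens, codebook):
    """Rebuild an approximate waveform by concatenating codebook entries."""
    out = []
    for t in tokens:
        out.extend(codebook[t])
    return out


# Hypothetical toy setup: a random 16-entry codebook and a short sine wave.
random.seed(0)
FRAME_SIZE = 4
codebook = [[random.uniform(-1, 1) for _ in range(FRAME_SIZE)] for _ in range(16)]
waveform = [math.sin(2 * math.pi * 5 * n / 64) for n in range(64)]

tokens = encode(waveform, codebook, FRAME_SIZE)   # 16 integers in range(16)
reconstruction = decode(tokens, codebook)         # 64 approximate samples
```

The point of the sketch is only that audio can be represented as a sequence of integers, which a language model can then predict in much the same way it predicts text tokens.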

Microsoft trained VALL-E’s speech synthesis system on an audio library called LibriLight, which Meta assembled. It contains about 60,000 hours of English-language speech from over 7,000 speakers, mainly from LibriVox public domain audiobooks. For VALL-E to produce a good result, the voice in the sample must closely match a voice in the training data.