With the release of CUDA 11.3, its software development platform for building GPU-accelerated AI-based applications, NVIDIA adds direct Python support.

Users can now build applications with CUDA in Python without relying on third-party libraries or frameworks. Until now, data scientists have relied on libraries such as TensorFlow, PyTorch, CuPy, scikit-cuda, and RAPIDS to use CUDA from Python.

Since each of these libraries has its own interoperability layer between the CUDA API and Python, data scientists had to remember different workflow structures. With native Python support in CUDA, developing data-based applications on NVIDIA GPUs should become much easier. CUDA Python is also compatible with NVIDIA Nsight Compute, allowing data scientists to gain kernel insights for performance optimization.

Because Python is an interpreted language and parallel programming requires low-level control, NVIDIA provides interoperability with the CUDA Driver API and NVRTC, the CUDA runtime compilation library. As a result, you still have to write the kernel code in C++, but this release could be the first step toward complete interoperability in the future.
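To illustrate what "kernel code in C++, everything else in Python" could look like, here is a minimal sketch. It assumes the bindings are importable as `cuda.nvrtc` and mirror the NVRTC C functions (`nvrtcCreateProgram`, `nvrtcCompileProgram`, `nvrtcGetPTXSize`, `nvrtcGetPTX`), with each call returning a tuple whose first element is the status code; the module path may differ between versions. The `saxpy` kernel and the helper `compile_to_ptx` are illustrative names, not part of NVIDIA's announcement.

```python
# The kernel itself stays in CUDA C++; Python only holds it as a string.
SAXPY_SOURCE = r"""
extern "C" __global__
void saxpy(float a, const float *x, const float *y, float *out, size_t n)
{
    size_t tid = blockIdx.x * blockDim.x + threadIdx.x;
    if (tid < n) {
        out[tid] = a * x[tid] + y[tid];
    }
}
"""


def compile_to_ptx(source, name=b"saxpy.cu", options=()):
    """Compile CUDA C++ source to PTX via NVRTC (hypothetical helper)."""
    # Imported lazily so this file loads even without the bindings.
    # Assumption: the bindings expose NVRTC under `cuda.nvrtc`.
    from cuda import nvrtc

    def check(err):
        # Every NVRTC call reports its status as an nvrtcResult enum.
        if err != nvrtc.nvrtcResult.NVRTC_SUCCESS:
            raise RuntimeError(f"NVRTC error: {err}")

    # Create a program object from the source string (no extra headers).
    err, prog = nvrtc.nvrtcCreateProgram(source.encode(), name, 0, [], [])
    check(err)

    # Compile with optional flags given as bytes, e.g. an architecture flag.
    opts = list(options)
    (err,) = nvrtc.nvrtcCompileProgram(prog, len(opts), opts)
    check(err)

    # Query the PTX size, then copy the PTX into a preallocated buffer.
    err, size = nvrtc.nvrtcGetPTXSize(prog)
    check(err)
    ptx = b" " * size
    (err,) = nvrtc.nvrtcGetPTX(prog, ptx)
    check(err)
    return ptx
```

On a machine with the CUDA toolkit and the Python bindings installed, `compile_to_ptx(SAXPY_SOURCE)` should return the PTX as bytes. Loading and launching it would then go through the Driver API (`cuModuleLoadData`, `cuModuleGetFunction`, `cuLaunchKernel`), which is omitted here to keep the sketch short.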

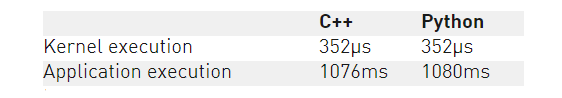

In various performance tests, NVIDIA found that the performance of CUDA Python is similar to that of CUDA used from C++.

In upcoming releases, NVIDIA will publish the source code on GitHub or distribute packages through pip and Conda to simplify access to CUDA Python.

With CUDA 11.3, NVIDIA also ships several other enhancements for developers using C++, along with an improved API for CUDA Graphs. The full list of changes is available in the release notes.