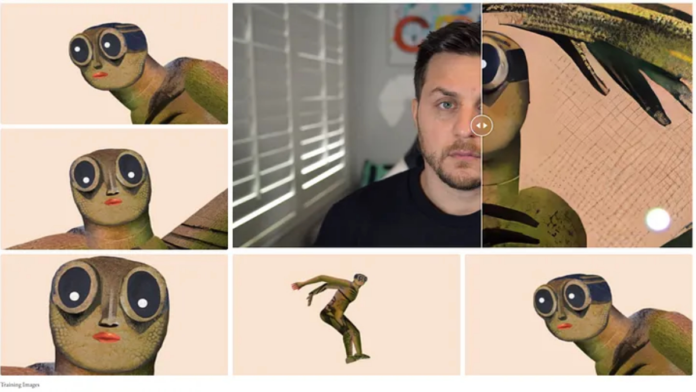

A team of researchers from various institutions has recently developed a structure and content-guided video diffusion model that allows users to edit videos based on visual or textual descriptions of the desired output.

The model is trained jointly on images and videos, which exposes direct control over temporal consistency. This control comes from a novel guidance method that gives users more say over the characteristics of the output.
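The article does not spell out how the guidance method works. As a rough illustration only, guidance of this kind is often written as a classifier-free-guidance-style extrapolation between several denoiser predictions. The sketch below assumes three hypothetical predictions (a per-frame prediction that ignores neighbouring frames, a video prediction without the content prompt, and a video prediction with it) and two hypothetical scales, `temporal_scale` and `content_scale`. None of these names come from the researchers' code.

```python
def guided_prediction(frame_uncond, video_uncond, video_cond,
                      temporal_scale=1.0, content_scale=1.0):
    """Blend three denoiser outputs (hypothetical inputs, see lead-in).

    frame_uncond: prediction that treats each frame independently
    video_uncond: prediction that sees all frames, without the content prompt
    video_cond:   prediction that sees all frames, with the content prompt
    """
    # Moving from the per-frame prediction toward the video prediction
    # strengthens temporal consistency.
    temporal_term = temporal_scale * (video_uncond - frame_uncond)
    # Moving from the unconditional toward the conditional prediction
    # strengthens adherence to the content prompt.
    content_term = content_scale * (video_cond - video_uncond)
    return frame_uncond + temporal_term + content_term
```

With both scales set to 1 the result is simply the conditional video prediction; raising `temporal_scale` above 1 would push the output further toward frame-to-frame consistency under this assumed formulation. The function works on plain numbers or on any array type that supports elementwise arithmetic.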


The main challenge with this diffusion model is maintaining a balance between structure and content. The researchers address this by training the model on monocular depth estimates with varying levels of detail. The results of the researchers' experiments are impressive, with fine-grained control over the output and the ability to customize the model based on a few reference images.
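To make "varying levels of detail" concrete, here is a minimal sketch of one way to degrade a depth map step by step. It assumes a simple repeated box blur as the detail-removing operation; the article does not say which operation the researchers use, and the helper names `box_blur`, `degrade_structure`, and `value_range` are hypothetical.

```python
def box_blur(depth, radius=1):
    """Average each pixel with its neighbours, clamping at the borders."""
    h, w = len(depth), len(depth[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy = min(max(y + dy, 0), h - 1)  # clamp row index
                    xx = min(max(x + dx, 0), w - 1)  # clamp column index
                    total += depth[yy][xx]
                    count += 1
            row.append(total / count)
        out.append(row)
    return out


def degrade_structure(depth, steps):
    """Apply the blur `steps` times; more steps keep less structural detail."""
    out = [[float(v) for v in row] for row in depth]
    for _ in range(steps):
        out = box_blur(out)
    return out


def value_range(depth):
    """Spread between the largest and smallest depth value."""
    flat = [v for row in depth for v in row]
    return max(flat) - min(flat)
```

Under this assumption, a model trained on depth maps at several `steps` values would see both sharp and coarse structure, so a user could later choose how closely the edited video follows the structure of the input.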

The structure- and content-guided video diffusion model is a significant step forward in video editing. It lets users customize and control output characteristics while maintaining a balance between structure and content, and it could have a significant impact on video production and editing.