Meta’s SAM Audio is a big swing at turning audio into a “segment anything” problem – and it could reshape how creators, advertisers, and even surveillance systems handle sound in the next few years. This isn’t just another flashy AI demo; it is a strategic signal about where multimodal foundation models are headed and how quickly audio is catching up with vision.

What Exactly Is SAM Audio?

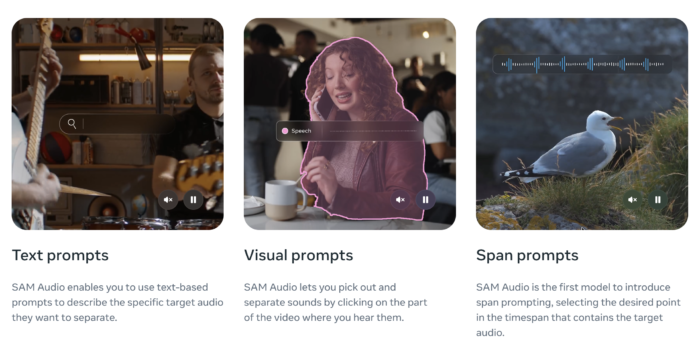

SAM Audio is Meta’s new unified multimodal AI model that can isolate “any sound in anything” using simple prompts across text, time spans, and visuals. Think of it as the audio equivalent of the original Segment Anything Model for images, but tuned to separate voices, instruments, background noise, and arbitrary sound events from messy real-world audio mixtures.

Under the hood, SAM Audio relies on Perception Encoder Audiovisual (PE-AV), an engine built on Meta’s Perception Encoder model. PE-AV lets SAM Audio identify a target sound from multiple cues and then separate it from the rest of the track with minimal collateral damage. Meta is positioning this as the “first unified multimodal model for audio separation”: one model covering many domains (speech, music, sound effects) and many prompt types, instead of today’s fragmented, task-specific tools.
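To make the idea of one model accepting several prompt types more concrete, here is a minimal sketch of how an application might package a request that mixes a text description, time spans, and a visual click. The `SeparationPrompt` class and all of its fields are hypothetical illustrations, not the API of Meta’s SAM Audio repository.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set, Tuple


@dataclass
class SeparationPrompt:
    """Hypothetical request object for a promptable separator (not SAM Audio's real API)."""

    text: Optional[str] = None  # e.g. "solo the guitar"
    # (start_s, end_s) ranges where the target sound is audible
    spans: List[Tuple[float, float]] = field(default_factory=list)
    # (x, y) pixel a user clicked on a sounding object in a video frame
    visual_click: Optional[Tuple[int, int]] = None

    def __post_init__(self) -> None:
        # Reject empty or reversed time spans.
        for start, end in self.spans:
            if not 0 <= start < end:
                raise ValueError(f"invalid span: ({start}, {end})")
        # Require at least one cue so the request is not empty.
        if not self.modalities():
            raise ValueError("at least one prompt modality is required")

    def modalities(self) -> Set[str]:
        """Return which cue types this request actually uses."""
        used = set()
        if self.text:
            used.add("text")
        if self.spans:
            used.add("span")
        if self.visual_click is not None:
            used.add("visual")
        return used


# A mixed-modality request: a text description plus a time span.
prompt = SeparationPrompt(text="remove crowd noise", spans=[(12.0, 15.5)])
print(sorted(prompt.modalities()))  # ['span', 'text']
```

Keeping every cue optional but requiring at least one mirrors the "many prompt types, one model" framing: the same request shape covers text-only, span-only, click-only, and mixed prompts.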

Why This Is a Step Change, Not a Feature

Traditional audio editing is still painfully manual: selecting spectral blobs in DAWs (digital audio workstations), juggling plugins, or running one-off denoisers that only work in narrow scenarios. SAM Audio aims to replace that workflow with natural prompts like “remove crowd noise,” “solo the guitar,” or “mute barking dogs while keeping traffic sounds.” This is not just usability sugar; it abstracts low-level audio engineering into high-level semantic intent, just as image models replaced hand-drawn masks and polygons with requests like “remove the person in the background.”
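Prompts like “solo the guitar” and “remove crowd noise” can be seen as two views of the same separation result: a target stem and everything else. The sketch below assumes a separator has already returned an estimate of the prompted sound; "isolate" keeps that estimate, while "remove" subtracts it from the mixture. The `apply_edit` helper and the synthetic tones are hypothetical, and the "perfect" target estimate is a stand-in for real model output.

```python
import math

SAMPLE_RATE = 8000  # Hz; toy value for the synthetic example


def tone(freq_hz, seconds, amp):
    """Generate a pure sine tone as a list of samples."""
    n = int(SAMPLE_RATE * seconds)
    return [amp * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]


def apply_edit(mixture, target_estimate, mode):
    """'isolate' keeps only the prompted sound; 'remove' keeps everything else."""
    if len(mixture) != len(target_estimate):
        raise ValueError("stems must be the same length")
    if mode == "isolate":
        return list(target_estimate)
    if mode == "remove":
        # Residual = mixture minus the prompted sound.
        return [m - t for m, t in zip(mixture, target_estimate)]
    raise ValueError(f"unknown mode: {mode}")


guitar = tone(440, 0.01, 0.5)
crowd = tone(97, 0.01, 0.2)
mixture = [g + c for g, c in zip(guitar, crowd)]

# Stand-in for a separator's output for the prompt "crowd noise":
# here we pretend separation was perfect.
crowd_estimate = crowd
cleaned = apply_edit(mixture, crowd_estimate, "remove")
```

With a real model the estimate is imperfect, so any leakage in the target stem shows up as artifacts in the residual; that is why separation quality matters as much for "remove" edits as for "solo" edits.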

Meta’s reported benchmarks indicate that SAM Audio achieves state-of-the-art separation quality across domains and runs faster than real time (a reported real-time factor of about 0.7 for model sizes ranging from 500M to 3B parameters). That matters if this is going to live inside creator tools, live production, or consumer apps. The model also benefits from mixed-modality prompting: in Meta’s evaluations, combining, say, a text description with a time span consistently outperforms single-modality inputs, which hints at where practical workflows will converge.
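The real-time factor (RTF) is processing time divided by the duration of the audio processed, so values below 1 mean faster than real time. Here is a small worked example using the reported RTF of about 0.7; the one-hour episode is an illustrative assumption, and actual speed will depend on hardware and model size.

```python
def real_time_factor(processing_seconds: float, audio_seconds: float) -> float:
    """RTF = wall-clock processing time / duration of the audio processed."""
    if audio_seconds <= 0:
        raise ValueError("audio duration must be positive")
    return processing_seconds / audio_seconds


def estimated_processing_seconds(audio_seconds: float, rtf: float) -> float:
    """Invert the definition: expected processing time for a given clip length."""
    return audio_seconds * rtf


REPORTED_RTF = 0.7          # approximate figure reported for SAM Audio
episode_seconds = 60 * 60   # hypothetical one-hour podcast episode

est = estimated_processing_seconds(episode_seconds, REPORTED_RTF)
print(f"~{est / 60:.0f} minutes to process, faster than real time: {REPORTED_RTF < 1}")
# ~42 minutes to process, faster than real time: True
```

An RTF below 1 is necessary for keeping up with a live stream, but it is not sufficient on its own: end-to-end latency also depends on how large a chunk of audio the system buffers before each separation pass.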

Strategic Bets: Multimodality and “Anything Models”

SAM Audio fits neatly into Meta’s broader Segment Anything family (SAM for images, SAM 3D, and now audio), and the pattern is clear: turn messy, continuous real-world data into discrete, controllable segments via prompts. This is less about cool demos and more about building a foundation layer for future AR, VR, and mixed-reality experiences where you must isolate people, objects, and sounds on the fly.

From a research and ecosystem standpoint, Meta has open-sourced SAM Audio on GitHub and made it available in the Segment Anything Playground, which should accelerate experimentation and downstream products in audio-visual segmentation, scene understanding, and generative tooling. For startups, that means the moat won’t come from building the base separator, but from product, UX, proprietary data, and tight integrations into vertical workflows.

Use Cases: From YouTube Creators to Call Centers

The obvious early winners are content creators and post-production teams. With SAM Audio-style tooling, a solo YouTuber or podcaster can achieve studio-grade isolation of dialogue, remove location noise, and create alternate audio stems for shorts, reels, and multilingual dubbing without touching a traditional DAW. Music producers can isolate instruments from live recordings, experiment with arrangements, or remix legacy catalogs that were never multitracked – all from mixed stereo audio.

Enterprise use cases are equally interesting. Contact centers can separate overlapping speakers and background noise for cleaner transcription, analytics, and QA; media monitoring tools can track specific sound events in large audio-visual corpora; and safety applications can detect critical sounds (sirens, alarms, glass breaking) in multi-source environments. When you add the visual prompting capability – clicking on a sounding object in video to isolate its audio – you effectively get a bridge between computer vision and scene-aware acoustics.
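For the safety use case, one plausible pipeline is to isolate a prompted sound such as a siren and then turn that stem into time-stamped events. The sketch below uses a simple frame-energy threshold for that second step; `detect_events`, the frame length, and the threshold are illustrative assumptions, and the synthetic stem stands in for real separator output.

```python
import math


def frame_rms(signal, frame_len):
    """Root-mean-square energy of consecutive, non-overlapping frames."""
    rms = []
    for i in range(0, len(signal), frame_len):
        frame = signal[i:i + frame_len]
        rms.append(math.sqrt(sum(x * x for x in frame) / len(frame)))
    return rms


def detect_events(stem, sample_rate, frame_len=400, threshold=0.1):
    """Return (start_s, end_s) ranges where the isolated stem is active."""
    events = []
    start = None
    for idx, rms in enumerate(frame_rms(stem, frame_len)):
        t = idx * frame_len / sample_rate
        if rms >= threshold and start is None:
            start = t                      # sound switched on
        elif rms < threshold and start is not None:
            events.append((start, t))      # sound switched off
            start = None
    if start is not None:                  # still active at the end of the clip
        events.append((start, len(stem) / sample_rate))
    return events


# Hypothetical isolated "siren" stem: 1 s silence, 0.5 s tone, 0.5 s silence.
sr = 8000
siren = [0.5 * math.sin(2 * math.pi * 440 * n / sr) for n in range(4000)]
stem = [0.0] * 8000 + siren + [0.0] * 4000
print(detect_events(stem, sr))  # [(1.0, 1.5)]
```

A fixed energy threshold is crude; it works here only because separation has already removed competing sources. Production systems would more likely pair the separated stem with a trained sound-event classifier.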

The Uncomfortable Edge: Privacy, Deepfakes, and Surveillance

Like every strong foundation model, SAM Audio also sharpens the knife’s edge of misuse. High-precision voice and sound separation can make it easier to reconstruct clean voiceprints, feeding into more convincing deepfake pipelines or voice-cloning fraud. In parallel, combining SAM Audio with large-scale sensor networks and video analytics could supercharge ambient surveillance, enabling persistent tracking of individuals or events across cities using both sight and sound.

There is also a long-tail privacy risk for everyday users: background chatter in a café, side-conversations in a meeting, or incidental sounds in home videos become more extractable and analyzable than ever before. As with vision models, the core issue is not that SAM Audio exists, but that governance, consent, and policy conversations are lagging far behind the technical capabilities now shipping into consumer-grade tools.

What This Means for Builders and Operators

For product teams, the emergence of SAM Audio reinforces a few critical themes. First, “promptable everything” is becoming table stakes: expect users to increasingly demand natural-language and multimodal control over media, not just sliders and knobs. Second, defensible products in the audio space will need to layer on top of open foundation models with domain-specific interfaces, guardrails, and integrations rather than relying on proprietary separation algorithms alone.

For policy, compliance, and risk leaders, this is the right time to revisit audio data handling: consent frameworks, retention policies, watermarking of synthetic or heavily edited audio, and disclosure norms for AI-assisted edits. The organizations that treat SAM Audio-class models as infrastructure – powerful, neutral, and potentially risky – and invest early in governance will be better positioned than those treating this as a one-off “AI feature” update.

In many ways, SAM Audio is to sound what early object detectors and segmenters were to images: an enabling primitive that quietly unlocks an entire generation of applications. For the AI and analytics ecosystem that Analytics Drift tracks, this release is a reminder that the frontier is no longer just about generating media, but about exerting fine-grained, programmable control over the messy multimodal reality we already live in. As these tools mature and proliferate, the questions around governance, ethics, and responsible deployment will define the winners and shape the next decade of creator tools, enterprise workflows, and AI-driven products.