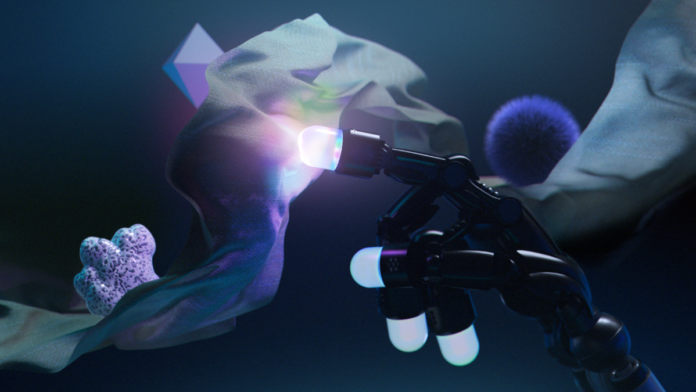

Interacting with the physical world is essential to accomplishing everyday tasks. It comes naturally to humans but remains a struggle for AI systems. Meta is making strides in embodied AI by developing a robotic hand capable of perceiving and interacting with its surroundings.

Meta’s Fundamental AI Research (FAIR) team is collaborating with the robotics community to create agents that can safely coexist with humans. The team regards this as a crucial step toward advanced machine intelligence.

Meta has released several new research tools to enhance touch perception, dexterity, and human-robot interaction. The first is Meta Sparsh, a general-purpose encoder that works across many types of vision-based tactile sensors. It is a family of models trained on large datasets with self-supervised learning, which avoids the need for labels. In evaluation, Meta researchers found that Sparsh outperforms task- and sensor-specific models by an average of over 95% on the benchmark they set.

Meta Digit 360 is another tool from Meta FAIR. It is a tactile fingertip with human-level multimodal sensing abilities and 18 sensing features. Lastly, Meta Digit Plexus provides a standard hardware-software interface for integrating tactile sensors on a single robotic hand.

To develop and commercialize these tactile sensing innovations, Meta has partnered with industry leaders, including GelSight Inc. and Wonik Robotics. GelSight will help Meta manufacture and distribute Meta Digit 360, which will be available for purchase next year. In partnership with Wonik Robotics, Meta is poised to create an advanced, dexterous robotic hand that integrates tactile sensing through Meta Digit Plexus.

Meta believes collaborating across industries is the best way to advance robotics for the greater good. To advance human-robot collaboration, Meta launched the PARTNR benchmark, a standardized framework for evaluating planning and reasoning in human-robot interactions. The benchmark comprises 100,000 natural language tasks and supports systematic analysis of LLMs and vision models in real-world scenarios.

Through these initiatives, Meta aims to transform AI models from mere agents into partners capable of effectively interacting with humans.