Computer Vision and Pattern Recognition Conference: NVIDIA has made another attempt to transform still 2D images into 3D objects with AI. The GPU giant uses a technique dubbed 3D MoMa for the conversion. 3D MoMa relies on photo measurements taken via photogrammetry and speeds up the reconstruction process.

The company has already been researching neural radiance fields to create 3D scenes from 2D source images. However, the newly unveiled 3D MoMa technology takes a very different approach.

3D MoMa: The technology uses AI to approximate physical attributes such as lighting and geometry from 2D images, then reconstructs realistic 3D objects. Objects made using 3D MoMa are triangle mesh models that can be imported into graphics engines. On an NVIDIA Tensor Core GPU, the conversion can be done within an hour. The inverse rendering technique, which unifies computer graphics and computer vision, is what speeds up the process.
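To give a feel for what "inverse rendering" means, here is a toy sketch in plain Python. It is not NVIDIA's 3D MoMa pipeline; it assumes a much simpler setup in which the surface normals are already known, the shading is purely Lambertian, and only a scalar albedo and a light direction have to be recovered from pixel brightness. All names (`render`, `fit_lighting`, `sample_normals`) are hypothetical and exist only for this illustration.

```python
import math
import random


def render(normals, b):
    """Forward (Lambertian) render: pixel = max(0, n . b), with b = albedo * light_dir."""
    return [max(0.0, n[0] * b[0] + n[1] * b[1] + n[2] * b[2]) for n in normals]


def fit_lighting(normals, target, steps=500, lr=0.5):
    """Inverse render: gradient descent on mean squared pixel error to recover b."""
    b = [0.0, 0.0, 0.1]  # initial guess: dim light pointing at the camera
    m = len(normals)
    for _ in range(steps):
        grad = [0.0, 0.0, 0.0]
        for n, t in zip(normals, target):
            d = n[0] * b[0] + n[1] * b[1] + n[2] * b[2]
            if d > 0.0:  # unlit pixels (clamped to 0) contribute no gradient
                residual = d - t
                for k in range(3):
                    grad[k] += 2.0 * residual * n[k] / m
        b = [b[k] - lr * grad[k] for k in range(3)]
    return b


def sample_normals(count, seed=0):
    """Assumed toy geometry: unit normals within a cone around the +z axis."""
    rng = random.Random(seed)
    normals = []
    for _ in range(count):
        z = rng.uniform(0.8, 1.0)
        phi = rng.uniform(0.0, 2.0 * math.pi)
        r = math.sqrt(1.0 - z * z)
        normals.append((r * math.cos(phi), r * math.sin(phi), z))
    return normals


# Ground truth used only to synthesize the "photo" (assumed values).
true_albedo = 0.7
raw = (0.3, 0.2, 0.9)
norm = math.sqrt(sum(c * c for c in raw))
true_light = [c / norm for c in raw]

normals = sample_normals(200)
photo = render(normals, [true_albedo * c for c in true_light])

# Recover albedo and light direction from the pixels alone.
b = fit_lighting(normals, photo)
albedo = math.sqrt(sum(c * c for c in b))
light = [c / albedo for c in b]
```

The same loop, run forward through a renderer and backward through its gradients, is the general idea behind differentiable inverse rendering. A real system would optimize mesh geometry, materials, and lighting jointly rather than the single three-component vector used here.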


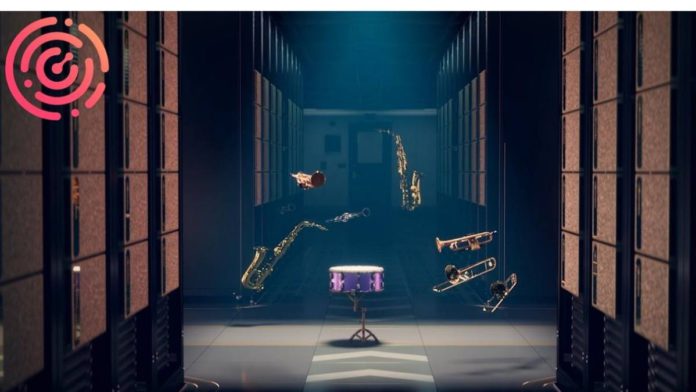

As an example, NVIDIA’s research and creative teams used 3D MoMa to reconstruct a set of jazz instruments. The team then imported the newly created models into NVIDIA Omniverse and dynamically changed their characteristics.

David Luebke, Vice President of Graphics Research at NVIDIA, said, “inverse rendering is a holy grail unifying computer vision and computer graphics.”

He added, “By formulating every piece of the inverse rendering problem as a GPU-accelerated differentiable component, the NVIDIA 3D MoMa rendering pipeline uses the machinery of modern AI and the raw computational horsepower of NVIDIA GPUs to quickly produce 3D objects that creators can import, edit, and extend without limitation in existing tools.”

3D MoMa is still in the works, but NVIDIA believes it will allow game developers and designers to swiftly edit 3D objects and integrate them into any virtual scene.