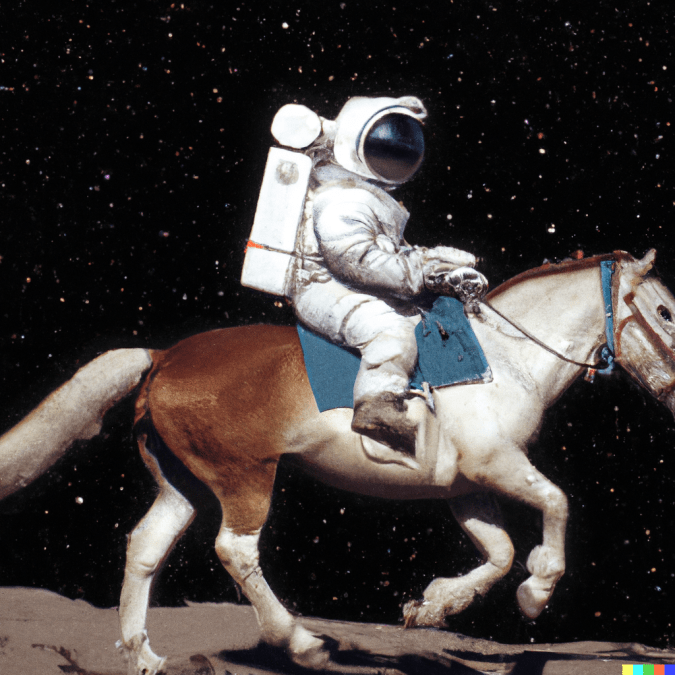

In January 2021, OpenAI launched DALL-E, a 12-billion-parameter version of GPT-3 trained to generate images from text descriptions using a dataset of text-image pairs. Its name is a portmanteau of the artist “Salvador Dalí” and the robot “WALL-E.” DALL-E’s striking results were an instant hit in the AI community, and the model also received extensive mainstream media coverage. By combining unrelated concepts, it can synthesize objects that don’t exist in the real world, and it can also perform prompt-based image-to-image translation tasks. Recently, OpenAI unveiled DALL-E 2, an upgrade to its text-to-image generator that produces higher-resolution images with lower latency than the original system.

![Teddy bears mixing sparkling chemicals as mad scientists, steampunk](/cdn.vox-cdn.com/uploads/chorus_image/image/70716637/DALL_E_Teddy_bears_mixing_sparkling_chemicals_as_mad_scientists__steampunk.0.png)

Source: OpenAI

When DALL-E was first announced, OpenAI said it would continue to improve the system while examining potential risks such as bias in image generation and the spread of misinformation. It sought to address these challenges with technical safeguards and a new content policy, while also reducing the model’s computational load and advancing its core capabilities.

DALL-E 2 builds on CLIP, OpenAI’s image recognition system, which was designed to look at an image and summarize its content in terms people can understand. OpenAI inverted this process to create “unCLIP,” a model that starts from a text description and works its way to an image.
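
CLIP works by mapping images and captions into a shared embedding space, so that a matching image and caption end up pointing in similar directions. The sketch below illustrates only that scoring idea: it picks the caption whose embedding has the highest cosine similarity to an image embedding. The four-dimensional vectors and the captions in the comments are made-up stand-ins for real CLIP encoder outputs, and `normalize` and `best_caption` are hypothetical helper names, not part of any OpenAI library.

```python
import numpy as np

def normalize(x: np.ndarray) -> np.ndarray:
    """Scale vectors to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def best_caption(image_emb: np.ndarray, caption_embs: np.ndarray) -> int:
    """Return the index of the caption embedding closest to the image embedding."""
    sims = normalize(caption_embs) @ normalize(image_emb)
    return int(np.argmax(sims))

# Hypothetical toy embeddings standing in for CLIP encoder outputs.
# Real CLIP embeddings are much larger and come from trained encoders.
image_emb = np.array([0.9, 0.1, 0.0, 0.2])
caption_embs = np.array([
    [0.0, 1.0, 0.0, 0.0],  # e.g. "a city at night"
    [1.0, 0.0, 0.1, 0.3],  # e.g. "a teddy bear in a lab coat"
    [0.0, 0.0, 1.0, 0.0],  # e.g. "a bowl of soup"
])

print(best_caption(image_emb, caption_embs))  # prints 1
```

unCLIP runs in the opposite direction: instead of scoring existing images against text, it generates an image whose embedding fits the text.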

Users can now select and edit specific parts of existing photographs, add or remove objects along with their shadows, mash up two images into a single collage, and create variations of an existing image. The output images are also 1,024 × 1,024 pixels, compared with the 256 × 256 pixels produced by the previous version.

DALL-E 2 works in two stages: the first produces a CLIP image embedding from a text caption, and the second generates an image from that embedding. The results are impressive and could have a significant impact on the art and graphic design industries, particularly video game companies, which hire designers to painstakingly build worlds and concept art.
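
The two-stage data flow can be sketched as follows. OpenAI’s unCLIP work calls the first stage the “prior” (text embedding to image embedding) and the second the “decoder” (image embedding to pixels). In this toy version, random matrices stand in for the trained networks, and the byte-count text encoder, the embedding size, and the 16 × 16 output size are all hypothetical choices made only so the shapes line up; the real decoder is a diffusion model, not a single matrix.

```python
import numpy as np

EMB_DIM = 8    # hypothetical embedding size (real CLIP embeddings are larger)
IMG_SIZE = 16  # hypothetical output resolution for this toy example

rng = np.random.default_rng(0)
# Random matrices stand in for trained networks; they only show the data flow.
W_text = rng.standard_normal((EMB_DIM, 256))
W_prior = rng.standard_normal((EMB_DIM, EMB_DIM))
W_decoder = rng.standard_normal((IMG_SIZE * IMG_SIZE * 3, EMB_DIM))

def encode_text(caption: str) -> np.ndarray:
    """Hypothetical stand-in for CLIP's text encoder: byte counts -> embedding."""
    counts = np.zeros(256)
    for b in caption.encode("utf-8"):
        counts[b] += 1
    return W_text @ counts

def prior(text_emb: np.ndarray) -> np.ndarray:
    """Stage 1 stand-in: map a text embedding to a CLIP image embedding."""
    return W_prior @ text_emb

def decoder(image_emb: np.ndarray) -> np.ndarray:
    """Stage 2 stand-in: turn an image embedding into RGB values in [0, 1]."""
    pixels = W_decoder @ image_emb
    pixels = (np.tanh(pixels) + 1.0) / 2.0  # squash into [0, 1]
    return pixels.reshape(IMG_SIZE, IMG_SIZE, 3)

def generate(caption: str) -> np.ndarray:
    """Caption -> text embedding -> image embedding -> image."""
    return decoder(prior(encode_text(caption)))

img = generate("teddy bears mixing sparkling chemicals")
print(img.shape)  # prints (16, 16, 3)
```

Splitting generation this way means the decoder only has to learn how to render a CLIP image embedding, while the prior handles the translation from language.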

DALL-E 2 produces images that are larger and more detailed than the original’s: 1,024 × 1,024 pixels is 16 times as many pixels as 256 × 256. This improvement comes from the switch to a diffusion model, a type of image generator that starts with pure noise and refines it step by step, making the image a little more like the one requested until no noise is left.
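
The “start from noise and refine” loop can be sketched with a generic DDPM-style sampler, a standard diffusion recipe and not OpenAI’s actual decoder code. The sampler below uses the linear 1,000-step noise schedule from the DDPM paper. A real system would call a trained network to predict the noise at each step; here a hypothetical `oracle_noise` function that already knows the clean 3 × 4 target image plays that role, so the loop should land exactly on the target.

```python
import numpy as np

def ddpm_sample(predict_noise, shape, betas, rng):
    """DDPM-style ancestral sampling: start from pure Gaussian noise and
    strip away a little of the predicted noise at every step."""
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    x = rng.standard_normal(shape)  # x_T: pure noise
    for t in reversed(range(len(betas))):
        eps = predict_noise(x, t)  # estimate of the noise contained in x_t
        # Mean of x_{t-1} given x_t and the predicted noise.
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            # Re-inject a smaller amount of fresh noise (variance beta_t).
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(shape)
        else:
            x = mean  # final step: no noise left
    return x

# Linear schedule from the DDPM paper: 1,000 steps.
betas = np.linspace(1e-4, 0.02, 1000)
alpha_bars = np.cumprod(1.0 - betas)

# Hypothetical 3x4 "clean image" the sampler should recover.
target = np.linspace(0.0, 1.0, 12).reshape(3, 4)

def oracle_noise(x, t):
    # Stand-in for a trained denoising network: because the target is known,
    # we can compute exactly how much noise separates x_t from it.
    return (x - np.sqrt(alpha_bars[t]) * target) / np.sqrt(1.0 - alpha_bars[t])

rng = np.random.default_rng(0)
out = ddpm_sample(oracle_noise, target.shape, betas, rng)
print(np.allclose(out, target))  # prints True
```

With a learned model in place of the oracle, the prediction at each step is only approximate, which is why the process is spread over many small denoising steps rather than one jump.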

DALL-E 2 can also intelligently replace a selected area of an image. You can also give the system an example image, and it will produce as many variations of it as you like, ranging from near copies to looser artistic reinterpretations.
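
Region replacement depends on a mask that marks which pixels the model may change. The sketch below shows only the final compositing idea common to mask-based editing: keep original pixels outside the mask and take generated pixels inside it. It does not show how DALL-E 2 generates the new content. The all-black “photo,” the all-white “model output,” the 4 × 4 size, and the `composite_edit` helper are all hypothetical.

```python
import numpy as np

def composite_edit(original: np.ndarray, generated: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Keep original pixels where mask == 0 and use generated pixels where mask == 1."""
    mask = mask[..., None]  # broadcast the H x W mask over the RGB channels
    return mask * generated + (1.0 - mask) * original

H, W = 4, 4
original = np.zeros((H, W, 3))   # hypothetical source photo (all black)
generated = np.ones((H, W, 3))   # hypothetical model output (all white)
mask = np.zeros((H, W))
mask[1:3, 1:3] = 1.0             # user-selected region to replace

edited = composite_edit(original, generated, mask)
print(edited[:, :, 0])           # only the central 2 x 2 block changes
```

A soft mask with values between 0 and 1 would blend the two images along the edges of the selection instead of switching abruptly.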

Unlike the earlier version, which was open for anyone to try on the OpenAI website, the new version is currently available only to verified partners for testing, and they are limited in what they may upload or generate with it. They are prohibited from uploading or creating images that are “not G-rated” and “could cause harm,” such as hate symbols, nudity, obscene gestures, or “big conspiracies or events relating to important ongoing geopolitical events.” They must also disclose how AI was used to create the images, and they cannot share the images with others via an app or website.

Existing testers are also prohibited from exporting their work to third-party platforms. However, OpenAI aims to add DALL-E 2 to its API toolkit in the future, which would allow it to power third-party apps. In the meantime, if you want to try DALL-E 2 yourself, you can join the waitlist on OpenAI’s website.