Meta AI has announced the release of DINOv2, two years after debuting DINO, its self-supervised vision transformer model. In contrast to comparable models such as CLIP, DINOv2 performs strongly out of the box and doesn't need fine-tuning.

Meta accomplished this by borrowing an approach from natural language processing, where models are pretrained on vast volumes of raw text using pretext objectives such as language modeling or unsupervised word vectors, and applying it to images. The model is open source and was pretrained on 142 million unlabeled images in a self-supervised manner.

DINOv2 produces high-performance visual features that can be used directly as inputs to simple linear classifiers. Because of this adaptability, DINOv2 can serve as a multipurpose backbone for a variety of computer vision tasks, according to a blog post from the company.
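To make the idea of a linear classifier on frozen features concrete, here is a minimal sketch in plain Python. It is not Meta's code: the helper names (`make_stand_in_features`, `train_linear_probe`, `predict`) are hypothetical, and the feature vectors are synthetic stand-ins for the embeddings a frozen DINOv2 backbone would produce. The point is only that the features stay fixed while a single linear layer is trained on top of them.

```python
import math
import random


def make_stand_in_features(n_per_class=20, dim=8, seed=0):
    """Synthetic stand-ins for backbone embeddings (an assumption, not real
    DINOv2 outputs): two noisy clusters, one per class."""
    rng = random.Random(seed)
    features, labels = [], []
    for label, center in enumerate((1.0, -1.0)):
        for _ in range(n_per_class):
            features.append([center + rng.gauss(0.0, 0.3) for _ in range(dim)])
            labels.append(label)
    return features, labels


def logits_for(weights, biases, x):
    """Linear scores: one dot product plus bias per class."""
    return [sum(w_j * x_j for w_j, x_j in zip(w, x)) + b
            for w, b in zip(weights, biases)]


def train_linear_probe(features, labels, num_classes, lr=0.5, epochs=200):
    """Fit a softmax linear classifier with batch gradient descent.
    The input features are never modified, mirroring a frozen backbone."""
    dim, n = len(features[0]), len(features)
    weights = [[0.0] * dim for _ in range(num_classes)]
    biases = [0.0] * num_classes
    for _ in range(epochs):
        grad_w = [[0.0] * dim for _ in range(num_classes)]
        grad_b = [0.0] * num_classes
        for x, y in zip(features, labels):
            scores = logits_for(weights, biases, x)
            top = max(scores)  # subtract the max for numerical stability
            exps = [math.exp(s - top) for s in scores]
            total = sum(exps)
            for c in range(num_classes):
                err = exps[c] / total - (1.0 if c == y else 0.0)
                grad_b[c] += err
                for j in range(dim):
                    grad_w[c][j] += err * x[j]
        for c in range(num_classes):
            biases[c] -= lr * grad_b[c] / n
            for j in range(dim):
                weights[c][j] -= lr * grad_w[c][j] / n
    return weights, biases


def predict(weights, biases, x):
    """Return the index of the highest-scoring class."""
    scores = logits_for(weights, biases, x)
    return max(range(len(scores)), key=scores.__getitem__)


features, labels = make_stand_in_features()
weights, biases = train_linear_probe(features, labels, num_classes=2)
accuracy = sum(predict(weights, biases, x) == y
               for x, y in zip(features, labels)) / len(labels)
print(f"training accuracy: {accuracy:.2f}")
```

In practice the synthetic vectors would be replaced by embeddings computed once from the pretrained model, and the classifier would be evaluated on held-out images rather than on its own training set.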

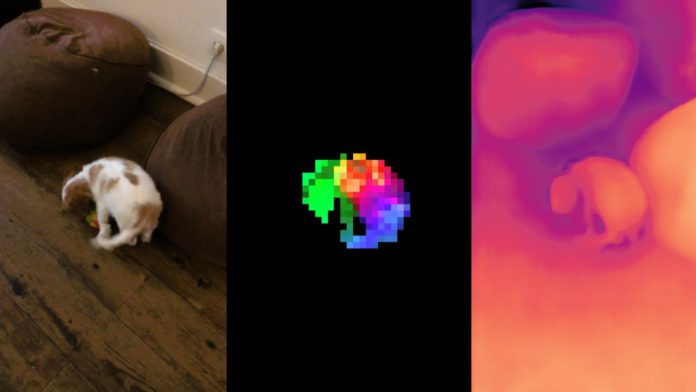

DINOv2 assists with tasks like depth estimation, image classification, semantic segmentation, and image retrieval without requiring expensive labeled data, which should save developers a great deal of time and resources.
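Of the tasks listed, image retrieval is the easiest to illustrate: images are ranked by how similar their embeddings are to the embedding of a query image. The sketch below assumes cosine similarity as the metric, which is a common choice rather than something the article specifies. The file names and three-dimensional vectors are made up for illustration; real embeddings would come from the model and have far more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, gallery, top_k=2):
    """Return the names of the top_k gallery items most similar to the query."""
    ranked = sorted(
        gallery.items(),
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:top_k]]


# Hypothetical gallery: names and vectors are illustrative placeholders.
gallery = {
    "cat_1.jpg": [0.9, 0.1, 0.0],
    "cat_2.jpg": [0.8, 0.2, 0.1],
    "car_1.jpg": [0.0, 0.1, 0.9],
}
query = [1.0, 0.0, 0.0]  # stand-in for the embedding of a query image

results = retrieve(query, gallery, top_k=2)
print(results)
```

No labels are involved at any point, which is why this kind of retrieval works directly on features learned without supervision.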

According to Meta, the model uses self-supervised learning and produces results that match or exceed those of the standard approaches used in each of these fields.

Self-supervised learning is the key appeal, since it lets DINOv2 learn adaptable, general-purpose backbones for a range of computer vision applications and tasks. No further fine-tuning is needed before using the model in different domains.