With the launch of Robotic Transformer 2 (RT-2), a new AI model, Google is taking a significant step toward making its robots smarter. RT-2 is a vision-language-action (VLA) model that improves on its predecessor and gives robots a better understanding of visual and linguistic patterns. This helps them interpret instructions accurately and choose the objects best suited to a particular need.

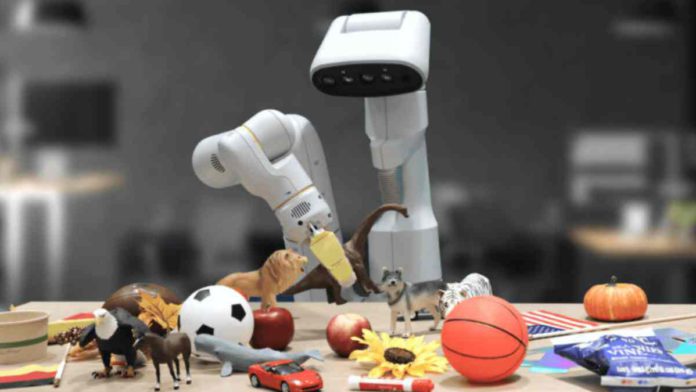

In recent tests, researchers used a robotic arm to evaluate RT-2 in a mock office kitchen. Asked to identify an object that could serve as an improvised hammer, the robot picked a rock; asked to choose a beverage for a fatigued person, it picked a Red Bull. The researchers also instructed the robot to move a Coke can to a photo of Taylor Swift, which seemed to reveal the robot’s unexpected fondness for the well-known singer.

Google trained RT-2 on a combination of web and robotics data, taking advantage of developments in large language models like Bard, Google’s own language model. Combining this linguistic data with robotic expertise, particularly an understanding of how robotic joints should move, proved to be a successful strategy. RT-2 can also follow instructions given in languages other than English, a significant advance in the cross-lingual capabilities of AI-driven robots.

Before the development of VLA models like RT-2, teaching robots required laborious, time-consuming programming for each distinct task. These models instead let robots draw on a vast body of data, allowing them to reach conclusions and make judgements quickly.

The new robot is not perfect, however. In a live demonstration covered by The New York Times, it struggled to identify soda flavors correctly and frequently misidentified fruit as the color white. These flaws underscore how difficult it remains to make AI technology work in practical applications.